
Server Efficiency: Aligning Energy Use With Workloads

The role of energy proportionality in the energy-efficient performance of a server's processor is important, writes Winston Saunders of Intel. Making server energy use more proportional to workload dramatically improves efficiency at common data center workloads.

June 12, 2012

Winston Saunders has worked at Intel for nearly two decades in both manufacturing and product roles. He has worked in his current role leading server and data center efficiency initiatives since 2006. Winston is a graduate of UC Berkeley and the University of Washington. You can find him online at “Winston on Energy” on Twitter.


WINSTON SAUNDERS, Intel

At Intel, we launched the newest Xeon E5-2600 series of server microprocessors to great fanfare this spring.

Of course, the performance of this processor series is world-record amazing, and I wrote about some of its potential TCO advantages around the time of launch.

Energy Efficient Performance

The subject here is another leadership aspect: the role of energy proportionality in the energy-efficient performance of the Xeon E5-2600. Why is this important? As Luiz André Barroso and Urs Hölzle of Google pointed out in their 2007 article, "The Case for Energy-Proportional Computing," most servers operate at less than peak workload and hence at efficiencies well below their theoretical optimum. Making server energy use more proportional to workload dramatically improves efficiency at common data center workloads. The authors showed that a server whose idle power is 10 percent of its maximum power delivers more than 80 percent of its full-load efficiency at any utilization above 30 percent. Greater proportionality means greater efficiency at actual workloads.
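To see where a figure like "more than 80 percent above 30 percent utilization" comes from, here is a minimal Python sketch of an energy-proportional server. It assumes, for illustration only, that power rises linearly from idle to peak and that work done is proportional to utilization; Barroso and Hölzle's own curves may differ in detail, and the function names are hypothetical.

```python
def relative_efficiency(utilization: float, idle_fraction: float) -> float:
    """Efficiency at a given utilization, as a fraction of full-load efficiency.

    Illustrative assumption: power rises linearly from idle to peak, so with
    peak power normalized to 1, P(u) = f + (1 - f) * u, where f = idle_fraction.
    Work is taken to be proportional to u, so efficiency is u / P(u), and
    full-load efficiency is 1 / 1 = 1.
    """
    if not 0.0 < utilization <= 1.0:
        raise ValueError("utilization must be in (0, 1]")
    if not 0.0 <= idle_fraction < 1.0:
        raise ValueError("idle_fraction must be in [0, 1)")
    power = idle_fraction + (1.0 - idle_fraction) * utilization
    return utilization / power


def min_utilization_for(target: float, idle_fraction: float) -> float:
    """Lowest utilization at which relative efficiency reaches `target`.

    Relative efficiency increases with u, so solving
    u / (f + (1 - f) * u) >= r for u gives u >= r * f / (1 - r * (1 - f)).
    """
    if not 0.0 < target < 1.0:
        raise ValueError("target must be in (0, 1)")
    if not 0.0 <= idle_fraction < 1.0:
        raise ValueError("idle_fraction must be in [0, 1)")
    return target * idle_fraction / (1.0 - target * (1.0 - idle_fraction))


if __name__ == "__main__":
    # A server whose idle power is 10 percent of its peak power.
    for u in (0.1, 0.3, 0.5, 1.0):
        print(f"utilization {u:.0%}: {relative_efficiency(u, 0.10):.1%} of full-load efficiency")
    print(f"80% efficiency threshold: {min_utilization_for(0.80, 0.10):.1%} utilization")
```

With a 10 percent idle fraction, this linear model gives about 81 percent of full-load efficiency at 30 percent utilization and crosses the 80 percent mark at roughly 29 percent utilization, in line with the figure quoted above.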

So how have we done? Let's look at the trend in published SPECpower_ssj2008 data. The first chart below shows the evolution of SPECpower data for systems representative of different processor generations, under specific configurations and test conditions: 2006, 2008, 2009, 2010, and 2012.

[Chart: system power versus SPECpower_ssj2008 workload for representative servers from 2006 to 2012, with an inset showing compound annual growth in performance and efficiency. Graphic courtesy of Intel.]

How does one read the graph? System workload is plotted along the X-axis (from active idle to a load point of 100% system capacity) and system power along the Y-axis. The curves for each server follow an intuitive progression: as system workload increases, power use increases. How steeply it increases reflects the proportionality of the system. Note that higher performance is to the right and lower power is down, so higher efficiency lies toward the lower right.
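Reading efficiency off the graph amounts to dividing work by power at each load point. SPECpower_ssj2008 rolls those points into one overall score: the sum of ssj_ops over the ten target load levels (100 percent down to 10 percent) divided by the sum of average power over those ten levels plus active idle. The Python sketch below shows that arithmetic; the function names and the sample numbers are hypothetical, not taken from any published result.

```python
from typing import Sequence

LOAD_LEVELS = 10  # SPECpower_ssj2008 target loads: 100%, 90%, ..., 10%


def point_efficiency(ssj_ops: Sequence[float], avg_power_w: Sequence[float]) -> list[float]:
    """ssj_ops per watt at each load level (one point on a power-vs-load curve)."""
    if len(ssj_ops) != len(avg_power_w):
        raise ValueError("need one power reading per load level")
    return [ops / watts for ops, watts in zip(ssj_ops, avg_power_w)]


def overall_ssj_ops_per_watt(ssj_ops: Sequence[float],
                             avg_power_w: Sequence[float],
                             active_idle_power_w: float) -> float:
    """Overall score: total ops across the ten load levels divided by total
    average power across those levels plus active idle (where ops are zero)."""
    if len(ssj_ops) != LOAD_LEVELS or len(avg_power_w) != LOAD_LEVELS:
        raise ValueError(f"expected {LOAD_LEVELS} load levels")
    return sum(ssj_ops) / (sum(avg_power_w) + active_idle_power_w)


if __name__ == "__main__":
    # Hypothetical measurements, ordered from the 100% load level down to 10%.
    ops = [100_000.0 * k for k in range(10, 0, -1)]
    power = [250.0 - 12.0 * i for i in range(10)]
    print([round(e) for e in point_efficiency(ops, power)])
    print(round(overall_ssj_ops_per_watt(ops, power, active_idle_power_w=60.0)))
```

Because active idle adds power but no work to the denominator, a system with high idle power is penalized in the overall score even if its full-load efficiency is good.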

Increased Performance Over Generations

What’s first evident from the graph is the higher peak performance of each successive generation, the progression known colloquially as “Moore’s Law.” Because the peak power of these systems stays relatively constant at about 250 watts, each performance increase (more “work”) also brings an inherent gain in “peak” energy efficiency.

However, the graph reveals an additional progression toward lower power at low utilization, that is, toward even greater energy-efficiency gains at actual data center workloads through “energy proportionality.” Assuming each system runs at the mid-load point, average power dropped from a little over 200 watts in 2006 to about 120 watts in 2012. That’s a net power reduction of about 40 percent and, assuming energy costs of $0.10/kWh and a PUE of 2.0, an operational cost saving of about $150 per year per server. In addition, the work output capability at that load point increased by more than a factor of 10.
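The cost figure follows from simple arithmetic, sketched below in Python. It assumes the server runs around the clock at the mid-load point for a full year; the helper names are hypothetical, and 205 W is an illustrative stand-in for "a little over 200 watts."

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours; assumes 24x7 operation, no leap day


def annual_savings_usd(old_power_w: float, new_power_w: float,
                       price_per_kwh: float = 0.10, pue: float = 2.0) -> float:
    """Yearly energy-cost savings when a server's average power drops.

    PUE (total facility power / IT equipment power) scales the server's savings
    up to include facility overhead such as cooling and power distribution.
    """
    saved_kwh_per_year = (old_power_w - new_power_w) / 1000.0 * HOURS_PER_YEAR * pue
    return saved_kwh_per_year * price_per_kwh


def fractional_reduction(old_power_w: float, new_power_w: float) -> float:
    """Power reduction as a fraction of the old power."""
    return (old_power_w - new_power_w) / old_power_w


if __name__ == "__main__":
    # Mid-load power from the text: a little over 200 W (2006) vs. about 120 W (2012).
    for old in (200.0, 205.0):  # 205 W is a hypothetical "a little over 200 W"
        print(f"{old:.0f} W -> 120 W: {fractional_reduction(old, 120.0):.0%} less power, "
              f"${annual_savings_usd(old, 120.0):.0f}/year saved")
```

At exactly 200 W the savings come to about $140 a year; at 205 W they reach about $149, which is how a starting point of a little over 200 watts rounds to roughly $150.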

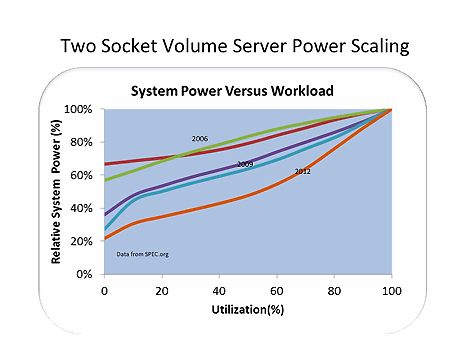

It’s notable that the big transitions happen primarily at what we call “architecture tocks” rather than at process technology “ticks.” You can see this plainly in the graph below, which shows how energy use scales with system workload, relative to the peak. Without going into specifics, the improvement is easy to understand once you realize that proportionality has a lot to do with “turning out the lights” when the workload leaves the room. That means architecture.

[Chart: system power relative to peak versus workload for two-socket volume servers across processor generations. Graphic courtesy of Intel.]

The implication of these generations of architectural improvement is that efficiency gains, as measured by SPECpower, exceed the performance increases alone. As the inset of the first graph shows, performance (as measured at the 100 percent workload point in SPECpower) has increased at a 45 percent compound annual growth rate (CAGR), while efficiency (as measured by the SPECpower score) has increased at a much higher 60 percent CAGR.
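To make the two growth rates concrete, the Python sketch below compounds them. It assumes, as a simplification, that both rates hold evenly over the six years from the 2006 system to the 2012 system; the helper names are hypothetical. Because the SPECpower score is work divided by power, the ratio of the two growth factors gives the implied change in average power.

```python
def growth_factor(rate: float, years: float) -> float:
    """Total growth after compounding `rate` (e.g. 0.45 for 45%) for `years` years."""
    return (1.0 + rate) ** years


def cagr(start: float, end: float, years: float) -> float:
    """Compound annual growth rate that turns `start` into `end` over `years` years."""
    return (end / start) ** (1.0 / years) - 1.0


if __name__ == "__main__":
    years = 2012 - 2006  # assumption: both rates hold across the systems in the first chart
    perf = growth_factor(0.45, years)  # performance at 100 percent load
    eff = growth_factor(0.60, years)   # SPECpower score (ops per watt)
    # Efficiency = work / power, so average power scales by perf / eff.
    print(f"performance x{perf:.1f}, efficiency x{eff:.1f}, "
          f"implied average power x{perf / eff:.2f}")
```

Over six years, 45 percent CAGR compounds to about 9.3x and 60 percent to about 16.8x, so the implied average power falls to roughly 0.55 of its starting level. That is only a rough cross-check, since real product generations do not follow a smooth compound curve, but it is in the same ballpark as the roughly 40 percent mid-load reduction described earlier.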

So this brings us back to the original model proposed by Barroso and Hölzle in 2007. How have we done? Comparing the recently published result by Fujitsu to the model from the Google team shows how far power management has come.

[Chart: a recently published Fujitsu Xeon E5-2600 SPECpower result compared with the Barroso and Hölzle energy-proportional model. Graphic courtesy of Intel.]

Although the Fujitsu/Xeon system does not quite reach the model’s 10 percent idle power, by 30 percent relative workload its efficiency (which is what really matters) is within single-digit percentages of the model. Notably, at utilizations above 40 percent the Fujitsu/Xeon system exceeds the goal. This “better than linear” scaling is the result of the interplay of multiple technologies in the processor.
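A comparison like this can be reproduced from the load-level power values in any published SPECpower result. Below is a minimal Python sketch, assuming the model is a straight line from 10 percent idle power to peak (our simplification) and that work scales with utilization; `gap_vs_model` is a hypothetical helper. A positive gap at a load point means the measured system beats the model there, which is what “better than linear” scaling looks like.

```python
from typing import Iterable


def linear_model_efficiency(u: float, idle_fraction: float = 0.10) -> float:
    """Relative efficiency of a server whose power rises linearly from
    idle_fraction * peak at idle to peak at full load (illustrative assumption)."""
    return u / (idle_fraction + (1.0 - idle_fraction) * u)


def gap_vs_model(points: Iterable[tuple[float, float]], peak_power_w: float,
                 idle_fraction: float = 0.10) -> list[tuple[float, float]]:
    """Percent by which measured relative efficiency beats (+) or trails (-) the model.

    points: (utilization, average power in watts) pairs, utilization in (0, 1].
    Assumes work is proportional to utilization, so measured relative efficiency
    is (u / P(u)) / (1 / P_peak) = u * P_peak / P(u).
    """
    gaps = []
    for u, power_w in points:
        measured = u * peak_power_w / power_w
        model = linear_model_efficiency(u, idle_fraction)
        gaps.append((u, 100.0 * (measured / model - 1.0)))
    return gaps
```

Here `peak_power_w` is the measured power at the 100 percent load level, so both the system and the model are normalized to the same full-load efficiency before they are compared.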

In conclusion, using SPECpower as a metric, two-socket volume servers have made significant advances in energy efficiency, with a compound annual growth rate of about 60 percent. Per the SPECpower results shown here, from 2006 to 2012 performance increased by nearly a factor of 10 while energy use, as averaged by SPECpower, dropped by 40 percent. That’s energy efficient performance!

Industry Perspectives is a content channel at Data Center Knowledge highlighting thought leadership in the data center arena. See our guidelines and submission process for information on participating. View previously published Industry Perspectives in our Knowledge Library.
