AI Factories: Separating Hype From Reality

We examine the true potential of AI factories, exploring the costs and challenges of this emerging data center concept.

You’ve likely heard the term ‘AI factories’ thrown around, but what does it really mean? So far, the concept has been hyped more than defined – mostly by Nvidia. The company’s vision is data centers filled with high-end AI accelerators, but is that vision realistic, or just strategic marketing?

Simply put, an AI factory is a specialized data center designed for AI processing rather than traditional workloads like hosting databases, file storage, business applications, or web services. An AI factory is built around GPUs, which outperform CPUs on the massively parallel computation that AI workloads depend on.

AI factories are facilities designed to process massive amounts of data for generative AI. They train AI models; generate outputs such as text, images, video, or audio; update AI systems; and control other systems such as robots or supercomputers.

Because GPUs run so hot and consume so much power, AI factories require more energy and cooling than traditional data centers. They are therefore likely to be sited where energy is cheap and water is readily available for liquid cooling.

One example is Elon Musk’s xAI data center, which houses 100,000 Nvidia H100 GPUs for advanced AI processing. At an estimated $40,000 per GPU, that represents an investment of roughly $4 billion in GPUs alone from a single customer, which may help explain why Nvidia CEO Jensen Huang continues to champion the concept of AI factories.
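The headline figure is simple multiplication. The sketch below reproduces it from the article's own estimates (GPU count and per-unit price); the constant and function names are illustrative, and the total covers accelerators only, not networking, power, cooling, or the building itself.

```python
# Back-of-the-envelope GPU spend for the xAI cluster described above.
# Both inputs are estimates quoted in the article, not confirmed prices.
GPU_COUNT = 100_000          # Nvidia H100 GPUs reported at the xAI facility
PRICE_PER_GPU_USD = 40_000   # estimated unit price per H100, in US dollars


def gpu_capex(count: int, unit_price: int) -> int:
    """Return total accelerator spend in US dollars (GPUs only)."""
    return count * unit_price


total = gpu_capex(GPU_COUNT, PRICE_PER_GPU_USD)
print(f"${total / 1e9:.1f} billion")  # prints "$4.0 billion"
```

Because the product is exactly $4 billion, any higher total would have to come from costs outside the GPUs themselves.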

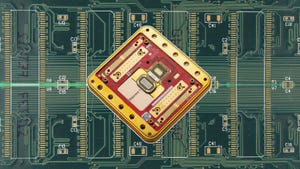

Inside an AI Factory: High-performance GPUs drive massive AI workloads, but can these facilities scale sustainably? Image: Alamy

AI Factories: Hype vs. Reality

While the concept is compelling, will we see the wave of AI factories that Huang is promising? Probably not at scale. AI hardware is not only costly to acquire and operate, but it also doesn’t run continuously the way a database server does. Once a model is trained, it may not need updating for months, leaving expensive infrastructure sitting idle.

For that reason, Alan Howard, senior analyst at Omdia specializing in infrastructure and data centers, believes most AI hardware deployments will occur in multipurpose data centers. These facilities will likely feature dedicated ‘AI zones’ alongside areas for standard compute and other workloads.

“It’s our feeling, really, that there will be some dedicated AI data centers, but unlikely it’s going to be as pervasive as we’re being led to believe,” Howard told Data Center Knowledge.

“If I have a 50,000 sq. ft. data hall in a data center and I have ample power, then I can create an area or a suite that can meet those really high power demands for a deployment of AI equipment. You’re not going to see very many data centers just full of AI gear… It’s going to be a part of a bigger data center.”

Too Expensive for Most

Ram Palaniappan, chief technology officer with consultancy TEKsystems, agrees that dedicated AI data centers will remain limited, largely because of the high costs involved.

“Enterprises are doing [a] lot more inference than actually training with their data,” he said. “If you can partition within your data center where some portions are dedicated to AI, you can use that GPU capacity for training the model, and then the remaining CPUs will be leveraged for inferencing the model. That’s how we are seeing how the data center world is tuning towards based on the enterprise consumption and the usage of the AI.”

Anthony Goonetilleke, group and head of strategy and technology for telecom digital transformation provider Amdocs, believes that many of these next-generation AI factories will become available for customers to lease through an AI-as-a-Service model, which major cloud service providers like Amazon Web Services offer.

“People are trying to build out AI factories to essentially create a model where they can sell AI capacity as a service, as some of our customers are looking to do,” Goonetilleke told Data Center Knowledge. “At the end of the day, think of it as Gen AI Infrastructure-as-a-Service. I think AI as a service has got a lot of potential upsides because the investment in AI hardware is enormously expensive, and in many cases, you may not need it again, or you may not need to use it as much.”

AI technology advances rapidly, and keeping up with the competition is prohibitively expensive, Palaniappan added. “When you start looking at how much each of these GPUs cost, and it gets outdated pretty quickly, that becomes [a] bottleneck,” he said. “If you are trying to leverage a data center, you’re always looking for the latest chip in the facility, so many of these data centers are losing money because of these efforts.”

Don’t Forget the Network

In addition to the cost of the GPUs, significant investment is required for networking hardware, as all the GPUs need to communicate with each other efficiently. Tom Traugott, senior vice president of strategy at EdgeCore Digital Infrastructure, explains that in a typical eight-GPU Nvidia DGX system, the GPUs communicate with one another via NVLink. However, to share data with GPUs in other systems, they rely on Ethernet or InfiniBand, which requires substantial networking hardware.

“When you’re doing a training run, it’s like individuals on a team,” Traugott said. “They’re all working on the same project, and they collectively come back together periodically and sort of trade notes.”

In smaller clusters, networking costs are similar to those of traditional data centers. However, in clusters with 5,000, 10,000, or 20,000 GPUs, networking can account for around 15% of the overall CapEx, he said. Multiple network connections are needed because the data sets are so enormous that a single NIC is easily saturated. To avoid bottlenecking, multiple NICs are needed – and the cost soon adds up.

“Apparently, that may be as high as 30% to 40% of the overall spend, which is disproportionate to prior generations,” Traugott told Data Center Knowledge. “So, from a data center standpoint, we may not see it right the power space cooling, if it’s all GPUs, there may be different densities.”

The Future of AI Factories

This is still very new technology. There’s only one known AI factory currently in development: the xAI facility. Nvidia has only recently released blueprints for building AI factories, which it calls enterprise reference designs, to help guide construction. Much is still subject to change, and the concept needs clearer definition as it develops.

“So, is it going to be a small trend where there’s a handful of companies that do a handful of dedicated AI factories, or is it going to be bigger? My personal speculation is it will probably be about a year before we get a better bead on whether new data center construction has essentially a new face to it in the world of AI factories,” said Howard.
