Nvidia CEO Jensen Huang and Mark Zuckerberg Tout Their Vision for AI

At SIGGRAPH, Nvidia announces AI software advances to speed the development of generative AI applications while the two Silicon Valley leaders discuss their AI strategies, open source, personalized assistants and more.

Nvidia CEO Jensen Huang and Meta CEO Mark Zuckerberg both see a future where every business uses AI. In fact, Huang believes jobs will change significantly because of generative AI – and it's already happening within his own company through AI assistants.

“It is very likely all of our jobs are going to be changed,” Huang said during a fireside chat that kicked off the SIGGRAPH 2024 conference in Denver on Monday (July 29). “My job is going to change. In the future, I'm going to be prompting a whole bunch of AIs. Everybody will have an AI that is an assistant. So will every single company, every single job within the company.”

For example, he said, Nvidia has embraced generative AI internally. Software programmers now have AI assistants that help them program. Software engineers use AI assistants to help them debug software, while the company also uses AI to assist with chip design, including its Hopper and next-generation Blackwell GPUs.

“None of the work that we do would be possible anymore without generative AI,” Huang said during a one-on-one chat with a journalist on Monday. “That’s increasingly our case – with our IT department helping our employees be more productive. It's increasingly the case with our supply chain team optimizing supply to be as efficient as possible, or our data center team using AI to manage the data centers (and) save as much energy as possible.”

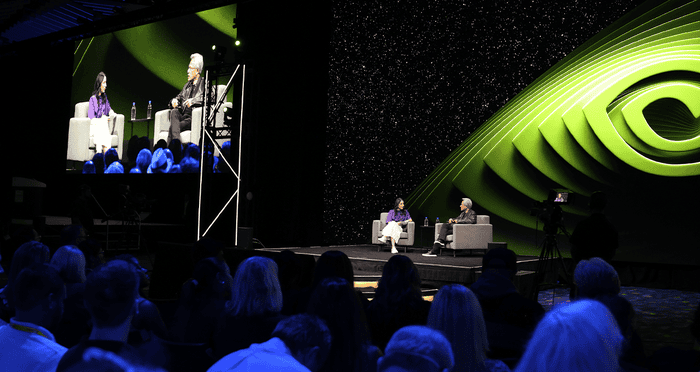

Nvidia CEO Jensen Huang and WIRED’s Lauren Goode at SIGGRAPH 2024. Credit: Nvidia

Later, during a separate one-on-one discussion between Huang and Zuckerberg, the head of Meta made his own prediction for business AI adoption: “In the future, just like every business has an email address and a website and a social media account – or several – I think in the future every business is going to have an (AI) agent that interfaces with their customers.”

J.P. Gownder, vice president and principal analyst at Forrester, agrees that generative AI is poised to significantly disrupt the workforce but cautions that companies must ensure employees have enough understanding and ethical awareness to use GenAI effectively in their jobs.

“Employees need training, resources, and support. Determining just how much assistance your employees will need is a key enablement priority and a prerequisite to success using GenAI tools,” Gownder said.

The annual SIGGRAPH conference is historically a computer graphics conference, but Huang on Monday said SIGGRAPH is now about computer graphics and generative AI. To help companies accelerate generative AI adoption, Nvidia on Monday introduced new advances to its Nvidia NIM microservices technology, part of the Nvidia AI Enterprise software platform.

Nvidia NIM Microservices Help Speed Gen AI Deployment

First announced at the GTC conference in March, NIM microservices are a set of pre-built containers, standard APIs, domain-specific code and optimized inference engines that make it much faster and easier for enterprises to develop AI-powered enterprise applications and run AI models in the cloud, data centers or even GPU-accelerated workstations.

Nvidia enhanced its partnership with AI startup Hugging Face by introducing a new inference-as-a-service offering that allows developers on the Hugging Face platform to deploy large language models (LLMs) using Nvidia NIM microservices running on Nvidia DGX Cloud, Nvidia’s AI supercomputer cloud service.

The 70-billion-parameter version of Meta’s Llama 3 LLM delivers up to five times higher throughput when accessed as a NIM on the new inferencing service than in a NIM-less, off-the-shelf deployment on Nvidia H100 Tensor Core GPU-powered systems, Nvidia said.

The new inferencing service complements Nvidia’s Hugging Face AI training service on DGX Cloud, which was announced at SIGGRAPH last year.

Nvidia on Monday also announced new OpenUSD-based NIM microservices on the Nvidia Omniverse platform to power the development of robotics and industrial digital twins. OpenUSD is a 3D framework that enables interoperability between software tools and data formats for building virtual worlds.

Overall, Nvidia announced more than 100 new NIM microservices, including digital biology NIMs for drug discovery and other scientific research, Nvidia executives said.

With Monday’s announcements, Nvidia is further productizing NIM microservices as a consumable solution across a wide array of use cases, said Bradley Shimmin, Omdia’s chief analyst for AI platforms, analytics and data management.

Earlier this year, Nvidia’s Huang described data centers as AI factories – and NIM microservices enable the assembly line approach to building and deploying AI applications and models, Shimmin said.

“Henry Ford was successful in creating an assembly line to assemble vehicles rapidly, and Jensen is talking about the same thing,” Shimmin said. “NIM microservices is basically having an assembly line-in-a-box. You don’t need a data scientist to start with a blank Jupyter Notebook, figure out what libraries you need and figure out the interdependencies between them. NIM greatly simplifies the process.”

Huang and Zuckerberg’s One-on-One Fireside Chat

Huang and Zuckerberg held a friendly one-hour fireside chat at SIGGRAPH. Meta is a huge Nvidia customer, installing about 600,000 Nvidia GPUs in its data centers, according to Huang.

During the discussion, Huang asked Zuckerberg about Meta’s AI strategy – and Zuckerberg discussed Meta’s Creator AI offering, which allows people to create AI versions of themselves, so they can engage with their fans.

“A lot of our vision is that we want to empower all the people who use our products to basically create agents for themselves, so whether that’s the many millions of creators that are on the platform or hundreds of millions of small businesses,” he said.

Meta has built AI Studio, a set of tools that allows creators to build AI versions of themselves that their community can interact with. The business version is in early alpha, but the company would like to allow customers to engage with businesses and get all their questions answered.

Zuckerberg said one of the top use cases for Meta AI is people role-playing difficult social situations. It could be a professional situation, where they want to ask their manager for a promotion or raise. Or they’re having a fight with a friend or a difficult situation with a girlfriend.

“Basically having a completely judgment-free zone where you can role play and see how the conversation would go and get feedback on it,” Zuckerberg said.

Part of the goal with AI Studio is to allow people to interact with other types of AI, not just Meta AI or ChatGPT.

“It’s all part of this bigger view we have. That there shouldn’t just be kind of one big AI that people interact with. We just think that the world will be better and more interesting if there’s a diversity of these different things,” he said.

Big picture, Zuckerberg said he expects organizations will be using multiple AI models, including large commercial AI models and custom-built ones.

“One of the big questions is, in the future, to what extent are people just using the kind of the bigger, more sophisticated models versus just training their own models for the uses they have,” he said. “I would bet that they’re going to be just a vast proliferation of different models.”

During the discussion, Huang asked Zuckerberg why Meta open sourced Llama 3.1. Zuckerberg said it’s a good business strategy and enables Meta to build a robust ecosystem for the LLM.

Nvidia’s AI Strategy, Gen AI and Energy Usage of Data Centers

During the chat with Zuckerberg, Huang reiterated his desire to have AI assistants for every engineer and software developer in his company. The productivity and efficiency gains make the investment worth it, he said.

For example, pretend that AI for chip design costs $10 an hour, Huang said. “If you’re using it constantly, and you’re sharing that AI across a whole bunch of engineers, it doesn’t cost very much. We pay the engineers a lot of money, and so to us a few dollars an hour (that) amplifies the capabilities of somebody – that’s really valuable.”
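Huang's arithmetic can be sketched in a few lines. The $10-an-hour figure is his hypothetical; the group sizes below are illustrative assumptions, not numbers from Nvidia.

```python
def cost_per_engineer_hour(assistant_cost_per_hour, engineers_sharing):
    """Split the hourly cost of one shared AI assistant evenly across its users."""
    if engineers_sharing < 1:
        raise ValueError("at least one engineer must share the assistant")
    return assistant_cost_per_hour / engineers_sharing


if __name__ == "__main__":
    ASSISTANT_COST = 10.0  # Huang's hypothetical: $10 an hour for chip-design AI
    for group_size in (1, 5, 20):  # assumed group sizes, for illustration only
        share = cost_per_engineer_hour(ASSISTANT_COST, group_size)
        print(f"{group_size} engineers sharing: ${share:.2f} per engineer per hour")
```

Under these assumptions, the per-engineer cost falls as more engineers share the same assistant, which is the point Huang makes about amplifying well-paid staff for a few dollars an hour.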

During the fireside chat with the journalist Monday, Huang said generative AI is the new way of developing software.

The journalist asked Huang why he was so optimistic that generative AI can be more controllable and accurate and provide high-quality output with no hallucinations. He cited three reasons: reinforcement learning with human feedback, which uses human feedback to improve models; guardrails; and retrieval augmented generation (RAG), which bases results on more authoritative content, such as an organization’s own data.
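To make the RAG idea concrete, here is a minimal sketch of the retrieval step, assuming a toy word-overlap ranking in place of a real search index. The documents, function names and prompt wording are all hypothetical, and no actual model is called; this is not how Nvidia or any specific product implements RAG.

```python
import re

# Hypothetical in-house documents standing in for "an organization's own data".
DOCUMENTS = [
    "Expense reports must be submitted within 30 days of travel.",
    "The data center team reviews power usage every quarter.",
    "New laptops are issued by the IT department on the first day.",
]


def tokenize(text):
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question, documents, k=1):
    """Return the k documents that share the most words with the question."""
    question_words = tokenize(question)
    ranked = sorted(
        documents,
        key=lambda doc: len(question_words & tokenize(doc)),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(question, documents, k=1):
    """Place the retrieved passages ahead of the question for the model to use."""
    context = "\n".join(f"- {doc}" for doc in retrieve(question, documents, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


if __name__ == "__main__":
    print(build_prompt("When must expense reports be submitted?", DOCUMENTS))
```

The prompt that results would then be sent to a language model, so its answer draws on the retrieved passage rather than only on what the model absorbed in training.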

Huang was asked about the fact that generative AI uses a massive amount of energy and whether there is enough energy in the world to meet the demands of what Nvidia wants to build and accomplish with AI.

Huang answered yes. His reasons include the fact that the forthcoming Blackwell GPUs accelerate applications while using the same amount of energy. Organizations should move their apps to accelerated processors, so they optimize energy usage, he said.
