Nvidia Shrinks the Deep Learning Data Center

Claims new GPU-powered supercomputer in a box can replace hundreds of CPU nodes.

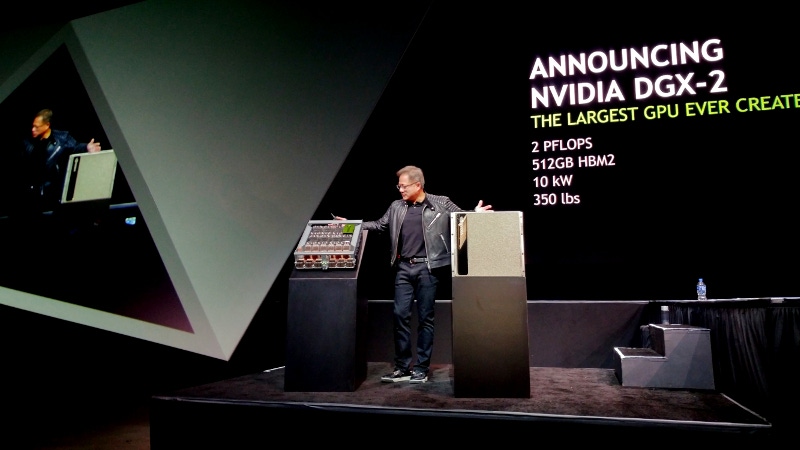

At its big annual Silicon Valley conference Tuesday, Nvidia unveiled what it said was the world’s first single server with enough computing muscle to deliver two petaflops, a level of performance usually delivered by hundreds of servers networked into clusters.

Aimed primarily at deep learning applications, the DGX-2 system is 10 times more powerful than the Volta GPU-powered version of its predecessor, the DGX-1, which was released only in September, the company said. The chipmaker’s engineers were able to get so much more performance in the box by packing it with twice the number of GPUs, upgrading each GPU with twice as much memory as before, using a brand new GPU interconnection technology, and improving Nvidia’s deep learning software.

The Santa Clara, California-based company is the leading maker of GPUs, which accelerate computer graphics but also run inside machines used to train neural networks, a type of computing system that learns on its own by analyzing vast amounts of data. Neural networks power deep learning, the most widely used type of machine learning that’s driving today’s AI boom.

DGX-2, which Nvidia founder and CEO Jensen Huang announced at the GTC summit in San Jose, is the first system to use Nvidia’s new GPU interconnection technology, called NVSwitch, also announced at the summit. NVSwitch improves upon the existing GPU fabric, NVLink, by linking each of the 16 GPUs inside the box to every other one. It provides five times more bandwidth than the top PCIe switch on the market, the company said.

“This new switch can process and communicate NVLink across all the GPUs,” Ian Buck, VP and general manager of Nvidia’s Tesla Data Center business, said in a phone briefing with reporters.

"Every single GPU can communicate to every other single GPU at 20 times the bandwidth of PCI Express," Huang said in his GTC keynote. The switch transfers 300GB per second.

Also at the summit, the company announced that its top-shelf data center GPU, the Tesla V100, now ships with double the memory. The new 32GB GPUs are what’s powering Nvidia’s new supercomputer in a box.

“Combining all these new GPUs now with a fully switched NVLink fabric we can achieve the next level of AI systems,” Buck said.

Huang referred to the $399,000 DGX-2 as "the world's largest GPU."

A single DGX-2 box provides performance that’s “equivalent of 300 [Intel] Skylake servers,” Jim McHugh, Nvidia’s VP and general manager for Deep Learning Systems, said on the call. “That’s basically 15 racks of servers, which will save a lot” of space and power in the data center.

The Skylake cluster would come "easily for $3 million," Huang said.
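For readers who want to check the comparison, the figures quoted above can be put side by side in a few lines of Python. This is only a rough sketch: it treats Huang's "easily for $3 million" as a round $3 million, and the price ratio, servers per rack, and per-server cost are derived here from the quoted numbers rather than stated by Nvidia. The names `comparison`, `DGX2_PRICE_USD`, and the other constants are made up for illustration.

```python
# Figures quoted in the article. Everything computed from them below is
# derived for illustration and was not stated by Nvidia.
DGX2_PRICE_USD = 399_000
SKYLAKE_CLUSTER_PRICE_USD = 3_000_000  # assumption: "easily for $3 million" taken as a round figure
SKYLAKE_SERVERS = 300
SKYLAKE_RACKS = 15


def comparison():
    """Return ratios implied by the quoted DGX-2 and Skylake cluster figures."""
    return {
        "price_ratio": SKYLAKE_CLUSTER_PRICE_USD / DGX2_PRICE_USD,
        "servers_per_rack": SKYLAKE_SERVERS // SKYLAKE_RACKS,
        "implied_price_per_server_usd": SKYLAKE_CLUSTER_PRICE_USD / SKYLAKE_SERVERS,
    }


if __name__ == "__main__":
    for name, value in comparison().items():
        print(f"{name}: {value:,.2f}")
```

On those assumptions, the cluster costs roughly seven and a half times as much as one DGX-2, and the "15 racks" figure works out to 20 servers per rack.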

GPU sales brought $8.14 billion in revenue in Nvidia’s fiscal 2018. Of that, $1.93 billion came from GPUs sold into data centers for deep learning and more traditional high-performance computing workloads – a 133 percent increase from the previous fiscal year, the company said. The other GPUs sold power things like gaming PCs and high-end desktops running professional visualization software, as well as cryptocurrency mining.

Cloud giants like Amazon Web Services, Microsoft Azure, and Google Cloud Platform are responsible for much of Nvidia’s data center GPU revenue; all top cloud service providers have deployed its GPUs to power their own AI applications, to give developers who use their cloud infrastructure services ways to build AI features into their own applications, and to provide raw GPU computing power as a service.
