AMD Takes On Nvidia with New GPU for AI

AMD introduces two new data center processors for AI and HPC workloads with support from Microsoft Azure, Meta, Oracle Cloud, Dell, HPE and others.

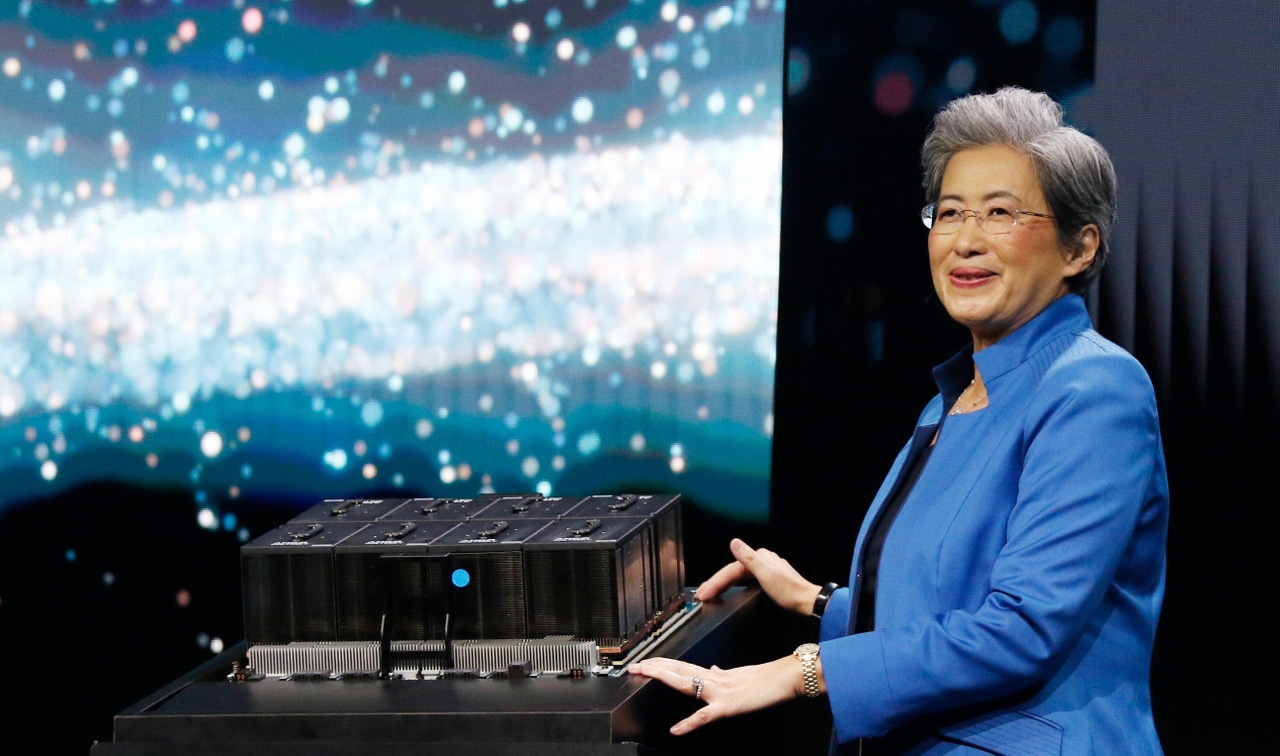

AMD today announced the availability of AMD Instinct MI300X, a powerful new data center GPU that the company hopes will grab market share from Nvidia in the lucrative AI chip market.

The MI300X GPU, designed for cloud providers and enterprises, is built for generative AI applications. It outperforms Nvidia’s H100 GPU in two key metrics, memory capacity and memory bandwidth, which enables AMD to deliver comparable AI training performance and significantly higher AI inferencing performance, AMD executives said.

“It’s the highest performance accelerator in the world for generative AI,” said AMD CEO Lisa Su at the company’s “Advancing AI” event in San Jose, Calif. today.

AMD today also announced the availability of AMD Instinct MI300A, an integrated CPU and GPU chip that the company calls an accelerated processing unit (APU). It is designed for high-performance computing (HPC) and AI workloads, and is optimized for performance and energy-efficiency, the company said.

Analysts say AMD has introduced competitive AI chips and has good potential for making inroads into the market, particularly with Nvidia struggling to meet the enormous demand for its popular H100 GPU because of supply chain constraints. Nvidia currently dominates with more than 70% of the AI chip market, according to analyst firm Omdia.

“AMD is taking advantage of Nvidia’s inability to fully meet the needs of the market,” says Matt Kimball, vice president and principal analyst at Moor Insights & Strategy. “Nvidia can’t make them fast enough. They literally have backlogs that go quarters long of customers that want the H100. AMD comes along and says, ‘guess what? We have a competitive product.’ And AMD is doing it through better performance and what I believe will be better price/performance.”

AMD did not announce pricing for the MI300X. But the H100 often costs more than $30,000.

Kimball expects AMD will see success with the new MI300 Series processors – and so does AMD, which expects strong sales. During AMD’s third-quarter earnings call on Oct. 31, Su said data center GPU revenue will exceed $2 billion in 2024 and that the MI300 line will be the fastest product in AMD history to ramp to $1 billion in sales.

Kimball said he doesn’t doubt that AMD will reach that sales goal “because there is such a hunger for GPUs for AI.”

“There are two factors going on: people don’t want to wait for Nvidia, and the industry doesn’t want to be locked into Nvidia,” he said. “So both these factors are going to accelerate the adoption of the MI300 among hyperscalers and within the enterprise.”

AI: Transformational, But Will Require Infrastructure Investments

During her speech today, Su said AI is the single most transformational technology of the past 50 years. “Maybe the only thing that has been close has been the introduction of the internet, but what’s different about AI is that the adoption rate is much, much faster,” she said.

Last year, AMD estimated that the data center AI accelerator market would grow about 50% annually over the next few years, from $30 billion in 2023 to $150 billion in 2027. Today, Su updated that prediction, saying the data center accelerator market will grow more than 70% annually over the next four years and reach more than $400 billion in 2027.
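As a rough check on the earlier forecast, the growth rate implied by its two endpoints can be worked out with the standard compound annual growth rate formula. The short Python sketch below assumes four compounding years between 2023 and 2027; the function name `cagr` is purely illustrative, and the dollar figures are the ones quoted above.

```python
def cagr(start, end, years):
    """Compound annual growth rate, returned as a fraction (0.5 means 50%)."""
    return (end / start) ** (1 / years) - 1


# Earlier AMD estimate quoted above: $30 billion (2023) to $150 billion (2027).
# Assumption: four compounding years between the two endpoints.
earlier_rate = cagr(30, 150, 4)

print(f"Implied annual growth: {earlier_rate:.1%}")
```

Under that assumption, a fivefold increase over four years works out to just under 50% a year, compounded.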

“The key to all of this is generative AI,” Su said. “It requires a significant investment in new infrastructure. And that’s to enable training and all of the inference that’s needed. And that market is just huge.”

More Details on the MI300X and MI300A

The MI300 Series of processors, which AMD calls “accelerators,” was first announced nearly six months ago when Su detailed the chipmaker’s strategy for AI computing in the data center.

AMD has built momentum since then. Today’s announcement that MI300X and MI300A are shipping and in production comes with widespread industry support from large cloud companies such as Meta, Microsoft Azure and Oracle Cloud and hardware makers such as Dell, Hewlett Packard Enterprise, Lenovo and Supermicro.

The AMD Instinct MI300X GPU, powered by the new AMD CDNA 3 architecture, offers 192 GB of HBM3 memory capacity, which is 2.4 times the 80 GB of HBM3 memory on Nvidia’s H100. The MI300X reaches 5.3 TB/s peak memory bandwidth, which is 1.6 times the H100’s 3.3 TB/s.

In a media briefing before today’s AMD event, Brad McCredie, AMD’s corporate vice president of data center GPU, said the MI300X GPU will still have more memory bandwidth and memory capacity than Nvidia’s next-generation H200 GPU, which is expected next year.

When Nvidia announced plans for the H200 in November, it said the H200 will feature 141 GB of HBM3e memory and 4.8 TB/s of memory bandwidth.
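The memory comparisons above are simple ratios of the published specifications. The Python sketch below recomputes them from the figures reported in this article; the `specs` table and the `advantage` helper are illustrative names, and the numbers are peak or headline values, not measured performance.

```python
# Figures as reported in this article: memory in GB, peak bandwidth in TB/s.
specs = {
    "MI300X": {"memory_gb": 192, "bandwidth_tbs": 5.3},
    "H100": {"memory_gb": 80, "bandwidth_tbs": 3.3},
    "H200": {"memory_gb": 141, "bandwidth_tbs": 4.8},
}


def advantage(chip, rival):
    """Return (memory ratio, bandwidth ratio) of chip relative to rival."""
    a, b = specs[chip], specs[rival]
    return (
        a["memory_gb"] / b["memory_gb"],
        a["bandwidth_tbs"] / b["bandwidth_tbs"],
    )


for rival in ("H100", "H200"):
    mem, bw = advantage("MI300X", rival)
    print(f"MI300X vs {rival}: {mem:.2f}x memory, {bw:.2f}x bandwidth")
```

On these numbers, the MI300X’s lead narrows against the H200 to roughly 1.36 times the memory capacity and 1.10 times the bandwidth, which is consistent with McCredie’s claim that it stays ahead on both metrics.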

Moving forward, McCredie expects fierce competition between the two companies on the GPU front.

“We do not expect (Nvidia) to stand still. We know how to go fast, too,” he said. “Nvidia has accelerated their roadmap once, recently. We fully expect them to keep pushing hard. We’re going to keep pushing hard, so we fully expect to stay ahead. They may leapfrog. We’ll leapfrog back.”

The AMD Instinct MI300A APU, built with AMD CDNA 3 GPU cores and the latest AMD Zen 4 x86-based CPU cores, offers 128 GB of HBM3 memory. Compared with the previous-generation MI250X processor, the MI300A offers 1.9 times more performance per watt on HPC and AI workloads, the company said.

“At the node level, the MI300A has twice the HPC performance-per-watt of the nearest competitor,” said Forrest Norrod, executive vice president and general manager of AMD’s data center solutions business group, during today’s AMD event. “Customers can thus fit more nodes in their overall facility power budget and better support their sustainability goals.”

The Energy Department’s Lawrence Livermore National Laboratory is using MI300A APUs to build its El Capitan supercomputer, which will exceed 2 exaflops and will become the nation’s third exascale supercomputer when it debuts in 2024.

AMD Beefs Up Software Ecosystem

To increase adoption of the new MI300 Series chips, AMD today announced enhancements to its software ecosystem. The company announced ROCm 6, the latest version of its open-source software stack. ROCm 6 – which is a layer of software for GPUs to support computational workloads – has been optimized for generative AI, particularly large language models (LLMs), the company said.

ROCm 6 software, paired with MI300 Series accelerators, will deliver an eightfold performance improvement over ROCm 5 running on the previous-generation MI250 accelerator, the company said.

Cloud and Hardware Maker Support

During the AMD event, executives from hyperscalers and hardware vendors announced plans to include the MI300 Series accelerators in their offerings.

For example, Microsoft said its new Azure virtual machine offering powered by MI300X is available in preview today. Oracle Cloud Infrastructure plans to offer MI300X-based bare metal instances for AI. And Meta said it is adding MI300X to its data centers to power AI inferencing workloads.

On the hardware front, Dell unveiled the Dell PowerEdge XE9680 server, featuring eight MI300 Series accelerators. HPE, Lenovo and Supermicro also announced they will support MI300 Series accelerators in their product portfolios.
