Application SLAs in the Cloud: A Big Swindle?

Availability SLAs promising uptime of 99% or more might seem attractive, but they can be misleading. Learn how to navigate the pitfalls of cloud service agreements.

When you explain to your CIO how cloud service-level agreements (SLAs) work, make sure your story does not unfold like The IT Crowd. In this fictional British television series, CEO Denholm Reynholm’s secretary tells him the police would be arriving due to a fraud investigation. Denholm then goes to the window, opens it, and jumps from the top floor.

So be warned: In what follows, you might learn that the SLAs you promise your stakeholders are not adequately backed by your cloud providers’ SLAs. But first, let us start with the basics.

On SLAs and Architects

Business processes come to a halt in most organizations when critical IT systems and applications are down. Thus, ensuring availability is a primary task for CIOs and is made measurable with service-level agreements (SLAs). Availability SLAs usually contain three elements:

a percentage such as 99.9%

the relevant measurement period, typically one month, and

some fine print, e.g., that the SLAs do not apply in case of natural disasters

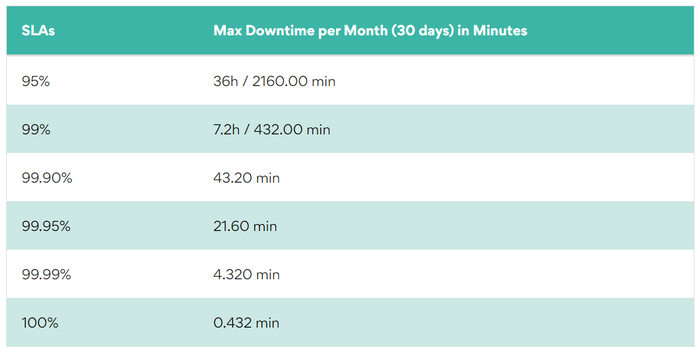

As Table 1 below illustrates, a 99.9% SLA, which is typical for cloud services, translates to around 43 minutes of maximum downtime in a month.

Table 1: SLAs and max downtimes

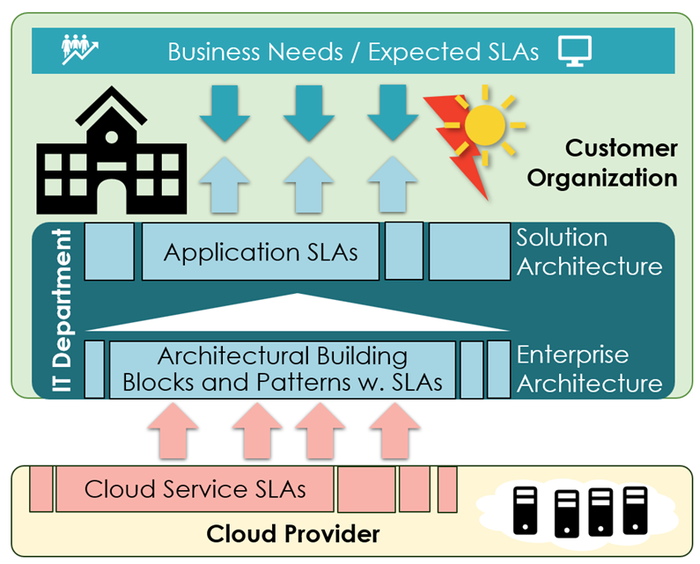
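The figures in Table 1 follow from multiplying the minutes in a month by the permitted unavailability. The short Python sketch below reproduces that arithmetic. It assumes a 30-day month (43,200 minutes), whereas individual providers may define the measurement month differently, and the function name is illustrative only.

```python
# Assumption: a 30-day month; providers may define the measurement period differently.
MINUTES_PER_MONTH = 30 * 24 * 60  # 43,200 minutes


def max_monthly_downtime(sla_percent: float, minutes_per_month: int = MINUTES_PER_MONTH) -> float:
    """Return the maximum downtime (in minutes) an SLA permits per month."""
    return minutes_per_month * (1 - sla_percent / 100)


for sla in (99.0, 99.5, 99.9, 99.95, 99.99):
    print(f"{sla:6.2f}% -> {max_monthly_downtime(sla):6.1f} min/month")
```

Under this assumption, 99.9% yields 43.2 minutes, in line with the roughly 43 minutes mentioned above.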

It is up to the architects to design solutions that meet the SLAs required by the business while building on cloud services with defined SLAs. Larger organizations split this task between different stakeholders:

Enterprise architects standardize architectural building blocks, e.g., typical (cloud) service configurations, backup patterns, and availability features for solution architects to use.

Solution architects integrate business logic and cloud building blocks into applications and choose the patterns by which these applications interact with other applications.

Ultimately, the complete sequence of SLAs must fit together: cloud SLAs, architectural building block SLAs, and application SLAs, which in turn must match the SLA expectations of the business (Figure 1). Basic math helps to verify that.

Figure 1: The sequence from cloud to application and business SLAs

Simple Design Patterns and Some SLA Math

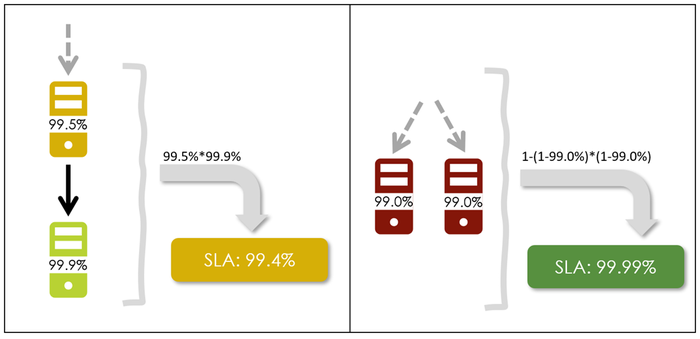

Calculating a solution’s overall SLA from its components’ availability SLAs is straightforward. If components form a chain (think of an application layer VM and a database server) or otherwise depend on each other, multiply the individual SLAs.

For example, a 99.5% application layer VM interacting with a 99.9% database server has a combined SLA of 99.5% × 99.9% ≈ 99.4% (Figure 2, left).

Figure 2: Calculating SLAs for chained and redundant components
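The chaining rule translates directly into code. This minimal sketch assumes, like the rule itself, that component failures are independent; `serial_sla` is a hypothetical helper name, not a library function.

```python
from math import prod


def serial_sla(*slas: float) -> float:
    """Combined SLA (as a fraction) of components that must all be up.

    Assumes independent failures, so the individual availabilities multiply.
    """
    return prod(slas)


combined = serial_sla(0.995, 0.999)  # application layer VM + database server
print(f"{combined:.1%}")  # 99.4%
```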

When the SLA of a component (or subsystem) is insufficient, having two or more of them perform the same task in parallel boosts the SLA tremendously, because the system fails only if all of them are down at the same time. Two VMs with a low 99.0% SLA (>7h max downtime in a month) in parallel result in a combined SLA of 1 − 0.01 × 0.01 = 0.9999, i.e., 99.99% (Figure 2, right).

This equates to about four minutes of maximum downtime per month instead of more than seven hours, which is not bad. Just note that this doubles the VM costs, since each VM needs the capacity to run the complete workload in case the other fails.
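The redundancy rule can be sketched the same way: the pair is down only when both VMs are down at the same time, so their unavailabilities multiply. As before, this assumes independent failures and a 30-day month; `parallel_sla` is an illustrative name.

```python
from math import prod

MINUTES_PER_MONTH = 30 * 24 * 60  # assumed 30-day month


def parallel_sla(*slas: float) -> float:
    """Combined SLA of redundant components where one working instance suffices.

    Assumes independent failures: the system is down only if all are down.
    """
    return 1 - prod(1 - s for s in slas)


combined = parallel_sla(0.99, 0.99)
print(f"{combined:.2%}")  # 99.99%
print(f"{MINUTES_PER_MONTH * (1 - combined):.1f} min max downtime")  # 4.3 min
```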

Calculating More Complex SLAs

SLAs for complex solution architectures are easy to calculate with the two basic SLA rules introduced above.

Figure 3 (below) shows a typical solution design for a web service with an application logic layer and a database layer. Each layer consists of two redundant VMs with a 99.0% SLA, which results in a 99.99% availability SLA per layer. In addition, there is a Firewall/Load Balancer/Web Application Firewall layer with an assumed 99.5% SLA. Chaining the three layers results in an overall SLA of 99.5% × 99.99% × 99.99% ≈ 99.48%.

Figure 3: SLA calculation for more complex solutions
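Combining both rules reproduces the Figure 3 calculation. The sketch below assumes independent failures and takes the 99.5% firewall/load balancer/WAF SLA from the example; the helper names are illustrative.

```python
from math import prod


def serial_sla(*slas: float) -> float:
    # Chained layers: every layer must be up (independent failures assumed).
    return prod(slas)


def parallel_sla(*slas: float) -> float:
    # Redundant instances: the layer is down only if all instances are down.
    return 1 - prod(1 - s for s in slas)


app_layer = parallel_sla(0.99, 0.99)  # two redundant 99.0% VMs
db_layer = parallel_sla(0.99, 0.99)   # two redundant 99.0% VMs
edge_layer = 0.995                    # firewall/load balancer/WAF (assumed SLA)

overall = serial_sla(edge_layer, app_layer, db_layer)
print(f"{overall:.2%}")  # 99.48%
```

Because the layers multiply, `overall` can never exceed the weakest layer's SLA, which is why the 99.5% edge layer dominates the result.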

The two key lessons from this example are: First, redundancy boosts any SLA dramatically. Second, one layer with a bad SLA ruins everything, no matter how brilliant the rest is. So, understand the SLAs of central (self-hosted or cloud-provided) components such as firewalls in detail!

SLA Reality Check in the Cloud

While the math is correct, reality often does not match the implicit assumptions underlying these calculations.

Challenge #1: Mismatch of Measurement Periods

Assume a cloud service has a 99.9% 24/7 monthly SLA. If the business expects 99.9% availability during business hours only, the 99.9% cloud SLA is insufficient. This is counterintuitive but true.

A 99.9% SLA for 35 business hours per week (assuming a month has 4.3 weeks) means the SLA applies to 35 hours/week × 60 min/hour × 4.3 weeks/month = 9,030 minutes per month.

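A 99.9% SLA for 9,030 minutes allows a maximum monthly downtime of 9,030 × 0.001 ≈ 9.0 minutes, whereas the cloud provider’s 99.9% 24/7 SLA permits about 43 minutes, all of which might fall within business hours. Such a maximum monthly downtime of 9 minutes equals a 99.98% SLA on a 24/7 basis. “Business hours” SLAs lower staff costs but are pure horror for SLA calculations!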
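The business-hours arithmetic can be checked with a few lines of Python. The sketch uses the approximation of 4.3 weeks per month from above and assumes the worst case that every outage falls within business hours; `allowed_downtime_minutes` is a hypothetical helper.

```python
WEEKS_PER_MONTH = 4.3  # approximation used in the text


def allowed_downtime_minutes(sla: float, hours_per_week: float) -> float:
    """Maximum monthly downtime an SLA permits within its service window."""
    window_minutes = hours_per_week * 60 * WEEKS_PER_MONTH
    return window_minutes * (1 - sla)


business_hours = allowed_downtime_minutes(0.999, 35)        # about 9.0 minutes
around_the_clock = allowed_downtime_minutes(0.999, 24 * 7)  # about 43.3 minutes

# 24/7 SLA a provider would need so that its whole downtime budget fits the
# business-hours budget (worst case: every outage hits business hours).
required_24x7 = 1 - business_hours / (24 * 7 * 60 * WEEKS_PER_MONTH)
print(f"{business_hours:.1f} min vs {around_the_clock:.1f} min -> {required_24x7:.2%}")
```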

Challenge #2: Application Logic Impact on SLAs

A good application design prevents negative user impact during short outages of backend systems. ATMs, for example, allow withdrawing money even if the network is down (with limitations, obviously).

With such application architectures, only the availability of the application layer matters, while we can ignore, for example, a (short) loss of internet connectivity or a brief database outage. This is good news for the CIO but a nightmare for architects, who have to incorporate such nuances into their SLA calculations.
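One simple way to account for such nuances is to subtract the time an application can bridge on its own from each backend outage. The sketch below is an assumption-laden model, not an established formula: the `tolerance_min` parameter (how long the application keeps working without its backend) and the outage durations are hypothetical.

```python
from collections.abc import Iterable


def user_visible_downtime(backend_outages_min: Iterable[float], tolerance_min: float) -> float:
    """Downtime (minutes) users notice when the application bridges short backend outages.

    Simplifying assumption: the application keeps working on its own (like an ATM
    in offline mode) for up to `tolerance_min` minutes per outage; only the rest
    of a longer outage reaches users.
    """
    return sum(max(0.0, d - tolerance_min) for d in backend_outages_min)


outages = [0.5, 2.0, 12.0]  # hypothetical backend outages in one month (minutes)
print(sum(outages), user_visible_downtime(outages, tolerance_min=5.0))  # 14.5 7.0
```

The catch for SLA calculations is that the backend's downtime (14.5 minutes here) and the users' downtime (7 minutes) now differ, so neither simple multiplication rule applies directly.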

Challenge #3: Independent vs. Dependent Events

The third challenge requires some basic statistics. The mathematical models assume that component failures are independent events: today VM 42 fails, tomorrow VM 92. Each outage is an individual “act of God,” unrelated to other outages.

This assumption is often incorrect. For example, all VMs running on the same physical hardware crash if that hardware fails. Such VM crashes have a common root cause and are therefore, in “statistics language,” not independent.
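A common-cause failure can be modeled by putting the shared component in series with the redundant pair. The sketch assumes a hypothetical shared host with a 99.9% SLA and VM-level failures that are otherwise independent, and compares the result with the naive formula that treats both VMs as fully independent.

```python
from math import prod


def parallel_sla(*slas: float) -> float:
    # Naive redundancy rule: assumes the instances fail independently.
    return 1 - prod(1 - s for s in slas)


def pair_on_shared_host(vm_sla: float, host_sla: float) -> float:
    """Two redundant VMs that share one physical host.

    A host failure takes both VMs down at once (common root cause), so the
    host sits in series with the redundant pair.
    """
    return host_sla * parallel_sla(vm_sla, vm_sla)


naive = parallel_sla(0.99, 0.99)           # 99.99% if failures were independent
shared = pair_on_shared_host(0.99, 0.999)  # hypothetical 99.9% host SLA
print(f"{naive:.2%} vs {shared:.2%}")      # 99.99% vs 99.89%
```

In this model, the pair can never be more available than the shared host, no matter how reliable the individual VMs are.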

Challenge #4: Unfavorable Cloud SLAs

Cloud providers market themselves as highly reliable, with 99.9% or 99.99% SLAs (or even more nines), but too often, their fine print nullifies the value of those SLAs. Some fine-print “highlights”:

Downtimes below one minute do not count

VM availability only means that the VM is reachable, with no statement about whether anything (or what) actually runs on it

Higher VM SLAs require running the application redundantly on two VMs (in different data centers) – a concept legacy applications might not support

SLAs referring to an instance pool but not to each individual instance

If IT managers only gave guarantees backed by cloud vendor SLAs, they could often promise only something like: “Our air traffic control system might fail frequently, but no outage is longer than one minute.”
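The effect of excluding short outages is easy to quantify. The sketch below compares actual availability with the availability counted under a fine-print rule that ignores outages below one minute; the 30-day month and the outage pattern (120 outages of 50 seconds each) are hypothetical.

```python
MINUTES_PER_MONTH = 30 * 24 * 60  # assumed 30-day month


def monthly_availability(outages_min: list[float], min_counted_min: float = 0.0) -> float:
    """Availability for one month, ignoring outages shorter than `min_counted_min`."""
    counted = sum(d for d in outages_min if d >= min_counted_min)
    return 1 - counted / MINUTES_PER_MONTH


outages = [50 / 60] * 120  # hypothetical: 120 outages of 50 seconds each (100 minutes)
print(f"actual:      {monthly_availability(outages):.3%}")                       # 99.769%
print(f"contractual: {monthly_availability(outages, min_counted_min=1.0):.3%}")  # 100.000%
```

Against a 99.9% SLA, this month is a clear breach in reality but perfect on paper.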

Thus, they might have to promise and deliver SLAs that are only partially backed by cloud provider SLAs. But do not fool yourself into thinking that on-premises data centers are actually better just because they promise a higher SLA. These promises might be backed by historically measured uptime rates and a superior data center design, or the provider might just hope for the best and factor expected penalties into its calculation.

So, my advice for the cloud is this: If the business starts questioning SLAs, do not follow Denholm Reynholm’s approach and jump out of the window. It won’t help the company, and your successor would not do any better. The SLA mess in the cloud is, in my opinion, an inconvenient reality for the foreseeable future.
