Understanding Application Containers and OS-Level Virtualization

Application container platforms like Docker are enabling the creation of better distributed systems

Let’s imagine for a minute that you have a commonly used virtual hosting environment. Within that environment you have to securely segment your physical resources between lots of users. The users must be segmented on the virtual environment and have their own “virtual space.”

Now, to manage these users and their respective resources, you deploy a powerful tool that allows the kernel of the operating system to have multiple user space instances, all isolated and segmented from each other. These user space instances, also known as containers, allow the user within the container to experience operations as if they’re working on their own dedicated server. The administrator with overall rights to these containers can then set policies around resource management, interoperability with other containers within the operating system, and required security parameters. From there, the same administrator can manage as well as monitor these containers and even set automation policies around dynamic load-balancing between various nodes.

All of this defines operating system-level virtualization.
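To make the administrator's role more concrete, here is a minimal sketch of per-container resource policies. It is a toy model in plain Python, not how any real container platform stores its settings. The `ContainerPolicy` class, its field names, and the sample values are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ContainerPolicy:
    """Hypothetical per-container policy an administrator might define."""
    name: str
    memory_limit_mb: int  # hard cap on memory for this container
    cpu_shares: int       # relative CPU weight compared with other containers


def cpu_fraction(policies, name):
    """Return the share of CPU a container gets when every container is busy."""
    total = sum(p.cpu_shares for p in policies)
    target = next(p for p in policies if p.name == name)
    return target.cpu_shares / total


def fits_on_host(policies, host_memory_mb):
    """Check that the combined memory limits do not exceed the host's memory."""
    return sum(p.memory_limit_mb for p in policies) <= host_memory_mb


# Illustrative values only.
policies = [
    ContainerPolicy("web", memory_limit_mb=512, cpu_shares=1024),
    ContainerPolicy("db", memory_limit_mb=2048, cpu_shares=2048),
    ContainerPolicy("batch", memory_limit_mb=1024, cpu_shares=1024),
]
```

With these sample policies, `cpu_fraction(policies, "db")` works out to half of the CPU under full contention, and `fits_on_host(policies, 4096)` confirms that the three memory limits fit on a host with 4 GB of memory.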

Now let’s dive a bit further. Operating system-level virtualization is a great tool to create powerfully isolated multitenant environments. However, there are some new challenges organizations are facing when working with containers and operating system-level virtualization:

What if you have an extremely large number of containers, all requiring various virtual machine resources?

What if you need to better automate and control the deployment and management of these containers?

How do you create a container platform capable of running on more than just a Linux server?

How do you deploy a solution capable of operating on premises, in a public or private cloud, and everywhere in between?

We can look at a very specific example of where application containers are making a powerful impact. Technologies like Docker are now adding a new level of abstraction as well as automation to the operating system-level virtualization platform running on Linux servers. Docker uses resource isolation features of the Linux kernel, such as cgroups and kernel namespaces, to allow isolated containers to run within a single Linux instance. When working with a large number of containers spanning a number of different nodes, this helps eliminate the overhead of starting new virtual machines.
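One way to see cgroups at work is to look at which control groups a process belongs to. On Linux, the kernel exposes this in `/proc/<pid>/cgroup`, one line per hierarchy in the form `hierarchy-ID:controller-list:cgroup-path`. The sketch below parses that format. The function name `parse_cgroup_file`, the `"unified"` key, and the sample text (including the placeholder container ID `abc123`) are assumptions made for illustration, not part of Docker or the kernel.

```python
def parse_cgroup_file(text):
    """Parse the contents of a /proc/<pid>/cgroup file.

    Each line has the form "hierarchy-ID:controller-list:cgroup-path".
    Returns a dict mapping each controller name to its cgroup path.
    A line with an empty controller list (the cgroup v2 unified
    hierarchy) is stored under the key "unified".
    """
    membership = {}
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        # Split on the first two colons only, since the path may contain colons.
        _hierarchy_id, controllers, path = line.split(":", 2)
        if not controllers:
            membership["unified"] = path
            continue
        for controller in controllers.split(","):
            membership[controller] = path
    return membership


# Made-up sample in the cgroup v1 layout; the container ID is a placeholder.
SAMPLE = """\
4:memory:/docker/abc123
3:cpu,cpuacct:/docker/abc123
1:name=systemd:/docker/abc123
"""

if __name__ == "__main__":
    try:
        # On a Linux host this shows the cgroups of the current process.
        with open("/proc/self/cgroup") as handle:
            print(parse_cgroup_file(handle.read()))
    except OSError:
        # Fall back to the sample on systems without /proc.
        print(parse_cgroup_file(SAMPLE))
```

Run inside a container and then on the host, a script like this would typically report different cgroup paths, which is the kernel-level grouping that lets limits be applied per container rather than per virtual machine.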

With all of this in mind, it’s important to understand that more organizations are deploying workloads built on Linux servers. These workloads are running large databases, mining operations, big data engines, and much more. On these Linux systems, container utilization is also increasing. Here are ways a platform like Docker can help:

Greater container controls. Application containers help abstract the operating system-level virtualization process. This gives administrators greater control over provisioning services, greater security and process restriction, and even more intelligent resource isolation. The other big aspect is allowing and controlling how containers spanning various systems share resources.

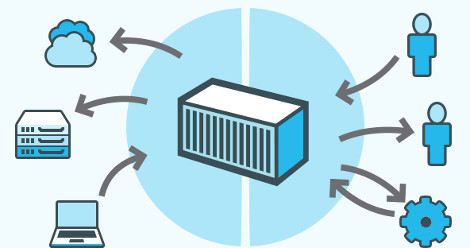

Creating distributed systems. Platforms like Docker allow administrators to manage containers, their tasks, running services, and other processes across a distributed, multi-node system. When there is a large system in place, Docker allows for a “resource-on-demand” environment, where each node can obtain the resources it needs, right when it needs them. From there, you begin to integrate with systems requiring large amounts of scale and resources, such as MongoDB. With that in mind, big data platforms now span a number of different, highly distributed nodes. These nodes are located at a private data center, within public clouds, or at a service provider. How do you take your containers and integrate them with the cloud?

Integration with cloud and beyond. In June, Microsoft Azure added support for Docker containers on Linux VMs, enabling the broad ecosystem of “Dockerized” Linux applications to run within the Azure cloud. With even more cloud utilization, container systems using Docker can also be integrated with platforms like Chef, Puppet, OpenStack, and AWS. Even Red Hat recently announced plans to incorporate advanced Linux tools such as systemd and SELinux into Docker. All of these tools allow you to span your container system beyond your own data center. New capabilities allow you to create your own hybrid cloud container ecosystem spanning your data center and, for example, AWS.
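The idea of containers drawing on resources across nodes in a data center and a public cloud can be sketched as a simple placement decision. This is a toy model under stated assumptions, not Docker's scheduling logic or any orchestration tool's API: the `Node` class, the `place` function, the "most free memory wins" rule, and the node names are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """Hypothetical host that can run containers."""
    name: str
    location: str          # e.g. "datacenter" or "public-cloud"
    free_memory_mb: int
    containers: list = field(default_factory=list)


def place(container_name, memory_mb, nodes):
    """Place a container on the node with the most free memory that can hold it.

    Returns the chosen node, or None when no node has enough free memory.
    """
    candidates = [n for n in nodes if n.free_memory_mb >= memory_mb]
    if not candidates:
        return None
    chosen = max(candidates, key=lambda n: n.free_memory_mb)
    chosen.free_memory_mb -= memory_mb
    chosen.containers.append(container_name)
    return chosen


# Illustrative hybrid setup: one node on premises, one in a public cloud.
nodes = [
    Node("dc-1", "datacenter", free_memory_mb=2048),
    Node("cloud-1", "public-cloud", free_memory_mb=4096),
]
```

Calling `place("mongo-1", 3072, nodes)` selects the cloud node because it is the only one with enough free memory, while a later, smaller request such as `place("web-1", 1024, nodes)` lands on the data center node. A real system would also weigh CPU, network locality, and policy, which this sketch leaves out.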

Docker and other open source projects continue to abstract operating system-level virtualization and are allowing Linux-based workloads to be better distributed. Technologies like Docker help pave the way for better container management and automation. In fact, recognizing that so many environments run a heavy mix of Linux and Windows servers, Microsoft plans to integrate and support Docker containers in the next wave of Windows Server, thereby making Docker’s open solutions available across both Windows Server and Linux. If you’re working with a container-based solution running on a Linux server, start taking a look at how application containers can help evolve your ecosystem.
