Data Center Liquid Cooling: Comparing the Top Designs

Rear-door heat exchangers, direct-to-chip, conduction cooling, immersion – here’s how they differ from each other

As compute density rises (however unevenly), one big question data center managers face is whether commodity CPUs, chipsets – and especially accelerator chips (your GPUs or FPGAs) – will drive heat density so far as to make liquid cooling as mandatory in the enterprise as it is in high-performance computing. Should enterprises start making their investment plans now?

That liquid is a better heat-transfer medium than air is indisputable. HPC centers worldwide depend on it today for applications where even forced-air cooling wouldn’t cut it for more than an hour. In many of these systems, chilled water is pumped into cold plates, which make direct contact with processors.
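To put rough numbers on that claim, the sketch below uses the sensible-heat relation Q = m_dot × c_p × ΔT to estimate how much air and how much water must flow to carry away the same heat load. The 30 kW rack load and the 10 K coolant temperature rise are illustrative assumptions, not figures from this article; the fluid properties are approximate room-temperature textbook values.

```python
# Rough comparison of air vs. water as a heat-transfer medium.
# Assumed (illustrative) inputs: a 30 kW rack and a 10 K coolant temperature rise.

FLUIDS = {
    # name: (density in kg/m^3, specific heat in J/(kg*K)), approximate values
    "air": (1.2, 1005.0),
    "water": (998.0, 4186.0),
}


def volumetric_flow_m3_per_s(heat_w, delta_t_k, density, cp):
    """Volume flow needed to absorb heat_w with a delta_t_k temperature rise."""
    mass_flow = heat_w / (cp * delta_t_k)  # kg/s, from Q = m_dot * cp * dT
    return mass_flow / density             # m^3/s


def compare(heat_w=30_000.0, delta_t_k=10.0):
    """Return the required volume flow (m^3/s) for each fluid."""
    return {
        name: volumetric_flow_m3_per_s(heat_w, delta_t_k, rho, cp)
        for name, (rho, cp) in FLUIDS.items()
    }


if __name__ == "__main__":
    flows = compare()
    for name, flow in flows.items():
        print(f"{name}: {flow * 1000:.2f} L/s")
    print(f"air/water volume ratio: {flows['air'] / flows['water']:.0f}x")
```

Under these assumptions, air has to move a few thousand times the volume that water does for the same heat load, which is the practical meaning of "better heat-transfer medium" here.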

The everyday enterprise has been dipping its toes into the shallow end of the liquid cooling pool since around 2000, some buying racks with rear-door heat exchangers (RDHx). Thirteen years ago, as part of a contest, IBM engineers came up with a rear-door design that mounted onto existing racks, didn’t need fans, and leveraged a facility’s existing chilled-water plant. IBM was finally granted a patent on the design in 2010, and most passive exchanger components today appear to be derivatives of that model, although many chilled systems today add the fans back anyway. A decade-and-a-half is a long time for any technology to be called “new.”

Rear-Door Heat Exchangers

A May 2014 case study by the Department of Energy’s Lawrence Berkeley National Laboratory perhaps spelled out the opportunity for RDHx best. Since the main source of heat in a server rack is closest to its rear door anyway, the reasoning went, that’s where heat extraction will be most efficient, “as air movement can be provided solely from the IT equipment fans. RDHx greatly reduces the requirement for additional cooling in the server room by cooling the exhaust from the racks.”

It might also be possible, they wrote, to raise the temperature of the water being pumped into the racks (chilling it less) and accomplish much the same results.

A rear-door heat exchanger system in place at Lawrence Berkeley National Lab

But LBNL’s situation was different from your typical enterprise data center. With a battery of homogeneous, high-density blade servers, whose workloads were already distributed for maximum efficiency, and whose exhaust ports were all pointed the same way, the research data center was more like a trimmed estate garden than the jungle that is your everyday enterprise computing facility.

And that may be why some engineers are arguing now that the next step forward for liquid cooling in the enterprise data center — if such a step exists — is to bring the coolant into closer contact with the heat source.

The Case for Conduction

“If you are a forward-thinking data center operator, you should be thinking of liquid cooling today,” Bob Bolz, head of HPC and data center business development for conduction cooling systems maker Aquila Group, said in an interview with Data Center Knowledge.

Air cooling will not cease to exist at any point on his timeline. Indeed, his company very recently acquired airflow optimization system maker Upsite Technologies, resulting in a firm with deep stakes in the success of both liquid and air.

But, in Bolz’s experience, both Intel Xeon and AMD Epyc server-class processors are capable of reaching temperatures in excess of 200°F. As chip temperature rises linearly, the energy required to force enough air over it may rise exponentially, especially, Bolz said, in locations over 5,000 feet in altitude, such as Albuquerque (Aquila’s home base) or Denver.

“We’ve been fighting air cooling here for going on 20 years,” he said. “Fans failing, power supplies failing, things like that. It usually is because we’re pushing air and stressing components that are not as stressed at sea level.”
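The altitude effect Bolz describes can be approximated with a short calculation. The sketch below is a simplification under stated assumptions: it uses the International Standard Atmosphere to estimate air density at 5,000 feet, and the idealized fan affinity laws (fan power proportional to air density times the cube of volumetric flow) to estimate the extra fan power needed to push the same mass of air. Real fans and real server rooms will deviate from both idealizations.

```python
# Sketch: how thinner air at altitude raises the fan power needed for the same cooling.
# Assumptions: International Standard Atmosphere (ISA) and idealized fan affinity laws.

SEA_LEVEL_TEMP_K = 288.15    # ISA sea-level temperature
LAPSE_RATE_K_PER_M = 0.0065  # ISA tropospheric lapse rate
DENSITY_EXPONENT = 4.25588   # g*M/(R*L) - 1 for dry air
FEET_TO_METERS = 0.3048


def density_ratio(altitude_m):
    """Air density relative to sea level in the ISA troposphere."""
    base = 1.0 - LAPSE_RATE_K_PER_M * altitude_m / SEA_LEVEL_TEMP_K
    return base ** DENSITY_EXPONENT


def fan_power_ratio(altitude_m):
    """Fan power relative to sea level for the same air mass flow.

    Equal mass flow needs volumetric flow scaled by 1/ratio; with
    power proportional to density * flow**3, power scales as 1/ratio**2.
    """
    ratio = density_ratio(altitude_m)
    flow_ratio = 1.0 / ratio
    return ratio * flow_ratio ** 3


if __name__ == "__main__":
    altitude_m = 5000 * FEET_TO_METERS
    print(f"density vs. sea level: {density_ratio(altitude_m):.2f}")
    print(f"fan power vs. sea level: {fan_power_ratio(altitude_m):.2f}")
```

In this idealized model, air at 5,000 feet is roughly 14 percent less dense than at sea level, and fans need on the order of a third more power to deliver the same cooling air mass.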

Aquila Aquarius cold plate conduction cooling

Aquila’s Aquarius is a conduction cooling system, designed for use in Open Compute Project-compliant racks. It relies on a relatively flexible, fixed, 23-inch by 24-inch stainless steel cold plate. A manifold delivers warm water (not chilled, not glycol-infused) through one side of this plate. Passive aluminum heat risers are installed on the CPU and other heat-generating components, such as GPUs or FPGAs. Heat spreaders are then attached to draw heat from the risers, toward a flat plane at about the level of the tallest DIMM, usually the highest point on the motherboard.

The cold plate is then pressed into contact at what Aquila calls the co-planarity point, resulting in a direct conductive path between chip surfaces and circulating water, which passes through an exhaust valve at the opposite side of the plate. From there, it may pass into the facility’s existing chiller plant, where it may be removed from the building, perhaps pumped through a cooling tower or a heat exchanger, letting natural evaporation reduce its temperature for recirculation.

Spot-On Cooling

Calgary, Alberta-based CoolIT Systems utilizes a circulating liquid approach that is somewhat more localized, moving coolant to small plates installed directly on the chips themselves.

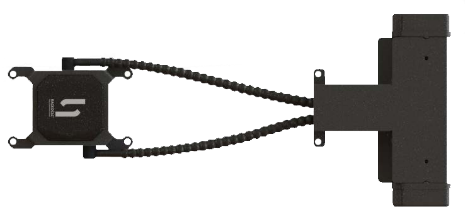

CoolIT’s connection between the coolant circulator and the CPU’s heat sink

CoolIT makes a closed-loop fluid circulator that cycles pre-filled liquid coolant through a circuit of flexible pipes [pictured above] into cold plates that are custom-fitted to specific chip sockets and chipset substrates.

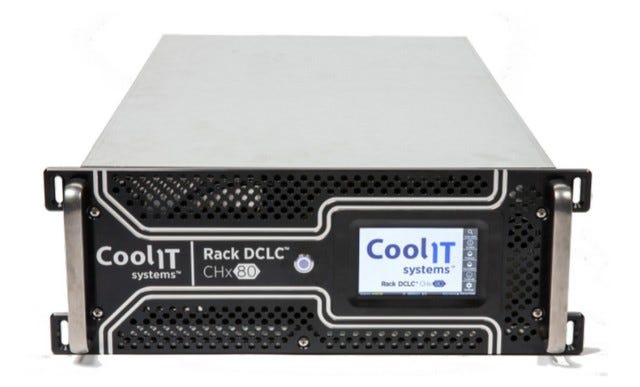

CoolIT’s heat exchanger unit

With the aid of radiators positioned away from the chip’s surface, CoolIT says, its direct-to-chip liquid cooling system (DCLC) transfers heat away from the hardware more effectively and more rapidly than air. Here, a liquid heat exchanger may be mounted either inside the rack as a discrete unit [pictured above], or as a stand-alone module outside the cabinet.

Here too, chilling water before it reaches the inlet point is not essential, and, as CoolIT VP of product marketing Patrick McGinn explained, may not be practical.

Overcoming the Liquid Obstacles

Joe Capes, director of global cooling business development for Schneider Electric, believes that no airflow-centric solution is capable of addressing the entirety of the heat problem in an enterprise data center.

In the more efficient of today’s air-cooled data centers, water that carries heat away from the data center floor can be as warm as 68°F (20°C), he said. In facilities using DCLC and other liquid cooling techniques, such as conduction and immersion, the coolant’s temperature can be close to double that.

This gives operators the option to use lower-energy dry coolers in place of evaporative heat rejection. The result, quite ironically, is that a fully economized liquid-cooled data center may consume less water than one that relies on air cooling.
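The reasoning behind that option can be sketched numerically. A dry cooler rejects heat to outdoor air without evaporating water, so it can only bring the coolant down to a few degrees above the ambient dry-bulb temperature (the "approach"). The sketch below converts the Fahrenheit figures above to Celsius and checks whether a dry cooler alone could deliver a required supply temperature. The 35°C ambient, the 5 K approach, and both supply temperatures are illustrative assumptions, not figures from this article.

```python
# Sketch: can a dry cooler alone deliver the coolant temperature the IT load needs?
# Ambient temperature, approach, and supply targets below are illustrative assumptions.


def fahrenheit_to_celsius(temp_f):
    """Convert a temperature from degrees Fahrenheit to degrees Celsius."""
    return (temp_f - 32.0) * 5.0 / 9.0


def dry_cooler_sufficient(required_supply_c, ambient_dry_bulb_c, approach_k=5.0):
    """True if a dry cooler can cool the fluid to the required supply temperature.

    A dry cooler cannot cool below ambient dry-bulb temperature plus its approach.
    """
    coldest_achievable_c = ambient_dry_bulb_c + approach_k
    return required_supply_c >= coldest_achievable_c


if __name__ == "__main__":
    print(f"68 F  = {fahrenheit_to_celsius(68):.1f} C")
    print(f"136 F = {fahrenheit_to_celsius(136):.1f} C")

    hot_day_c = 35.0  # assumed summer ambient
    # Assumed supply targets: 15 C chilled water vs. 40 C warm-water liquid cooling.
    for label, supply_c in [("chilled water", 15.0), ("warm water", 40.0)]:
        ok = dry_cooler_sufficient(supply_c, hot_day_c)
        print(f"{label} at {supply_c:.0f} C: dry cooler sufficient = {ok}")
```

The warmer the coolant the IT equipment can accept, the more hours of the year a dry cooler can do the job without evaporative assistance, which is where the water savings come from.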

“A lot of data center owners and operators today are telling us they’re more concerned about water use than they are about [energy] efficiency, or at least they’re willing to sacrifice a degree of efficiency to save consumption of water,” Capes said.

Both conduction and DCLC seek to capture heat from the few spots in modern servers where the most heat is generated: the specific chips where workloads are concentrated. Compared to a full-immersion system, such as Green Revolution Cooling’s, a conduction system draws heat away from these chips without soaking them in the coolant directly.

One of the challenges DCLC vendors face concerns those last few feet between cabinet ingress and hot spots. CoolIT does produce models that are custom-fitted to specific brands of equipment, and partner manufacturers have reserved spots on their substrates for attaching pipe connections.

But that’s not the last, or the least, of these challenges.

Lars Strong, company science officer at Upsite, said different facilities deal with DCLC piping challenges in different ways. One is negative pressure pumping, which keeps the coolant loop below ambient pressure so that, in the event of a leak, the pipes suck in air rather than squirt water.

With Aquila’s design, such pipes don’t even enter the server, he noted. “The entire cabinet has just two connections: one in and one out. Everything else is soldered, sealed, and tested to much higher than operating pressure. So, it pretty much eliminates the potential for leaking within the cabinet.”

Liquid cooling designs have the potential to render an entire quarter-century of airflow standards and practices outmoded. Between now and that time, however, lies perhaps the most significant obstacle of all: a certain skittishness among operators and facilities managers who know what typically happens when water makes contact with electricity and who may not be quite ready to entrust their data centers’ safety and stability to a thin layer of cold metal.

But their reluctance would have them make do with more and more hot air.
