Just Add Water: Augmenting Air-Cooled Data Centers with Liquid

Seeing hot chips in the pipeline, OCP hyperscalers want to standardize retrofitting air-cooled servers with plumbing.

Before long, you won’t be able to cool server-class CPUs, GPUs, and other accelerators, such as FPGAs, using airflow alone. That’s according to engineers from Microsoft, a company that just last year said liquid cooling was nowhere near practical for data centers.

“What we’re trying to do here is prepare our industry for the advent of liquid-cooled chips,” Husam Alissa, a senior engineer with Microsoft’s Data Center Advanced Development team, said in a presentation during the OCP Global Summit in May, held virtually. “They will require liquid cooling. So, our effort here is to align with OCP, and make the adaptation to liquid cooling more seamless.”

OCP, or Open Compute Project, is the effort Facebook, Goldman Sachs, and a few others launched in 2011 to wrest control of the way their data center hardware and infrastructure are designed – and priced – away from companies that at the time were market “incumbents” (the likes of Dell, HP, or Cisco) and had gotten used to calling the shots.

Microsoft was just one of a plethora of OCP Summit participants — contributors to the OCP Advanced Cooling Solutions (ACS) sub-project — who managed to evolve their liquid-cooled projects from something resembling Project Mercury back before it was safe to launch astronauts to more like Apollo after three or four successful touchdowns. Since 2016, Microsoft has been working on Project Olympus, an effort to refine the chassis and form factors of servers built for a hyperscale cloud like Azure.

Now, OCP leaders like Microsoft and Facebook, and other ACS subgroup members, are focusing on new ways to maintain the thermal stability of processors, accelerators, and storage components.

The Future, on Tap

“Hybrid liquid cooling” retrofits typically air-cooled systems with appendages that deliver liquids (including H2O but recently also new classes of manufactured fluids, originally conceived for fire retardation) directly through flexible pipes and onto sealed enclosures around silicon chips. If what Alissa described soon comes to pass, a new class of flexible hot and cold piping, using “blind mate” (genderless one-snap) connections, will be channeled alongside Ethernet and electric cables.

On the incoming side of the circuit will be a dielectric fluid – not water, and not mineral oil. It will be piped to an enclosure surrounding critical components — perhaps a medium-sized box for the motherboard, perhaps just a small jewel case for individual chips. There, the fluid will come to a boil. Then the outgoing side of the circuit will pipe the vapor off to an enclosure that captures the heat, re-condenses the vapor back into fluid, and starts the whole process again.

Server racks already circulate air. It’s not practical, it turns out, to imagine converting them into appliances that circulate water instead. Unless you go the full-immersion route, airflow and the flow of fluid must coexist. Engineers contributing to OCP are looking for efficiencies, ways in which both flows benefit rather than obstruct one another: “air-assisted liquid cooling” (AALC).

They’re up against a clock they can hear ticking but can’t see. The warning Microsoft’s Alissa made appears no longer disputable: Soon, there will be chips that cannot be air-cooled. Had there not been a pandemic, their time of arrival would have been easier to estimate.

As Phillip Tuma, an application development specialist with 3M’s Electronics Materials Solutions Division, told OCP attendees, the emerging abundance of liquid cooling methods can be sorted using four characteristics (for a theoretical total of 31 varieties):

Heat transfer mode. In a single-phase system, the fluid stays liquid throughout the loop and carries heat away as it warms, whereas in a two-phase system the fluid boils at the heat source and the vapor is condensed back into liquid elsewhere.

Convection mode. This determines whether the impetus for fluid flow is an active mechanism, such as a pump, or a passive one, such as the natural tendency of warmed fluid and vapor to rise.

Containment method. In a full-immersion system (find our latest deep dive – Ha! – on immersion here), all the servers or server chassis in a cluster are enclosed in a single tank. Alternatively, a single server can be enclosed in a sealed clamshell. Or, in an AALC scheme, a sealed half-shell can encase a single chip, either equipped with a passive heatsink or coated with a kind of ceramic (not silver paste) that promotes boiling, much like a street surface on a hot summer day.

Fluid chemistry. A hydrocarbon fluid is like a mineral oil but highly refined and purified. By contrast, a fluorocarbon fluid is manufactured, like a fire retardant, and doesn’t behave like an oil. Both classes have lower boiling points than water, and both require constant filtering to remove impurities, like water in an aquarium.

Methane is a hydrocarbon, and as everyone with an outdoor barbecue knows, it’s highly flammable. At room temperature, it’s a gas, which disqualifies it from use in immersion. What makes the hydrocarbons used in cooling oils safer than methane or hexane (merely “combustible” rather than flammable), said Tuma, is that they have carbon numbers of 10 or greater. “Going up from there you eventually run into oils that are simply too viscous to be pumpable and useful for immersion,” he remarked.

Case Studies

For hybrid AALC systems to be certified in data center environments, they will need to be classified. To that end, Intel engineer Jessica Gullbrand offered a fairly simple scheme. It begins with a “hybrid basic” component cooling configuration: primarily the introduction of water-infused cold plates to an air-cooled frame, contacting only the high-power components, such as GPUs or CPUs. A “hybrid intermediate” model adds cold plate contact to DIMMs. Here is where airflow starts to be significantly reduced, Gullbrand warned, and where the liquid cooling method must compensate in order to be effective.

Cold plates contacting other equipment, such as storage arrays, would qualify the system as “hybrid advanced.” Once airflow is completely restricted or no longer plays a role in cooling, the system can’t be classified as “hybrid.” Racks may be classified separately, according to Intel’s contribution to proposals, depending on whether they include heat exchangers in the front or rear.
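As a rough illustration of the scheme Gullbrand described, the tiers can be expressed as a small decision function. This is only a sketch under stated assumptions: the component labels ("cpu", "gpu", "dimm", "storage") and the names `HybridClass` and `classify` are hypothetical, invented for this example, and are not taken from any OCP requirements document.

```python
from enum import Enum
from typing import Iterable


class HybridClass(Enum):
    BASIC = "hybrid basic"
    INTERMEDIATE = "hybrid intermediate"
    ADVANCED = "hybrid advanced"
    NOT_HYBRID = "not hybrid"


# Hypothetical component labels, chosen for illustration only.
HIGH_POWER = {"cpu", "gpu"}
MEMORY = {"dimm"}


def classify(cold_plated: Iterable[str], airflow_contributes: bool) -> HybridClass:
    """Map the set of cold-plated components to one of the tiers in the article."""
    parts = {p.lower() for p in cold_plated}
    # Once airflow no longer plays a role, the system is no longer "hybrid".
    if not airflow_contributes:
        return HybridClass.NOT_HYBRID
    # Cold plates on anything beyond high-power parts and DIMMs: advanced.
    if parts - HIGH_POWER - MEMORY:
        return HybridClass.ADVANCED
    # Cold plates extended to DIMMs: intermediate.
    if parts & MEMORY:
        return HybridClass.INTERMEDIATE
    # Cold plates only on high-power components: basic.
    if parts & HIGH_POWER:
        return HybridClass.BASIC
    # No cold plates at all: a purely air-cooled system, so not hybrid either.
    return HybridClass.NOT_HYBRID


if __name__ == "__main__":
    print(classify({"cpu", "gpu"}, airflow_contributes=True).value)
    print(classify({"cpu", "dimm", "storage"}, airflow_contributes=True).value)
```

The checks run from the most to the least liquid-cooled configuration, so a system always lands in the highest tier it qualifies for.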

How much of this classification scheme is for data center engineers and certification professionals, and how much will be for risk-management professionals? Could insurance adjustors, for example, recalculate risk using a variety of new variables, such as the probability of leakage?

We put the question to Intel’s Gullbrand. “We haven’t specified in the [requirements] documents specifically what needs to be recorded in regards to risk management,” she responded. “We have mentioned a few things that need to be considered. What do you do, for example, if you have an accident or a leakage? If there’s a spill, how would you handle that? To have those processes in place, of course, before something is happening.”

So, there may end up being a risk-management section in the OCP’s final requirements documents for components like cold plates “to make sure people are thinking the solutions through,” Gullbrand said.

Hybrid Basic and Intermediate

The simplest hybrid cooling configuration, as envisioned by Microsoft and Facebook data center engineers, begins with the attachment of a heat exchanger (HX) to the rear of the rack. It’s a bolt-on component that includes a series of five 280mm fans generating up to 4,800 cubic feet per minute (CFM) of airflow. It would also include temperature and air pressure monitors, and on-board sensors.
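To put the airflow figure in perspective, the heat a stream of air can carry away is commonly estimated as density times specific heat times volumetric flow times temperature rise. The sketch below applies that textbook relation to 4,800 CFM. The air density (1.2 kg/m³), specific heat (1,005 J/kg·K), and the 10 K temperature rise are assumptions chosen for illustration, not figures from the OCP presentations, and the function name is hypothetical.

```python
# One cubic foot per minute expressed in cubic meters per second.
CFM_TO_M3_PER_S = 0.3048 ** 3 / 60.0


def air_heat_removal_w(cfm: float, delta_t_k: float,
                       rho: float = 1.2, cp: float = 1005.0) -> float:
    """Sensible heat (watts) carried off by air: rho * cp * flow * delta T.

    rho (kg/m^3) and cp (J/(kg*K)) default to typical room-condition values,
    which are assumptions rather than measured data.
    """
    volume_flow_m3_s = cfm * CFM_TO_M3_PER_S
    return rho * cp * volume_flow_m3_s * delta_t_k


if __name__ == "__main__":
    # Assumed 10 K air temperature rise across the heat exchanger.
    watts = air_heat_removal_w(4800, delta_t_k=10.0)
    print(f"{watts / 1000:.1f} kW")
```

Under these assumptions the result is on the order of a few tens of kilowatts; a different temperature rise scales the answer proportionally.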


As Alissa explained, a reservoir and pumping unit (RPU) takes the place of some airflow components in a rack, often those installed on the bottom. It’s separated from the HX, making the whole system more modular and easier to maintain and repair. Alternatively, if space is available, the HX can be installed in the rack itself.

“Therefore, the liquid-to-air portion of this solution becomes flexible and can be optimized for performance or the end use case,” Alissa said. “And the RPU, being the hub of the solution, can be standardized and optimized, and also be designed for interoperability to enable multiple solution providers to support this product.”

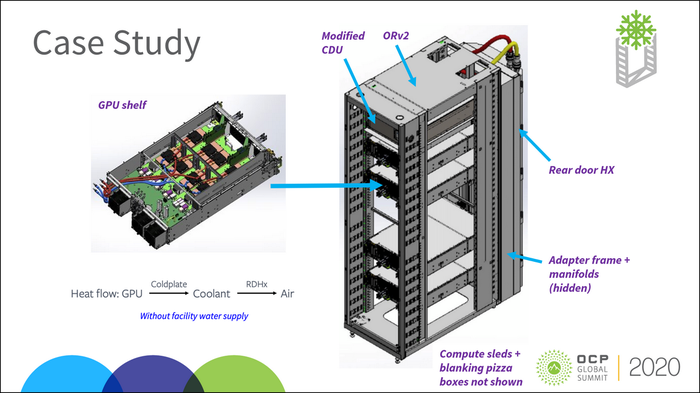

The engineers of Calgary-based CoolIT Systems are largely responsible for the design of the RPU, which, according to the company’s hyperscale solutions director Cam Turner, was derived from a case study involving its CHX80 rack-based liquid-to-liquid coolant distribution unit (CDU). There, a rack was cooled using a variety of cold plates making direct contact with hot components and infused with liquid through the addition of a closed liquid loop.


For the new case study (depicted above), heat absorbed by the cold plates was exhausted by the HX after passing through the air current being delivered to the other components in the system in the normal fashion. The CHX80 was first optimized for the narrowest OCP Open Rack 19-inch form factor, with an optional adapter plate attachment for larger sizes.

“To support interoperability between vendors, standardization for electrical and liquid interfaces is required,” explained Turner, saying something that in three years’ time may very well go without saying. “This includes not only the type of connector but also the locations, just to make sure there is no crashing with any other components in the system, and when you swap out components, you don’t run short on cable or tube length.”

It’s still an air-cooled rack, so conceiving how to retrofit an existing rack isn’t such a stretch. And the liquid involved can be regular water, although it does not need to come directly from the facility water supply. Still, Facebook thermal engineer John Fernandes told attendees, liquid makes all the difference.

“Extensive testing of this rack-level setup involving GPU sleds showed [that] for a given airflow rate, this closed-loop liquid cooling solution delivers much promise in the form of 50 percent higher power consumption support, as compared to the original air-cooled solution,” Fernandes said. “However, further improvement in cooling components could push these projections even further.”

Hybrid Advanced

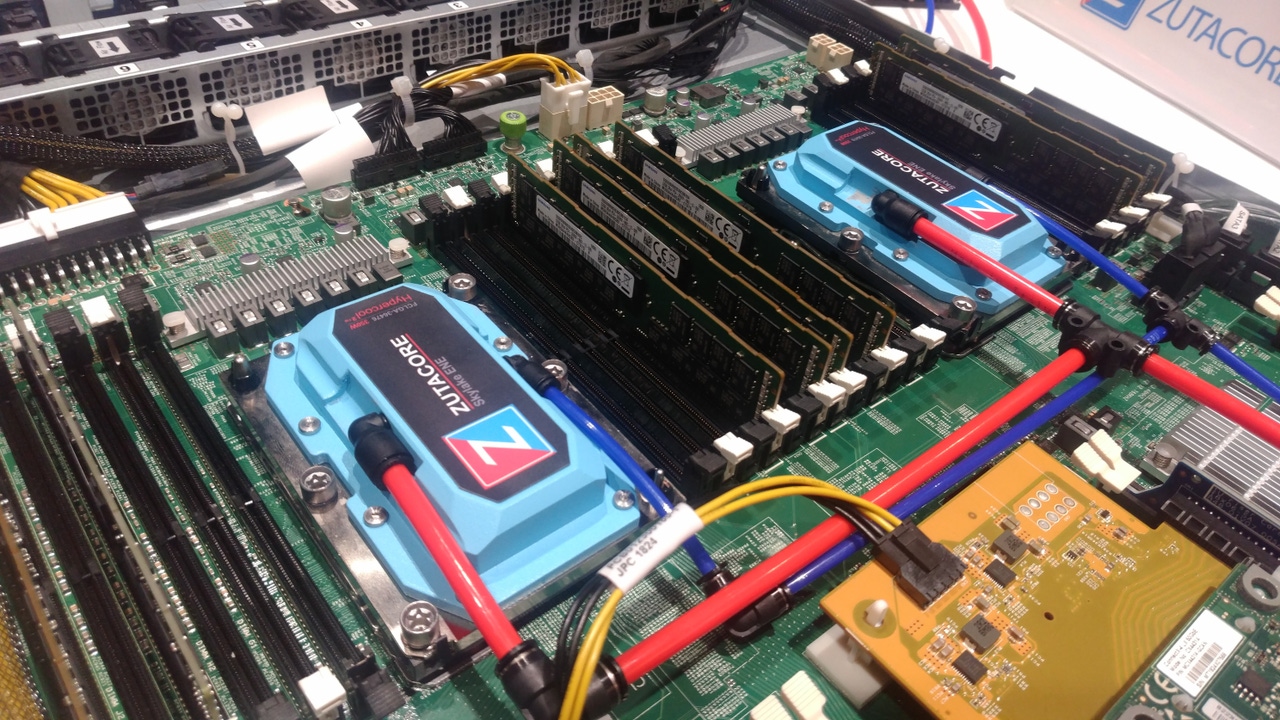

The demonstration that may have blown the doors off the entire OCP conference (along with any built-in heat exchangers) was delivered by way of a video from a company called ZutaCore.

The design, developed in partnership with rack maker Rittal, involves equipping the rear-door HX with a two-phase liquid infusion system that pumps dielectric fluid through a 4mm inlet tube into a chamber directly over the chip, whose heat sink has been removed. There, the fluid visibly boils, and vapor bubbles carry heat away through a 6mm outlet tube to a refrigerant-distribution manifold. From there, vapor is passed through to a heat rejection unit (HRU).


“The HRU contains fans that blow cold-aisle air across a condenser, forcing the vapor to give up its heat and condense back into a liquid,” explained ZutaCore’s director of product management Timothy Shedd. “A pump then draws that liquid out and pushes it back to the evaporators, where the cycle begins again.”

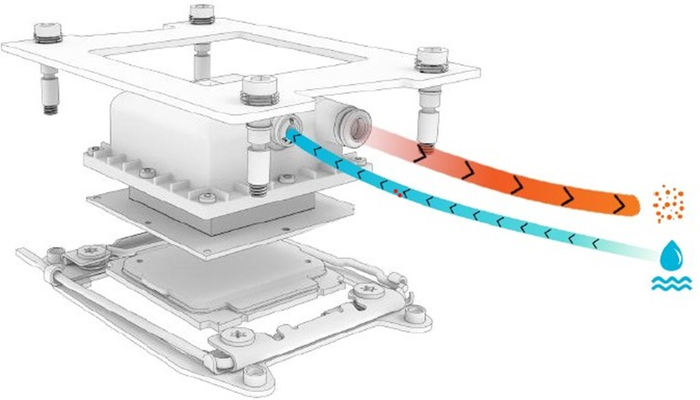


At the center of ZutaCore’s design is a part (pictured above) that replaces a chip’s heatsink. It’s called an “Enhanced Nucleation Evaporator,” or ENE. Cool liquid is pumped into a chamber, where heat from the chip brings it to a boil. That vapor is pulled through the evaporators into an air-cooled condenser. Because the chamber is so small, and the flow is so constant, Rittal claims, a liquid flow of just 140 ml per minute is capable of cooling 400W.

Measurements such as this are prompting engineers and scientists to advocate abandoning the metric the industry currently uses to evaluate server and data center power, in the context of what’s required to cool them.

“This environment is completely different from an air-cooled environment,” stated Jimil Shah, an applications development engineer with 3M, whose myriad products include cooling liquids. “With air-cooled, you’ll be talking about rack density per square meter. But here, we are talking about kilowatts-per-liter.”

If that projection comes about as liquid cooling becomes inevitable (at least by Microsoft and Facebook’s reckoning), then here’s our new baseline, courtesy Rittal and ZutaCore: 0.35 kW/liter. That is if nucleation — a new word for the data center vernacular — could cool everything.
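The arithmetic behind that figure can be checked with a few lines of code. This sketch takes the quoted flow of 140 per minute to be milliliters of liquid, which is an assumption, and uses the 400W cooling load cited by Rittal. The function and key names are hypothetical. Read this way, the ratio of 0.35 corresponds to liters per minute of flow per kilowatt cooled, and its inverse, kilowatts per liter per minute, comes out closer to 2.9.

```python
def flow_metrics(flow_ml_per_min: float, heat_w: float) -> dict:
    """Relate a liquid flow rate to the heat load it is said to remove.

    Assumes the flow is given in milliliters per minute (an assumption
    about the quoted unit) and the heat load in watts.
    """
    flow_l_per_min = flow_ml_per_min / 1000.0
    heat_kw = heat_w / 1000.0
    return {
        # Liters per minute of flow needed for each kilowatt of heat.
        "l_per_min_per_kw": flow_l_per_min / heat_kw,
        # Kilowatts removed for each liter per minute of flow.
        "kw_per_l_per_min": heat_kw / flow_l_per_min,
        # Heat carried by each liter of fluid (watts * seconds per liter).
        "kj_per_liter": heat_w * 60.0 / flow_l_per_min / 1000.0,
    }


if __name__ == "__main__":
    m = flow_metrics(flow_ml_per_min=140.0, heat_w=400.0)
    for name, value in m.items():
        print(f"{name}: {value:.2f}")
```

The last value, the heat absorbed per liter of fluid, is the quantity a two-phase system exploits: most of it is taken up as the fluid boils rather than as it warms.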
