Amazon Web Services Unveils Custom Machine Learning Inference Chip

Says it designed Inferentia because GPU makers have focused much attention on training but too little on inference

Turns out Amazon’s cloud business had not one but two custom-chip announcements queued up for re:Invent.

Three days after launching cloud server instances running on its own Arm chips, Amazon Web Services announced that it’s also designed its own processors for inference in machine learning applications. AWS CEO Andy Jassy announced the inference chip, called AWS Inferentia, during his Wednesday morning keynote at the cloud giant’s big annual conference in Las Vegas.

AWS competitor Google Cloud Platform announced its first custom machine learning chip, the Tensor Processing Unit, in 2016. By that point, it had been running TPUs in its data centers for about one year, Google said.

Google is now on the third generation of TPU, which it has been offering to customers as a cloud service. Microsoft Azure, Amazon’s biggest rival in the cloud market, has yet to introduce its own processor. All three players offer Nvidia GPUs for machine learning workload acceleration as a service; AWS and Azure also offer FPGAs for machine learning.

Like Amazon’s custom Arm processor, Inferentia was designed with the help of engineers from Annapurna Labs, the Israeli startup Amazon acquired in 2015.

AWS is planning to offer Inferentia to customers, but it will be a very different offering from Google’s. Unlike TPU, which is designed for training machine learning models, Inferentia is designed for inference, which is the decision making a system does once it’s been trained. It’s the part that recognizes a cat in an image after the model has been trained by crunching through thousands of various tagged cat photos.
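To make the training-versus-inference split concrete, here is a minimal sketch in plain Python. It is not AWS or Google code: the feature vectors, the labels, and the `train` and `infer` helpers are all hypothetical, and the "model" is just a nearest-centroid classifier standing in for a real neural network.

```python
# Toy illustration of training vs. inference (hypothetical data, not AWS code).

def train(samples):
    """Training: crunch through labeled examples to build a model.

    The 'model' here is simply the mean feature vector (centroid) per label.
    """
    sums, counts = {}, {}
    for features, label in samples:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [v / counts[label] for v in acc] for label, acc in sums.items()}


def infer(model, features):
    """Inference: apply the already-trained model to a new, unlabeled input."""
    def squared_distance(centroid):
        return sum((a - b) ** 2 for a, b in zip(centroid, features))
    return min(model, key=lambda label: squared_distance(model[label]))


# Made-up two-number "image features" with tags, standing in for tagged photos.
labeled = [
    ([0.9, 0.8], "cat"), ([0.8, 0.9], "cat"),
    ([0.1, 0.2], "not_cat"), ([0.2, 0.1], "not_cat"),
]

model = train(labeled)                   # done once, up front
prediction = infer(model, [0.85, 0.75])  # done for every new input
print(prediction)
```

Training runs once over the whole labeled set, while `infer` runs again for every new input, which is why the two phases can favor different hardware.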

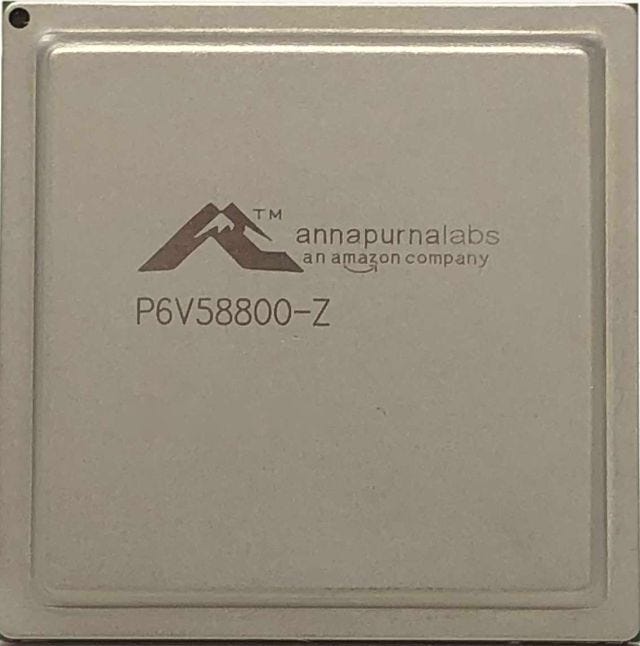

AWS's Inferentia processor for machine learning inference, built by Annapurna Labs (Source: AWS VP and distinguished engineer James Hamilton's Perspectives blog)

Companies making accelerator processors for machine learning – the biggest one being Nvidia – have focused most of their attention on optimizing processors for training, Jassy said. That’s why AWS decided it would focus on designing a better inference chip.

He did not share any details about the chip’s design or performance and didn’t say when it would become available to customers. On its website, the company said each Inferentia chip “provides hundreds of TOPS (tera operations per second) of inference throughput… For even more performance, multiple AWS Inferentia chips can be used together to drive thousands of TOPS of throughput.”

“It’ll be available for you on all the EC2 instance types as well as in SageMaker, and you’ll be able to use it with Elastic Inference,” Jassy said. SageMaker is an AWS managed service for building and training machine learning models.

Elastic Inference is a new AWS feature Jassy announced Wednesday. It lets users automatically scale the inference acceleration capacity they have deployed in the cloud to match demand.

Automatic scaling hasn’t been available before with P3 instances, the most popular instances for machine learning on Amazon’s cloud, according to Jassy. Without it, users have had to provision enough P3 capacity for peak demand, paying for unused capacity when their systems aren’t running at that peak.

That has resulted in average utilization of GPUs on P3 instances of 10 to 30 percent, Jassy said.
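The arithmetic behind that complaint can be sketched in a few lines of Python. The hourly rate and hour count below are hypothetical placeholders, not AWS prices, and `peak_provisioning_waste` is an illustrative helper; only the 10 and 30 percent utilization figures come from Jassy's remarks.

```python
def peak_provisioning_waste(hourly_rate, hours, utilization):
    """Return (total_cost, wasted_cost) for capacity sized to peak demand.

    utilization is the average fraction of provisioned capacity actually used.
    """
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    total = hourly_rate * hours
    wasted = total * (1.0 - utilization)
    return total, wasted


HYPOTHETICAL_RATE = 10.0  # dollars per hour; illustrative only, not an AWS price
HOURS = 100               # arbitrary billing window for the example

for utilization in (0.10, 0.30):  # the range Jassy cited
    total, wasted = peak_provisioning_waste(HYPOTHETICAL_RATE, HOURS, utilization)
    print(f"{utilization:.0%} utilized: ${wasted:,.0f} of ${total:,.0f} pays for idle capacity")
```

At the utilization levels Jassy cited, 70 to 90 percent of the spend in this simplified model goes to capacity that sits idle, whatever the actual price.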

Elastic Inference can be attached to any EC2 instance in AWS and scale up or down as needed, potentially saving users a lot of money. It can detect the machine learning framework used by the customer, find pieces that would benefit most from hardware acceleration, and move them to an accelerator automatically, Jassy said.

For now, that accelerator will be an Nvidia GPU. Once Amazon adds the Inferentia option, it expects its own chip to result in additional “10x” cost improvement over GPUs used with Elastic Inference, according to Jassy.
