Nvidia Launches Next-Generation Blackwell GPUs Amid AI ‘Arms Race’

At GTC, Nvidia CEO Jensen Huang also showcased new AI supercomputers and AI software to power surging workloads.

Nvidia on Monday (March 18) announced its next-generation Blackwell architecture GPUs, new supercomputers, and new software that will make it faster and easier for enterprises to build and run generative AI and other energy-intensive applications.

The new family of Blackwell GPUs offers 20 petaflops of AI performance on a single GPU and will allow organizations to train AI models four times faster, improve AI inferencing performance by 30 times, and do it with up to 25 times better energy efficiency than Nvidia’s previous-generation Hopper architecture chips, said Ian Buck, Nvidia’s vice president of hyperscale and HPC, in a media briefing.

GTC Product Showcase

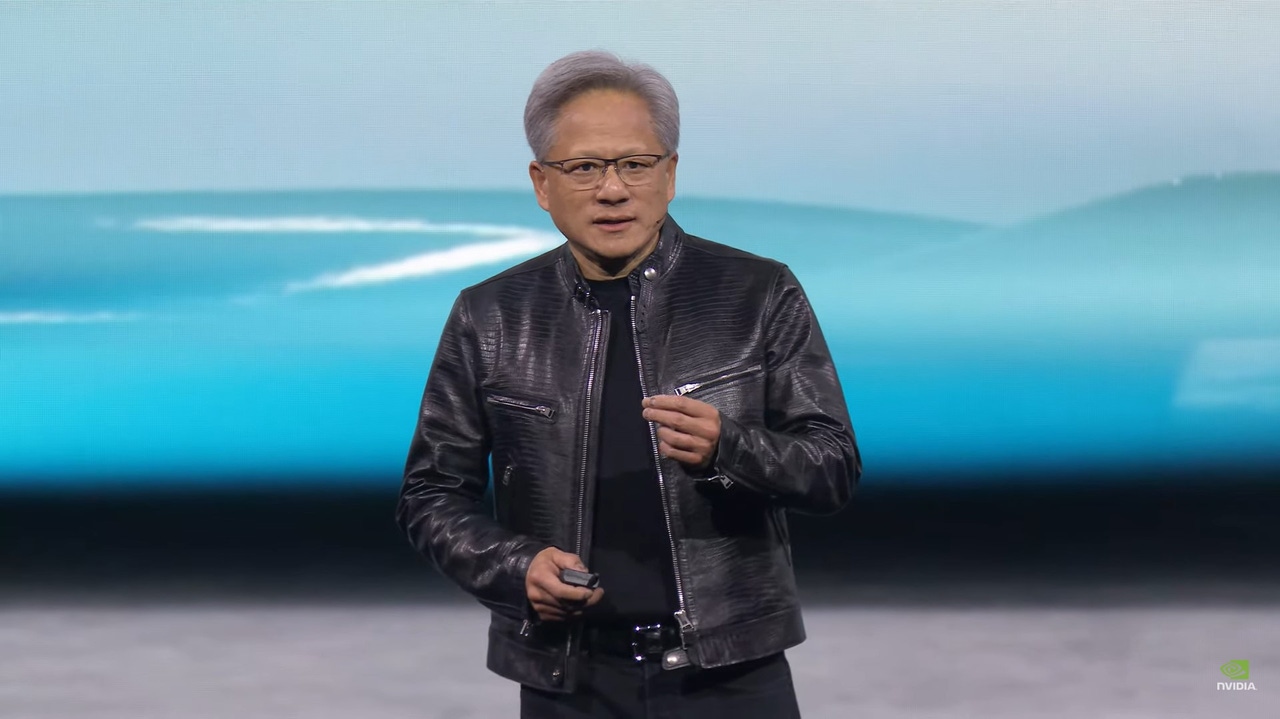

Nvidia CEO Jensen Huang unveiled Blackwell and other innovations during a keynote speech on Monday to kick off Nvidia’s 2024 GTC developer conference in San Jose, California.

“We created a processor for the generative AI era,” he told a packed crowd.

Blackwell unifies two dies into one GPU. It features 208 billion transistors and is twice the size of Nvidia’s Hopper GPUs, Huang said.

“The two dies think it’s one chip,” he said. “There’s 10 TB/s of data between it. These two sides of the Blackwell chip have no clue which side they’re on. There’s no memory locality issues. No cache issues. It’s just one giant chip, and so when we were told Blackwell’s ambitions were beyond the limits of physics, the engineers said, ‘So what?’ And so this is what happened.”

New Blackwell GPUs include the Nvidia GB200 Grace Blackwell Superchip, which connects two new Nvidia Blackwell-based B200 GPUs and the existing Nvidia Grace CPU to deliver 40 petaflops of AI performance.

During his speech, Huang also announced new hardware: the Nvidia GB200 NVL72, a liquid-cooled, rack-scale server system that features 36 Grace Blackwell Superchips and serves as the foundation of forthcoming Nvidia SuperPOD AI Supercomputers designed to support large, trillion-parameter-scale AI models.

Amazon Web Services, Google Cloud and Oracle Cloud Infrastructure will make GB200 NVL72 instances available on the Nvidia DGX Cloud AI supercomputer cloud service later this year, the company said.

Nvidia also released a new version of its AI software, AI Enterprise 5.0, which features new Nvidia ‘NIM’ microservices – a set of pre-built containers, standard APIs, domain-specific code, and optimized inference engines that make it much faster and easier for enterprises to develop AI-powered enterprise applications and run AI models in the cloud, data centers or even GPU-accelerated workstations.

NIM can cut deployment times from weeks to minutes, the company said.

“It’s the runtime you need to run your model in the most optimized, enterprise-grade, secure, compatible way, so you can just build your enterprise application,” said Manuvir Das, Nvidia’s vice president of enterprise computing, during a media briefing before the keynote.

Nvidia Seeks to Increase its AI Market Share Lead

Analysts say Nvidia’s latest announcements are significant, as the company aims to further bolster its leadership position in the lucrative AI chip market.

Nvidia CEO Jensen Huang holds up the new Blackwell GPU (left) and Nvidia's previous Hopper GPU (right).

GPUs and faster hardware are in high demand as enterprises, cloud providers, and other data center operators race to add more data center capacity to power AI and high-performance computing (HPC) workloads, such as computer-aided drug design and electronic design automation.

“These announcements shore up Nvidia’s leadership position, and it shows the company continues to innovate,” Jim McGregor, founder and principal analyst at Tirias Research, told Data Center Knowledge.

Matt Kimball, vice president and principal analyst at Moor Insights & Strategy, agrees, saying Nvidia continues to improve upon its AI ecosystem that spans everything from chips and hardware to frameworks and software. “The bigger story is that Nvidia drives the AI journey, from a software, tooling, and hardware perspective,” he said.

Intense Competition

Nvidia dominates the AI chip market, with its GPUs capturing about 85% of it, while other chipmakers – including AMD, Intel, Google, AWS, Microsoft, and Cerebras – have captured 15%, according to Karl Freund, founder and principal analyst of Cambrian AI Research.

While startup Cerebras competes with Nvidia at the very high end of the market with its latest wafer-sized chip, AMD has become a significant competitor in the GPU space with the December release of its AMD Instinct MI300 GPUs, McGregor said.

Intel, for its part, currently has two AI chip offerings with its Intel Data Center GPU Max Series and Gaudi accelerators. Intel plans to consolidate its AI offerings into a single AI chip in 2025, McGregor said.

In its December launch, AMD claimed that its MI300 GPUs were faster than Nvidia’s H100 and forthcoming H200 GPU, which is expected during the second quarter of this year. But Blackwell will allow Nvidia to leapfrog AMD in performance, McGregor said. How much faster won’t be determined until benchmarks are released, Freund added.

But the Blackwell GPUs are set to provide a huge step forward in performance, Kimball said.

“This is not an incremental improvement, it’s leaps forward,” he said. “A 400% better training performance over Nvidia’s current generation of chips is absolutely incredible.”

Kimball expects AMD will answer back, however. “It’s a GPU arms race,” he said. “AMD has a very competitive offering with the MI300, and I would fully expect AMD will come out with another competitive offering here before too long.”

More Blackwell GPU Details

The Blackwell architecture GPUs will enable AI training and real-time inferencing of models that scale up to 10 trillion parameters, Nvidia executives said.

Today, there are very few models that reach the trillions of parameters, but that will begin to increase as models get larger and as the industry starts moving toward video models, McGregor of Tirias Research noted.

“The point is with generative AI, you need lots of processing, tons of memory bandwidth and you need to put it in a very efficient system,” McGregor said. “For Nvidia, it’s about continuing to scale up to meet the needs of these large language models.”

According to an Nvidia spokesperson, the Blackwell architecture GPUs will come in three configurations:

The HGX B200, which will deliver up to 144 petaflops of AI performance in an eight-GPU configuration. It supports x86-based generative AI platforms and networking speeds of up to 400 Gb/s through Nvidia’s newly announced Quantum-X800 InfiniBand and Spectrum-X800 Ethernet networking switches.

The HGX B100, which will deliver up to 112 petaflops of AI performance in an eight-GPU baseboard and can be used to upgrade existing data center infrastructure running Nvidia Hopper systems.

The GB200 Grace Blackwell Superchip, which delivers 40 petaflops of AI performance and 900 GB/s of bidirectional bandwidth and can scale to up to 576 GPUs.
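To compare the three configurations on a like-for-like basis, the quoted totals can be divided by the number of GPUs in each. The short Python sketch below does only that arithmetic, using the figures in this article (the two-GPU count for the GB200 Superchip comes from the description earlier in the story). It assumes the quoted petaflops figures are directly comparable, which the article does not confirm, since vendors often quote peak numbers at different precisions.

```python
# Figures quoted in the article: (total petaflops of AI performance, GPU count).
# Assumption: the totals are measured the same way, which the article does not state.
configs = {
    "HGX B200": (144, 8),
    "HGX B100": (112, 8),
    "GB200 Superchip": (40, 2),
}


def per_gpu_petaflops(total_petaflops, gpu_count):
    """Return the quoted AI performance divided evenly across the GPUs."""
    return total_petaflops / gpu_count


per_gpu = {name: per_gpu_petaflops(*spec) for name, spec in configs.items()}

for name, petaflops in per_gpu.items():
    print(f"{name}: {petaflops:g} petaflops per GPU")
```

On these numbers, the GB200 Superchip works out to 20 petaflops per GPU, matching the single-GPU figure Nvidia cited, while the HGX B200 and HGX B100 come to 18 and 14 petaflops per GPU.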

Nvidia said the Blackwell architecture features six innovative technologies that result in improved performance and better energy efficiency. That includes a fifth-generation NVLink, which delivers 1.8 TB/s bidirectional throughput per GPU and a second-generation transformer engine that can accelerate training and inferencing, the company said.

NVLink connects GPUs to GPUs, so the programmer sees one integrated GPU, while the second-generation transformer engine allows for more efficient processing through 4-bit floating point format, Freund said.
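To give a sense of how coarse a 4-bit floating point number is, the Python sketch below decodes all 16 bit patterns of one such format. It assumes the E2M1 layout (1 sign bit, 2 exponent bits, 1 mantissa bit, exponent bias of 1) described in the Open Compute Project's Microscaling Formats specification. The article does not say which layout Blackwell uses or how values are scaled in hardware, so this is an illustration of the general idea rather than a description of Nvidia's transformer engine. The function names are hypothetical.

```python
def decode_e2m1(code):
    """Decode a 4-bit E2M1 pattern (assumed layout: sign, 2 exponent bits, 1 mantissa bit)."""
    sign = -1.0 if code & 0b1000 else 1.0
    exponent = (code >> 1) & 0b11
    mantissa = code & 0b1
    if exponent == 0:
        # Subnormal range: no implicit leading 1, so the only values are 0 and 0.5.
        magnitude = mantissa * 0.5
    else:
        # Normal range: implicit leading 1, exponent bias of 1.
        magnitude = (1 + mantissa * 0.5) * 2.0 ** (exponent - 1)
    return sign * magnitude


# The eight non-negative values the format can represent.
positive_values = [decode_e2m1(code) for code in range(8)]
all_values = [decode_e2m1(code) for code in range(16)]


def quantize(x):
    """Round x to the nearest representable value (simple nearest-value rounding)."""
    return min(all_values, key=lambda v: abs(v - x))


print(positive_values)
print(quantize(2.4), quantize(5.2), quantize(100.0))
```

Under this assumed layout there are only eight distinct magnitudes, topping out at 6, which is why formats like this are generally paired with per-block scaling factors in practice.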

“It’s all part of the optimization story. It’s all about getting work done faster,” he said.

Huang touted GB200 NVL72’s performance and energy efficiency and the fact that the system offers 720 petaflops of performance for training – nearly one exaflop – in one rack.
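The rack-level figure can be broken down using numbers quoted elsewhere in this article: 36 Grace Blackwell Superchips per rack, each connecting two B200 GPUs. The Python sketch below is only that arithmetic; the variable names are illustrative.

```python
# Figures from the article.
SUPERCHIPS_PER_RACK = 36
GPUS_PER_SUPERCHIP = 2
RACK_TRAINING_PETAFLOPS = 720
PETAFLOPS_PER_EXAFLOP = 1000

gpus_per_rack = SUPERCHIPS_PER_RACK * GPUS_PER_SUPERCHIP
training_petaflops_per_gpu = RACK_TRAINING_PETAFLOPS / gpus_per_rack
fraction_of_exaflop = RACK_TRAINING_PETAFLOPS / PETAFLOPS_PER_EXAFLOP

print(f"GPUs per rack: {gpus_per_rack}")
print(f"Training petaflops per GPU: {training_petaflops_per_gpu:g}")
print(f"Fraction of one exaflop: {fraction_of_exaflop:.2f}")
```

That gives 72 GPUs per rack (consistent with the "72" in the product name) and 10 petaflops of training performance per GPU, half of the 20 petaflops headline figure. The article does not explain the gap; a plausible reading is that the two numbers are quoted at different numeric precisions.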

For example, organizations can train a 1.8 trillion-parameter GPT model in 90 days using 8,000 Hopper GPUs drawing 15 MW of power. With Blackwell, organizations could train the same model in the same amount of time using just 2,000 Blackwell GPUs drawing only 4 MW of power, he said.
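Huang's comparison can be turned into total energy figures with a few lines of arithmetic. The Python sketch below assumes the quoted megawatt figures are a constant average power draw over the full 90 days, which is a simplification the article does not address.

```python
# Figures from Huang's example; constant power draw over the run is an assumption.
TRAINING_DAYS = 90
HOURS_PER_DAY = 24

hopper = {"gpus": 8000, "power_mw": 15}
blackwell = {"gpus": 2000, "power_mw": 4}

training_hours = TRAINING_DAYS * HOURS_PER_DAY


def energy_mwh(system):
    """Total energy in megawatt-hours at constant power for the whole run."""
    return system["power_mw"] * training_hours


def kw_per_gpu(system):
    """System-level power divided across GPUs, in kilowatts."""
    return system["power_mw"] * 1000 / system["gpus"]


hopper_mwh = energy_mwh(hopper)
blackwell_mwh = energy_mwh(blackwell)
energy_ratio = hopper_mwh / blackwell_mwh
gpu_ratio = hopper["gpus"] / blackwell["gpus"]

print(f"Hopper: {hopper_mwh:,} MWh, {kw_per_gpu(hopper):g} kW per GPU")
print(f"Blackwell: {blackwell_mwh:,} MWh, {kw_per_gpu(blackwell):g} kW per GPU")
print(f"Energy reduction: {energy_ratio:g}x with {gpu_ratio:g}x fewer GPUs")
```

On these assumptions, the Hopper run consumes 32,400 MWh against 8,640 MWh for Blackwell, a 3.75-fold reduction. System power per GPU is roughly the same in both cases (about 1.9 kW versus 2 kW), so the saving in this example comes from needing a quarter as many GPUs.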

“Our goal is to continuously drive down the cost and energy associated with computing, so we can continue to expand and scale up the computation that we have to do to train the next-generation models,” Huang said.

Blackwell Availability

Pricing of Blackwell GPUs has not been announced. Nvidia expects Blackwell-based products to start becoming available from partners later this year.

Nvidia on Monday also announced two new DGX SuperPOD AI Supercomputers – the DGX GB200 and DGX B200 systems – which will be available from partners later this year, the company said.

Cisco, Dell Technologies, Hewlett Packard Enterprise, Lenovo, and Supermicro are among the hardware makers that plan to ship servers based on Blackwell GPUs, Nvidia said.

“The whole industry is gearing up for Blackwell,” Huang said.
