Hypervisor 101: Understanding Server Virtualization

Server virtualization has transformed the data center industry. Here are the basic things you need to understand about the technology that enables it.

Even in their most basic form, virtualization technologies have transformed the data center at its core: servers, storage, and networks. Here we’ll look specifically at the hypervisor, a tried-and-true technology that has made server multi-tenancy possible for years. Multi-tenancy is the ability of a single server to run multiple computing workloads as though they were running on separate servers.

First, here are some basic things to understand about the modern hypervisor:

At a very high level, a hypervisor is an intelligent piece of software that abstracts hardware resources and lets a single server host multiple tenants. These virtual servers (or services) are software-based virtual machines, or VMs, that access the server’s underlying physical resources and share them with one another.

Key definitions:

Type I Hypervisor. This type of hypervisor (pictured above) is deployed as a bare-metal installation, meaning it is the first piece of software installed on the server. It communicates directly with the underlying physical hardware, virtualizes those resources, and delivers them to the running VMs. This is the preferred method for many production systems.

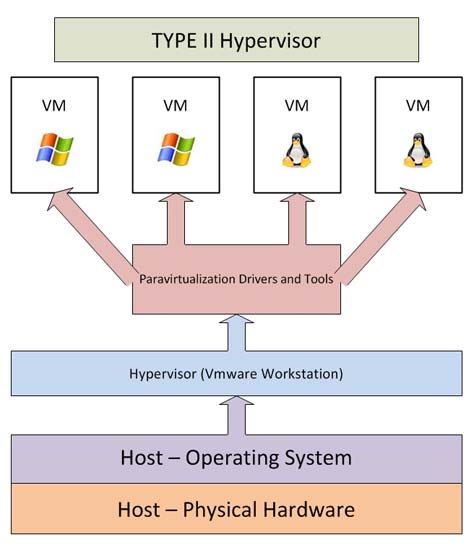

Type II Hypervisor. This model (shown below) is also known as a hosted hypervisor. Instead of being installed on bare metal, the software runs on top of an operating system that is already running. For example, a server running Windows Server 2008 R2 can have VMware Workstation 8 installed on top of that OS. Although computing resources pass through an extra software layer before they reach the VM, the added latency is minimal, and with today’s software enhancements the hypervisor can still perform well.

Guest Machine. A guest machine, the VM, is the workload installed on top of the hypervisor. This can be a virtual appliance, operating system, or another type of virtualization-ready workload. This guest machine “believes” that it is its own unit with its own dedicated resources. This is how virtualization enables you to use a single physical machine to run multiple workloads. All this happens while resources are intelligently shared between the VMs.

Host Machine. This is the physical server, or host. Virtualization may encompass several components – SAN, LAN, wiring, and so on – but here we are focusing on the resources located on the physical server. The key ones are RAM and CPU, divided between VMs and distributed as the administrator sees fit. A machine needing more RAM (a domain controller for example) would receive that allocation, while a less important VM (a licensing server for instance) would have fewer resources. With today’s hypervisor technologies, many of these resources can be dynamically allocated.
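
To make the administrator’s allocation decision concrete, here is a minimal Python sketch that statically splits a host’s RAM across VMs by weight. The weights, the 4 GB hypervisor reserve, and the VM names (`dc01`, `files01`, `license01`) are hypothetical; real hypervisors expose their own allocation settings and can also adjust memory dynamically, which this static split ignores.

```python
from dataclasses import dataclass


@dataclass
class VMRequest:
    name: str
    weight: int  # hypothetical relative priority chosen by the administrator


def allocate_ram(host_ram_gb: int, reserved_gb: int, vms: list[VMRequest]) -> dict[str, int]:
    """Statically split host RAM (minus a hypervisor reserve) across VMs by weight."""
    available = host_ram_gb - reserved_gb
    total_weight = sum(vm.weight for vm in vms)
    if available <= 0 or total_weight <= 0:
        raise ValueError("nothing to allocate")
    # Proportional share, rounded down to whole GB.
    shares = {vm.name: available * vm.weight // total_weight for vm in vms}
    # Hand out the GB lost to rounding, highest-weight VMs first.
    leftover = available - sum(shares.values())
    for vm in sorted(vms, key=lambda v: v.weight, reverse=True)[:leftover]:
        shares[vm.name] += 1
    return shares


if __name__ == "__main__":
    vms = [VMRequest("dc01", 3), VMRequest("files01", 2), VMRequest("license01", 1)]
    print(allocate_ram(host_ram_gb=64, reserved_gb=4, vms=vms))
```

With a 64 GB host and a 4 GB reserve, the domain controller gets 30 GB, the file server 20 GB, and the licensing server 10 GB.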

Paravirtualization Tools. After the guest VM is installed on top of the hypervisor, a set of tools is usually installed on that VM. They provide a set of operations and drivers for the guest VM to run more optimally. For example, although natively installed drivers for a NIC will work, paravirtualized NIC drivers will communicate with the underlying physical layer much more efficiently and enable advanced networking configurations.

Containers. This technology is increasingly used on top of traditional hypervisors like VMware vSphere or XenServer, or instead of them. Operating-system-level virtualization is a powerful tool for creating isolated multi-tenant environments. Container technologies such as Docker add a new level of abstraction and automation to OS-level virtualization on Linux servers. Application containers abstract the OS-level virtualization process, giving administrators greater control over provisioning services, stronger security and process restriction, and more intelligent resource isolation. This matters because you can, for example, run a Microsoft IIS container without dedicating an entire virtual machine or OS license to it: you’re running just the IIS container. Similarly, Citrix NetScaler CPX runs a mini-ADC within a containerized environment.

More and more integration is happening. Microsoft Azure has added support for Docker containers on Linux VMs, enabling the broad ecosystem of “Dockerized” Linux applications to run within the Azure cloud. As cloud adoption grows, container systems using Docker can also be integrated with platforms like Chef, Puppet, OpenStack, and Amazon Web Services. Red Hat is in the mix too, incorporating Linux tools such as systemd and SELinux into Docker.

All these tools allow you to extend your container system beyond your own data center. New capabilities let you build a hybrid cloud container ecosystem spanning your data center and, for example, AWS. Finally, major hypervisor vendors are offering advanced container services as well. This includes the big three: VMware vSphere, Microsoft Hyper-V, and Citrix XenServer.

Now let’s look at what companies behind the three top hypervisors have been up to lately:

VMware vSphere

Released in April 2018, vSphere 6.7 builds on 6.5 (released in late 2016) and focuses on improving customer experience in terms of simplicity, efficiency, and speed.

The vCenter Server Appliance is now easier to use and manage and features major performance improvements over 6.5 – 2X operations per second, 3X reduction in memory usage, 3X faster DRS-related operations, according to VMware.

VMware has also shortened the time it takes to update ESXi hosts (ESXi is VMware’s Type I hypervisor). An update now requires only one reboot instead of two, and the new vSphere Quick Boot feature restarts ESXi without rebooting the physical host.

There’s also now a modern, simplified HTML5-based graphical user interface.

VMware made many more major enhancements in the areas of security, support for many more types of workloads (HPC, machine learning, in-memory, cloud-native, etc.), and hybrid-cloud capabilities. You can read about them all in greater detail here.

Microsoft Hyper-V

Back when Hyper-V first came out with Windows Server 2008, it was a solid hypervisor technology. That said, it lacked some of the enterprise features that VMware offered, but it worked well as a simple hypervisor with decent integration. Fast forward to the latest edition of Hyper-V, on Windows Server 2016:

Backups, checkpoints, and recovery. There was a lot of feedback on this topic over the years. So, instead of relying on the Volume Shadow Copy Service (VSS) for sometimes shaky backups, Windows Server 2016 makes change tracking a feature of Hyper-V itself. This makes it much easier for third-party backup vendors to support Hyper-V.
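
The value of built-in change tracking is that a backup tool can ask which blocks changed instead of rescanning the whole virtual disk. The following minimal Python sketch shows that general idea with a hypothetical `ChangeTrackedDisk` class, assuming fixed-size blocks held in memory; it is an illustration, not how Hyper-V actually stores or exposes its tracking data.

```python
class ChangeTrackedDisk:
    """Toy virtual disk that records which blocks changed since the last backup.

    Illustrates the general idea of hypervisor-side change tracking only; it is
    not Hyper-V's actual on-disk format or API.
    """

    def __init__(self, num_blocks: int, block_size: int = 4096):
        self.block_size = block_size
        self.blocks = [bytes(block_size) for _ in range(num_blocks)]
        # Nothing has been backed up yet, so every block counts as changed.
        self.dirty: set[int] = set(range(num_blocks))

    def write(self, index: int, data: bytes) -> None:
        if len(data) != self.block_size:
            raise ValueError("this sketch only supports whole-block writes")
        self.blocks[index] = data
        self.dirty.add(index)  # tracked at write time, so no later disk scan is needed

    def incremental_backup(self) -> dict[int, bytes]:
        """Return only the blocks changed since the previous call, then reset tracking."""
        changed = {i: self.blocks[i] for i in sorted(self.dirty)}
        self.dirty.clear()
        return changed


if __name__ == "__main__":
    disk = ChangeTrackedDisk(num_blocks=8)
    print(len(disk.incremental_backup()))  # first backup is a full copy of all 8 blocks
    disk.write(3, b"x" * 4096)
    disk.write(5, b"y" * 4096)
    print(sorted(disk.incremental_backup()))  # only blocks 3 and 5
```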

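Rolling cluster upgrades. Upgrading a Hyper-V cluster from Windows Server 2012 R2 to 2016 is much easier, because nodes can be upgraded one at a time while the cluster keeps running its workloads.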
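
The rolling-upgrade idea is that each node is drained, upgraded, and returned to the cluster while the remaining nodes keep serving VMs. Below is a hedged Python sketch of that loop; the `Node` class, the least-loaded placement rule, and the use of the release year as a version number are illustrative assumptions, not Microsoft’s rolling-upgrade tooling.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    os_version: int  # the release year stands in for the OS version
    vms: list[str] = field(default_factory=list)


def rolling_upgrade(nodes: list[Node], target: int) -> int:
    """Drain, upgrade, and return each node in turn so VMs keep running.

    Returns the oldest OS version left in the cluster, which caps the cluster's
    functional level: a mixed-version cluster keeps running at the old level.
    """
    if len(nodes) < 2:
        raise ValueError("need at least one other node to drain VMs to")
    for node in nodes:
        if node.os_version >= target:
            continue
        peers = [n for n in nodes if n is not node]
        # "Live-migrate" each VM to the currently least-loaded peer.
        for vm in list(node.vms):
            min(peers, key=lambda n: len(n.vms)).vms.append(vm)
            node.vms.remove(vm)
        node.os_version = target  # the now-empty node can be reinstalled safely
    return min(n.os_version for n in nodes)


if __name__ == "__main__":
    cluster = [Node("hv1", 2012, ["dc01", "web01"]), Node("hv2", 2012, ["sql01"]), Node("hv3", 2012)]
    print(rolling_upgrade(cluster, 2016))
```

At no point does a VM sit on a node that is being upgraded, which is what lets the workloads stay online during the upgrade.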

Virtual machine (VM) compute resiliency. Hyper-V now handles transient failures much more gracefully. For example, if a VM loses its storage connection, it is paused instead of crashing. You can set thresholds, let VMs keep running in isolated mode if a host loses its connection to the cluster, and even specify exactly when a failover should happen.
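
The behavior described above can be summarized as a small decision function. This Python sketch is a simplified model, not Hyper-V’s implementation: the state names are hypothetical, and the 240-second resiliency period is an assumed default that an administrator would tune.

```python
from enum import Enum


class VMState(Enum):
    RUNNING = "running"
    PAUSED_CRITICAL = "paused (storage lost)"
    ISOLATED = "running isolated"
    FAILED_OVER = "failed over"


def next_state(storage_ok: bool, cluster_ok: bool, isolated_seconds: float,
               resiliency_period: float = 240.0) -> VMState:
    """Decide what happens to a VM after a transient failure (simplified model).

    resiliency_period: how long a host may be cut off from the cluster before
    its VMs are failed over; 240 s is an assumption, not a verified setting.
    """
    if not storage_ok:
        return VMState.PAUSED_CRITICAL  # pause instead of letting the guest crash
    if not cluster_ok:
        if isolated_seconds < resiliency_period:
            return VMState.ISOLATED  # keep running and wait for connectivity to return
        return VMState.FAILED_OVER  # threshold exceeded: restart the VM elsewhere
    return VMState.RUNNING
```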

Storage Quality of Service (QoS). You can now set policies that control and monitor the IOPS consumed by VMs and services. Pretty cool.
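
One common way to enforce a maximum IOPS figure is a token bucket. The Python sketch below uses that generic rate-limiting technique purely for illustration; it is not the algorithm Hyper-V Storage QoS uses internally, and the `IopsLimiter` name is hypothetical.

```python
class IopsLimiter:
    """Token-bucket cap on a VM's I/O operations per second (generic technique)."""

    def __init__(self, max_iops: int):
        self.max_iops = max_iops
        self.tokens = float(max_iops)  # start full: allows up to one second of burst
        self.last = 0.0

    def allow(self, now: float) -> bool:
        """Return True if an I/O issued at time `now` (in seconds) may proceed."""
        elapsed = now - self.last
        self.last = now
        # Refill at max_iops tokens per second, never beyond one second's worth.
        self.tokens = min(self.max_iops, self.tokens + elapsed * self.max_iops)
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False  # over the cap: the I/O would be delayed or queued


if __name__ == "__main__":
    limiter = IopsLimiter(max_iops=100)
    # A VM trying 1,000 IOPS for 10 seconds gets roughly 100 IOPS plus the initial burst.
    admitted = sum(limiter.allow(i / 1000) for i in range(10_000))
    print(admitted)
```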

Shielded VMs. Here, you’re talking about some powerful security measures. Shielded VMs use Microsoft’s BitLocker encryption, Secure Boot, and a virtual TPM (Trusted Platform Module). They also require a new feature called the Host Guardian Service. The idea is to keep host administrators from accessing a VM’s contents, for example when the VM runs in the cloud. Once this is set up, a Shielded VM will only run on the hosts you specifically designate. The VM itself is encrypted, as is network traffic for features like Live Migration.

Windows and Hyper-V containers. The new OS from Microsoft supports both Windows Server Containers, which use shared OS files and memory, and Hyper-V containers, which have their own OS kernel files and memory.

What else is new in Hyper-V for Server 2016? Nano Server! This is a stripped-down edition of Windows Server optimized for hosting Hyper-V, running in a VM, or running a single application.

Citrix XenServer

As one of the leading open source hypervisors out there, XenServer continues to be a favorite for many. At the time of writing, the latest release was XenServer 7.5, which came out in May 2018.

The release focused to a great extent on better manageability and availability, more optimal storage management, and support for more use cases.

Citrix has quadrupled the maximum XenServer pool size, from 16 to 64 hosts. That means users now have fewer pools to manage and more flexibility with in-pool migration, storage sharing, and high availability.
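
The practical effect of the larger pool limit is easiest to see as arithmetic: the minimum number of pools for N hosts is N divided by the pool size, rounded up. A short Python check, using made-up fleet sizes:

```python
import math


def pools_needed(hosts: int, max_pool_size: int) -> int:
    """Minimum number of pools needed to hold `hosts` servers."""
    return math.ceil(hosts / max_pool_size)


if __name__ == "__main__":
    for hosts in (48, 200, 640):  # hypothetical fleet sizes
        print(f"{hosts} hosts: {pools_needed(hosts, 16)} pools at 16/pool, "
              f"{pools_needed(hosts, 64)} at 64/pool")
```

For a 200-host estate, this gives 13 pools under the old limit and 4 under the new one.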

On the storage front, the release added an experimental feature, enabling thin provisioning for block storage. Thin provisioning on SAN, achieved by introducing GFS2 Storage Repositories (GFS2 is a Linux-based shared-disk file system for block storage), should help users reduce storage costs, the company said. It allows them to allocate storage space as needed.
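
Thin provisioning means a virtual disk advertises its full size to the guest while real capacity is handed out only as data is written. The sketch below models that with hypothetical `StoragePool` and `ThinVolume` classes and an assumed 1 GB allocation unit; it illustrates the concept, not how XenServer’s GFS2 Storage Repositories work internally.

```python
class StoragePool:
    """Shared physical capacity that thin volumes draw from."""

    def __init__(self, capacity_gb: int):
        self.capacity_gb = capacity_gb
        self.used_gb = 0

    def take(self, gb: int) -> None:
        if self.used_gb + gb > self.capacity_gb:
            raise RuntimeError("storage pool exhausted")
        self.used_gb += gb


class ThinVolume:
    """Virtual disk whose 1 GB extents are backed by real storage only on first write."""

    def __init__(self, virtual_size_gb: int, pool: StoragePool):
        self.virtual_size_gb = virtual_size_gb  # size the guest sees
        self.pool = pool
        self.allocated: set[int] = set()  # extents already backed by physical space

    def write(self, offset_gb: int) -> None:
        if not 0 <= offset_gb < self.virtual_size_gb:
            raise ValueError("write beyond end of virtual disk")
        if offset_gb not in self.allocated:
            self.pool.take(1)  # allocate physical space only when first needed
            self.allocated.add(offset_gb)


if __name__ == "__main__":
    pool = StoragePool(capacity_gb=1000)
    disks = [ThinVolume(500, pool) for _ in range(4)]  # 2,000 GB promised from 1,000 GB
    for disk in disks:
        for gb in range(10):
            disk.write(gb)
    print(pool.used_gb)  # only the 40 GB actually written is consumed
```

Because the four 500 GB disks promise twice the pool’s physical capacity, actual usage still has to be monitored; the sketch simply raises an error when the pool runs out.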

The new GFS2 Storage Repositories effectively increase the maximum size of virtual disks (from 2TB to 16TB), which in turn expands the number of possible use cases. One of them, for example, is supporting large databases.

As with vSphere 6.7, there are many more enhancements in XenServer 7.5, described in detail here.

Final Thoughts

When it comes to virtualization, there’s really no stopping innovation. Moving forward, even organizations that already have virtualization deployed will be leveraging new features enabling greater levels of consolidation, efficiency, and cloud integration. For your own environments, never stay complacent and always test out new features that can positively impact your entire data center.
