What Has to Happen for Quantum Computing to Hit Mainstream?

Data Center World keynote: It's still early days for quantum computing, where the fundamental technology remains unsettled, and the nature of workloads is fuzzy.

August 23, 2021

If you stretch the timeline of quantum computing onto the timeline of IBM computers, we're somewhere between the vacuum-tube-powered machines of the 1940s and the models built on transistors, integrated circuits, and silicon of the 1960s. And we're much closer to the former.

"In some sense, we haven't even settled on the technology," Celia Merzbacher, executive director of the Quantum Economic Development Consortium, said. "We're not all on the same page with respect to what the transistor is going to be -- much less how the integrated circuit is going to work -- but we're benefiting a lot from those experiences from the semiconductor version of computing."

In her keynote on the state of play in quantum computing last week at Data Center World in Orlando, Merzbacher described a technology still in its infancy.

Progress in the field is closely tied to the number of qubits (the basic units of quantum information) engineers manage to get working in concert. A thousand logical qubits is thought to be the minimum for doing anything meaningful, but you need a million physical qubits to have 1,000 logical ones. That's because physical qubits are sensitive to vibration, and you need so many of them "in order to correct for the noise and the errors in qubits today," she explained.
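To put the error-correction overhead in concrete terms, here is a back-of-the-envelope sketch using only the two figures quoted above. The variable names are illustrative, and the resulting ratio is a rough rule of thumb implied by those figures, not a fixed property of any particular machine.

```python
# Figures as quoted in the keynote (rough estimates, not hardware specs).
logical_qubits_needed = 1_000        # minimum thought necessary for meaningful work
physical_qubits_needed = 1_000_000   # physical qubits required to get there

# Physical qubits spent on error correction per usable logical qubit.
overhead = physical_qubits_needed // logical_qubits_needed

print(f"Roughly {overhead:,} physical qubits per logical qubit")
```

In other words, under these estimates roughly a thousand noisy physical qubits stand behind every logical qubit that does useful work.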

"I think when we make certain milestones, things may go pretty quickly through the scaling and getting to that million qubit system where we can do something useful."

Cooling Quantum Computers: "Any Temperature Is Really the Enemy"

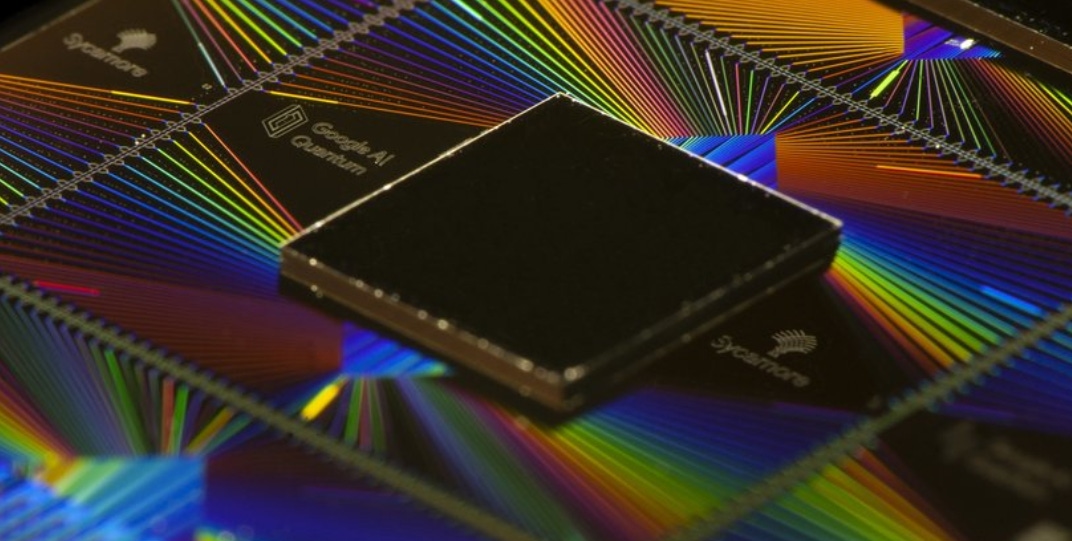

The quantum computers that tend to make headlines these days -- the ones built by IBM, Google, and Rigetti -- are based on superconducting qubits and require extremely low temperatures to operate: about -460F, or near absolute zero. Needless to say, cooling these machines is a major expense, especially given their large size.

As an example, Merzbacher pointed to the 10-by-6-foot cryogenic refrigeration system IBM is developing to house its 1,000-qubit quantum machine. "They're going to keep that big of a volume at absolute zero, or millikelvin temperatures," she said.

While little is known about how much power it takes to cool IBM's quantum machines, there is data on cooling Google's quantum computers. Alan Ho, who until last month headed product management at Google Quantum AI, joined Merzbacher's keynote via a recording and said it took 25kW to cool one of their quantum computers.

He added that the custom electronics running the processor need only 1kW at peak, meaning that despite the high cooling cost, their quantum computers are much more energy efficient than traditional computers given their speed. He said this was demonstrated in a 2019 comparison study, in which a Google Sycamore quantum machine and the Oak Ridge National Laboratory's Summit (currently the world's second-fastest supercomputer, according to Top500) ran the same complex calculation.

"The result was around a billion times speedup on Google Sycamore processor over the Summit supercomputer," he said. "When we think about it from an energy perspective, a Summit supercomputer takes on the order around 14MW total, whereas the Google processor takes around 25kW, meaning that from a calculation standpoint it's about a trillion times more energy efficient on the Google quantum Sycamore processor over Oak Ridge Summit."
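Ho's energy comparison can be reconstructed with simple arithmetic. The sketch below is not his calculation; it assumes energy is just power draw multiplied by run time and uses only the round figures from the quote, with illustrative variable names.

```python
# Round figures from the quote above (order-of-magnitude estimates).
speedup = 10**9                 # Sycamore finishes about a billion times faster
summit_power_w = 14_000_000     # Summit: about 14MW
sycamore_power_w = 25_000       # Sycamore: about 25kW

# Energy = power x time, so the energy ratio is the power ratio times the speedup.
power_ratio = summit_power_w / sycamore_power_w
energy_ratio = power_ratio * speedup

print(f"Power ratio:  {power_ratio:.0f}x")
print(f"Energy ratio: {energy_ratio:.1e}x")
```

Under these assumptions the ratio comes out at about 5.6 x 10^11, which is within a factor of two of the "about a trillion" in the quote.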

But Merzbacher said that several companies are working on designs that don't require such extreme cooling. The startup PsiQuantum is developing a photon-based quantum computer, for example, while Honeywell and another startup, IonQ, are working on "trapped ion" designs. In addition, the French startup Pasqal is developing a quantum computer using a "neutral atom" design.

Each of the technologies has its advantages and disadvantages, she said. "Some of them, like the trapped ions and neutral atoms, can be at room temperature. Although they all do better at low temperature because they're all very sensitive to noise, including thermal noise. So any temperature is really the enemy of a quantum system."

Early Promising Applications for Quantum Computers

Merzbacher stressed that quantum computers aren't going to replace conventional computers, but will primarily be used to run workloads that are impossible, or very difficult, to run on conventional computers.

"We don't really know what the killer app is going to be or what is going to be the area where they [quantum computers] have such an advantage that they're going to be very disruptive," she said. "Probably, even if we thought we knew, we would be wrong."

Although the exact workloads remain an unknown, computer scientists do have some ideas about areas where quantum computers will be valuable. One promising area, she said, is modeling biological processes. For example, scientists could use quantum computers to model energy states of chlorophyll to better understand how photosynthesis efficiently converts sunlight into energy.

"We haven't got a clue really how nature does that," Merzbacher said. "To be able to model systems like that takes a much different kind of computer. It just quickly scales beyond what a conventional computer can do."

Other areas include studying things like corrosion, to help industries design materials with desired qualities, as well as systems optimization, which will be useful for everything from helping the likes of Amazon and FedEx optimize routes for their massive fleets to helping financial institutions optimize investment portfolios.

"Machine learning is another area -- perhaps a little farther out -- where quantum computers may bring something to the table that current systems do not," she said.

Unfortunately, quantum computers are also expected to excel in breaking current encryption methods.

"Shor's algorithm is an algorithm for factoring prime numbers, and eventually it's believed that quantum computers are going to be able to run that algorithm and will actually break the prime number-based encryption standards that are widely used today," she said.
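For readers curious about what that means in practice: Shor's algorithm breaks a number that is the product of two primes into those primes by finding the period of a modular exponentiation. The toy sketch below runs the whole procedure classically on a tiny number, brute-forcing the period-finding step that a quantum computer is expected to speed up. The function names and the choice of 15 and 7 are illustrative assumptions, not anything presented in the keynote.

```python
from math import gcd

def find_order(a, n):
    """Smallest r > 0 with a**r % n == 1, found by brute force.

    This is the step a quantum computer would accelerate; it assumes
    gcd(a, n) == 1, otherwise the loop would never terminate.
    """
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r

def factor_via_order(n, a):
    """Try to split n using the order of a modulo n; return None on a bad guess."""
    shared = gcd(a, n)
    if shared != 1:
        return shared, n // shared      # lucky guess: a already shares a factor
    r = find_order(a, n)
    if r % 2 == 1:
        return None                     # odd order: try a different a
    half = pow(a, r // 2, n)
    if half == n - 1:
        return None                     # trivial square root: try a different a
    p = gcd(half - 1, n)
    return p, n // p

print(factor_via_order(15, 7))
```

For real key sizes the brute-force loop is hopeless on conventional hardware, which is why the encryption in use today is still considered safe for now.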

While quantum computers that will be able to break current encryption standards are thought to be 10 to 15 years away, it will take another 10 years or longer to migrate to a new, safer encryption standard, Merzbacher said, suggesting that it's probably a good idea to start figuring out what that standard is going to be now.
