Microsoft Reveals First Hardware Using Its New Compression Algorithm

The Corsica ASIC offloads compression and encryption to accelerate storage performance.

Two months ago, the engineering team that designs all the infrastructure for Microsoft Azure unveiled a new data compression algorithm that, according to the team, achieved compression ratios double those of zlib, the widely used compression library found in Linux, Mac OS X, and iOS, among other systems.
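Compression ratio is usually reported as original size divided by compressed size, so doubling the ratio means halving the compressed output. Below is a minimal sketch of measuring that ratio for the zlib baseline with Python's standard `zlib` module. The telemetry-like sample records and the chosen compression levels are made-up assumptions. Zipline itself is not available as a Python library, so this shows only the baseline side of Microsoft's comparison.

```python
import zlib

def compression_ratio(data: bytes, level: int = 6) -> float:
    """Return original size divided by zlib-compressed size (higher is better)."""
    compressed = zlib.compress(data, level)
    return len(data) / len(compressed)

# Hypothetical telemetry-like sample: repetitive JSON-style sensor records.
records = b"".join(
    b'{"device": "sensor-%03d", "temp_c": 21.5, "status": "ok"}\n' % (i % 50)
    for i in range(2000)
)

# zlib levels trade speed for ratio: 1 is fastest, 9 compresses hardest.
for level in (1, 6, 9):
    print(f"zlib level {level}: {compression_ratio(records, level):.1f}x")
```

Real telemetry is far less uniform than this synthetic sample, so the printed ratios say nothing about what either zlib or Zipline achieves on production data.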

The company said there were many potential use cases for it but didn’t share how it was going to use the technology itself. Last week, Microsoft revealed the first specific use case and hardware implementation of the technology as part of its hyperscale platform: a hardware accelerator designed to compress and encrypt data at lightning speeds before it gets stored in the cloud.

Microsoft said it developed and open-sourced the algorithm, codenamed Project Zipline, because it is questionable whether the industry can add new storage capacity fast enough to keep up with the explosion of data for the foreseeable future.

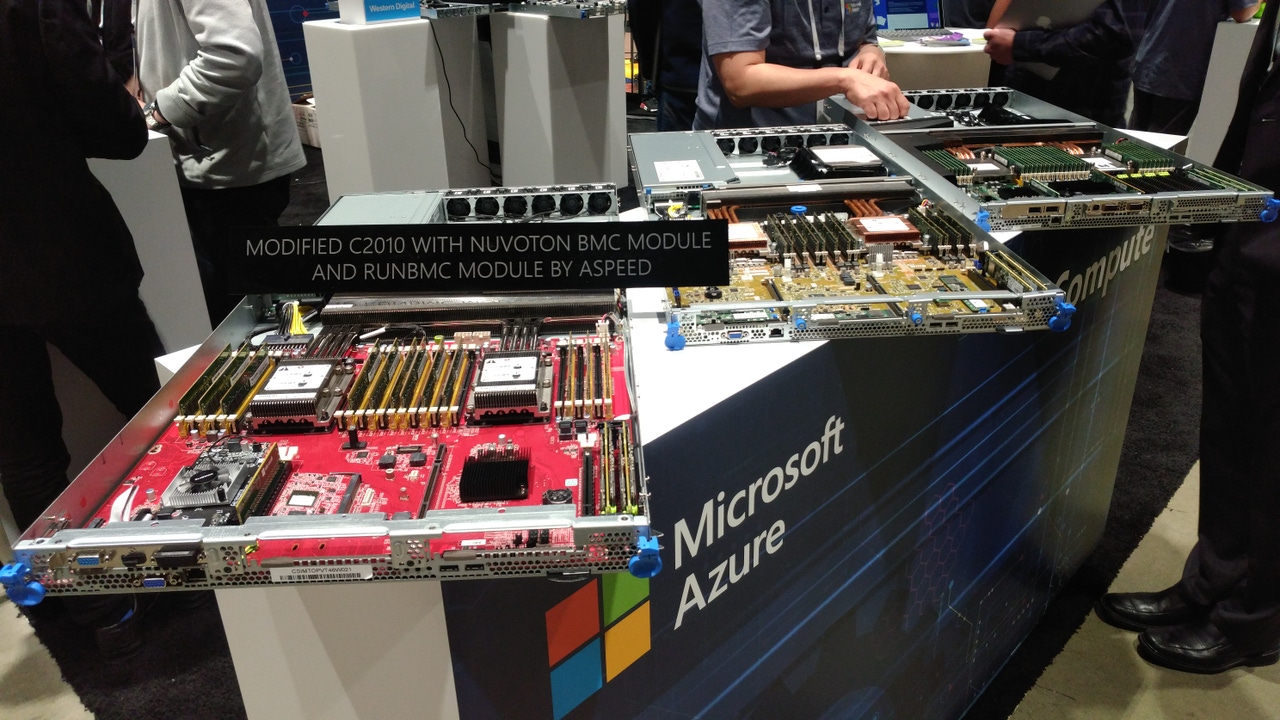

The company, one of the biggest Open Compute Project members, unveiled the compression technology at the OCP Global Summit in San Jose, California, in March. Testing it on data from typical cloud usage, Microsoft engineers saw Zipline shrink datasets to 4, 5, or 8 percent of their uncompressed size.
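Expressing those figures as compression ratios makes them easier to compare with other algorithms. The small sketch below does the arithmetic. The 1,000 TB dataset is a hypothetical size chosen only to make the storage savings concrete.

```python
def ratio_from_percent(percent_of_original: float) -> float:
    """Compression ratio implied by output that is `percent_of_original` % of the input."""
    return 100.0 / percent_of_original

def stored_size(original_tb: float, percent_of_original: float) -> float:
    """Capacity needed, in TB, after compressing `original_tb` to the given percentage."""
    return original_tb * percent_of_original / 100.0

# The three percentages Microsoft cited; the 1,000 TB input is hypothetical.
for pct in (4, 5, 8):
    print(f"{pct}% of original -> {ratio_from_percent(pct):.1f}:1, "
          f"1,000 TB stored as {stored_size(1000, pct):.0f} TB")
```

For the cited figures this gives ratios of 25:1, 20:1, and 12.5:1. A hypothetical 1,000 TB of raw data would then occupy 40, 50, or 80 TB.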

The data explosion companies are dealing with is driven primarily by telemetry data from all the new devices being connected to the internet and corporate networks, Kushagra Vaid, general manager of Azure Hardware Infrastructure, told Data Center Knowledge in an interview at the summit.

“Telemetry, generally speaking, whether it comes from IoT or other sources, that’s probably the biggest one,” he said. As new technologies like autonomous cars proliferate and more mundane things like elevators get connected, the volume of telemetry data is only going to continue exploding.

“That’s what I call the ‘modern dataset’ – all the new telemetry, sensor data,” Vaid said. “That’s why the data explosion is happening, because there are so many new devices and sensors coming online. Where does all that data go? You can either store the data, or you can process it and throw it away.”

Microsoft, it appears, prefers the former option, and Zipline is how it’s planning to cope with the enormous amount of data that needs to be stored.

Encryption and Compression Without a Performance Tax

In a blog post last week, Vaid revealed an ASIC card Microsoft designed together with Broadcom to speed up compression, encryption, and authentication of data stored in Azure. An accelerator is a dedicated piece of hardware, with its own silicon, that offloads a specific function or set of functions from a system’s CPU (or another chip), freeing CPU resources for core computing work.

By offloading encryption, compression, and authentication from Azure server CPUs to its custom ASICs, called Corsica, Microsoft can get more performance out of its servers. Corsica can also do all those things 15 to 25 times faster than CPUs, according to the company, cutting its current disk-write latency by nearly two-thirds:

[Chart: Microsoft’s Corsica ASIC with Zipline]

High-profile security breaches – including users failing to secure their Amazon cloud storage buckets, essentially leaving their data in open access – are now reported on a regular basis, while some tech giants – particularly Facebook – are under scrutiny after a series of embarrassing episodes revealed a cavalier attitude toward user privacy.

Encryption is going to be a big part of the solution to these problems, and Corsica was designed to ensure that encryption didn’t come with a performance tax. According to Microsoft, the accelerator “performs encryption in-line with compression, enabling pervasive encryption with zero system impact.”

It also combines multiple Zipline pipelines to provide 100 Gbps of throughput, so compression and encryption don’t create system bottlenecks.
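Running encryption in-line with compression only works if compression happens first. Well-encrypted output looks like random bytes, and random bytes don't compress. The sketch below demonstrates this with Python's standard library. The cipher is a toy assumption for illustration only, an XOR with a SHAKE-256 keystream, and is not how Corsica encrypts data. The article doesn't say which cipher Corsica uses, and a real system would use an authenticated cipher such as AES-GCM. The sample data is also made up.

```python
import hashlib
import os
import zlib

def toy_keystream_xor(data: bytes, key: bytes, nonce: bytes) -> bytes:
    """TOY cipher for illustration only: XOR with a SHAKE-256 keystream.
    Not authenticated and not a substitute for a real cipher like AES-GCM."""
    keystream = hashlib.shake_256(key + nonce).digest(len(data))
    return bytes(a ^ b for a, b in zip(data, keystream))

key, nonce = os.urandom(32), os.urandom(16)
# Hypothetical, highly repetitive telemetry log (about 100 KB).
plaintext = b"timestamp=1700000000 sensor=elevator-7 status=ok\n" * 2000

# Order 1: compress, then encrypt (the order an in-line pipeline needs).
compress_then_encrypt = toy_keystream_xor(zlib.compress(plaintext), key, nonce)

# Order 2: encrypt, then try to compress the random-looking ciphertext.
encrypt_then_compress = zlib.compress(toy_keystream_xor(plaintext, key, nonce))

print("plaintext:          ", len(plaintext))
print("compress -> encrypt:", len(compress_then_encrypt))
print("encrypt -> compress:", len(encrypt_then_compress))

# XOR with the same keystream undoes the encryption; decompressing restores the input.
restored = zlib.decompress(toy_keystream_xor(compress_then_encrypt, key, nonce))
assert restored == plaintext
```

In the first order the stored output is a small fraction of the input. In the second, zlib finds nothing to remove, and the result is slightly larger than the plaintext.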

Microsoft Seeds an Ecosystem

More implementations of Zipline are sure to come, and possibly not only from Microsoft.

The compression algorithm itself is open, and so is its register-transfer level (RTL) design, which essentially gives vendors what they need to build silicon that implements Zipline. Opening up RTL is rare in the industry and a first for OCP. It means Broadcom and its peers can now incorporate Zipline into everything from network cards and SSDs to CPUs, and that’s what Vaid is hoping will happen.


Kushagra Vaid, general manager of Azure Hardware Infrastructure at Microsoft, speaking at the OCP Global Summit 2019

It’s likely, for example, that a supplier (or several) will implement the compression algorithm in SSDs built to Microsoft’s relatively new OCP spec called Denali. The spec defines an architecture that offloads much of an SSD’s management functionality to an accelerator or to the host CPU.

Vaid showed a prototype Denali SSD at the summit in March, but it will be up to vendors to turn the prototype into a finished product, he said. Because the Zipline RTL is open source, a vendor can implement the compression algorithm in a Denali SSD and potentially win a lot of business from Microsoft, while also adding a piece of cutting-edge technology to its product portfolio.

“Microsoft buys a lot of silicon from the ecosystem,” Vaid told us. “The more people implement this, the more places we can put it into.”
