Google More Than Doubles Its AI Chip Performance with TPU V4

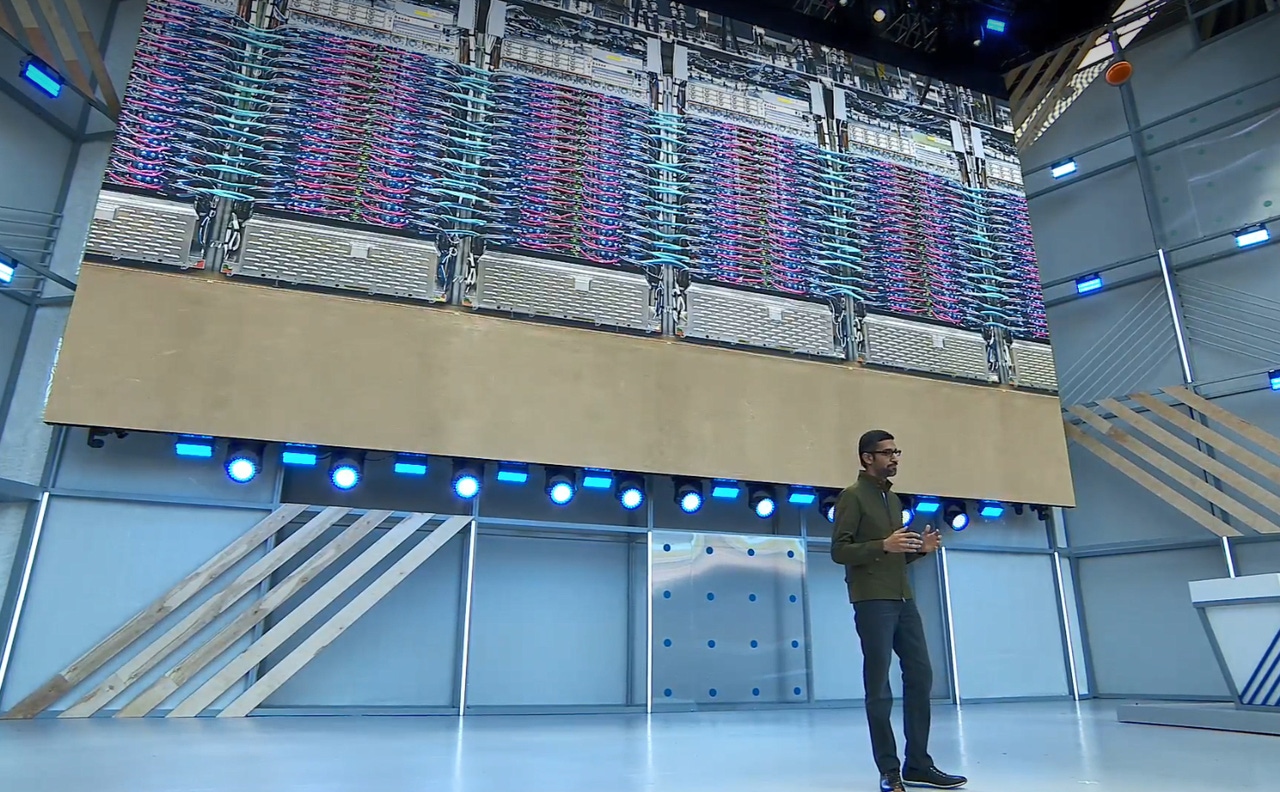

CEO Sundar Pichai said TPU V4 clusters were a “historic milestone” for the company.

Google says that it has designed a new AI chip that’s more than twice as fast as its previous version.

TPU V4 (TPU stands for Tensor Processing Unit) reaches an entirely new height in computing performance for AI software running in Google data centers. A single TPU V4 pod, a cluster of interconnected servers combining about 500 of these processors, is capable of 1 exaFLOP of performance, Google CEO Sundar Pichai said in his livestreamed Google I/O keynote Tuesday morning. (1 exaFLOP is 10^18 floating-point operations per second.)

That’s almost twice the peak performance of Fugaku, the Japanese system at the top of the latest Top500 list of the world’s fastest supercomputers. Fugaku’s peak performance is about 540,000 teraFLOPS.
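The comparison is simple unit arithmetic, and a short Python sketch makes it easy to check. It uses only the two figures quoted above (1 exaFLOP for the pod and roughly 540,000 teraFLOPS for Fugaku); the variable names are illustrative.

```python
# Unit definitions: floating-point operations per second.
TERA = 10**12
EXA = 10**18

# Figures as quoted in the article (both are approximate peak numbers).
tpu_v4_pod_flops = 1 * EXA
fugaku_peak_flops = 540_000 * TERA

# How many times faster the pod's quoted peak is than Fugaku's quoted peak.
ratio = tpu_v4_pod_flops / fugaku_peak_flops
print(f"TPU V4 pod / Fugaku peak: {ratio:.2f}x")
```

The ratio comes out a little under 2, which matches the "almost twice" wording. It compares peak figures only and says nothing about the numerical precision at which each system reaches them.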

Google has said in the past, however, that its TPU pods operate at lower numerical precision than traditional supercomputers do, making it easier to reach such speeds. Deep-learning models for things like speech or image recognition don’t require calculations nearly as precise as traditional supercomputer workloads, which are used for things like simulating the behavior of human organs or calculating space-shuttle trajectories.
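To give a feel for what "lower numerical precision" means, here is a minimal Python sketch that rounds a 64-bit value to a 16-bit floating-point format and measures the error. The article does not say which number format TPUs use; IEEE 754 half precision is an assumption chosen here only because Python's standard `struct` module supports it, and the helper `to_half` is a hypothetical name.

```python
import struct


def to_half(x: float) -> float:
    """Round a 64-bit Python float to IEEE 754 half precision and back.

    Assumption: half precision stands in for "lower precision" in general;
    it is not claimed to be the format TPUs use.
    """
    return struct.unpack("<e", struct.pack("<e", x))[0]


value = 0.1                     # stored as a 64-bit float by Python
half = to_half(value)           # same value after a 16-bit round trip
rel_error = abs(half - value) / value

print(f"64-bit: {value!r}")
print(f"16-bit: {half!r}")
print(f"relative error: {rel_error:.2e}")
```

An error of this size is negligible for tasks like image classification but could accumulate unacceptably in a long-running physical simulation, which is why the two kinds of systems are not directly comparable on FLOPS alone.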

“This is the fastest system we’ve ever deployed at Google and a historic milestone for us,” Pichai said.

A big part of what makes a TPU pod so fast is the interconnect technology that turns hundreds of individual processors into a single system. A TPU pod features “10x interconnect bandwidth per chip at scale than any other networking technology,” said the CEO.

TPU V4 pods will be deployed at Google data centers “soon,” Pichai said.

Google announced its first custom AI chip, designed in-house, in 2016. That first TPU ASIC provided “orders of magnitude” higher performance than the traditional combination of CPUs and GPUs – the most common architecture for training and deploying AI models.

With TPU V3, racks of these servers required so much power that Google had to retrofit its data centers to support liquid cooling, which enables much higher power densities than traditional air-cooled systems.

TPU V2 came in 2017 and TPU V3 the following year. Besides using these systems for its own AI applications, such as search suggestions, language translation, or voice assistants, Google rents TPU infrastructure, including entire TPU pods, to Google Cloud customers.

TPU V4 instances will be available to Google Cloud customers later this year, Pichai said.

Google and other hyperscalers have been increasingly designing custom chips on their own for specific use cases. Google has designed chips for security and more recently for more efficient transcoding of YouTube videos.

AWS offers cloud instances powered by its custom Arm-based Graviton chips. Microsoft is reportedly developing Arm chips for Azure servers and Surface PCs.
