Nvidia Says It's Achieved ‘Near-Bare-Metal’ AI Performance on vSphere

Its new AI Enterprise software suite aims to simplify AI for enterprises, including making it easier to run AI workloads on VMware.

Nvidia and VMware say you no longer have to build custom solutions to give AI workloads running on VMware virtual infrastructure access to GPUs. And running AI workloads on virtualized infrastructure won’t deliver performance much worse than running them on bare metal.

Nvidia this week introduced a software suite designed to make it easier for enterprises to develop and deploy AI workloads on VMware-virtualized infrastructure, either in their own data centers or in the cloud.

The software, called Nvidia AI Enterprise, is a comprehensive set of AI tools and frameworks designed for VMware vSphere 7 Update 2, whose availability was announced this week as well.

“It’s a collection of tools that helps you get started with AI development [and deploy] AI into production,” Justin Boitano, VP and general manager of Nvidia’s Enterprise and Edge Computing, said in an interview with DCK.

Historically, most IT organizations have run AI workloads on bare-metal servers because running AI on virtual servers would result in a big performance hit, he said. However, Nvidia optimized its AI Enterprise software to provide enterprises “near-bare-metal” performance on vSphere, he wrote in a blog post.

Nvidia AI Enterprise, which can be deployed on premises using vSphere or in hybrid cloud environments using VMware Cloud Foundation, is targeted at enterprises in every industry, from healthcare and manufacturing to financial services, and will reduce AI model development time from 80 weeks to 8 weeks, the company said.

“You get into production a year faster,” Boitano said.

Analysts say Nvidia’s partnership with VMware is a natural fit. Nvidia has invested heavily in developing its AI hardware and software business and is the dominant supplier of accelerators used in supercomputers for machine learning and traditional high-performance computing workloads.

The two companies announced plans to integrate Nvidia’s AI software with vSphere, VMware Cloud Foundation, and Tanzu (VMware’s software portfolio for running Kubernetes on any infrastructure) at VMworld last September.

“This partnership totally makes sense,” Gary Chen, IDC’s research director of software-defined compute, told DCK. “To run AI, you need GPUs, and Nvidia makes the GPUs.”

The partnership is also important for VMware because it gives its customers a validated solution for virtualizing their AI workloads, he said.

“Everyone is doing something with AI. It’s a key workload,” Chen said. “In the past, you could access GPUs from VMware, but it was more ‘build it and engineer it yourself.’ Now, with the Nvidia and VMware collaboration, they have a tested, validated solution. This will cut down on a lot of the custom building. It’s less risk for the customer.”

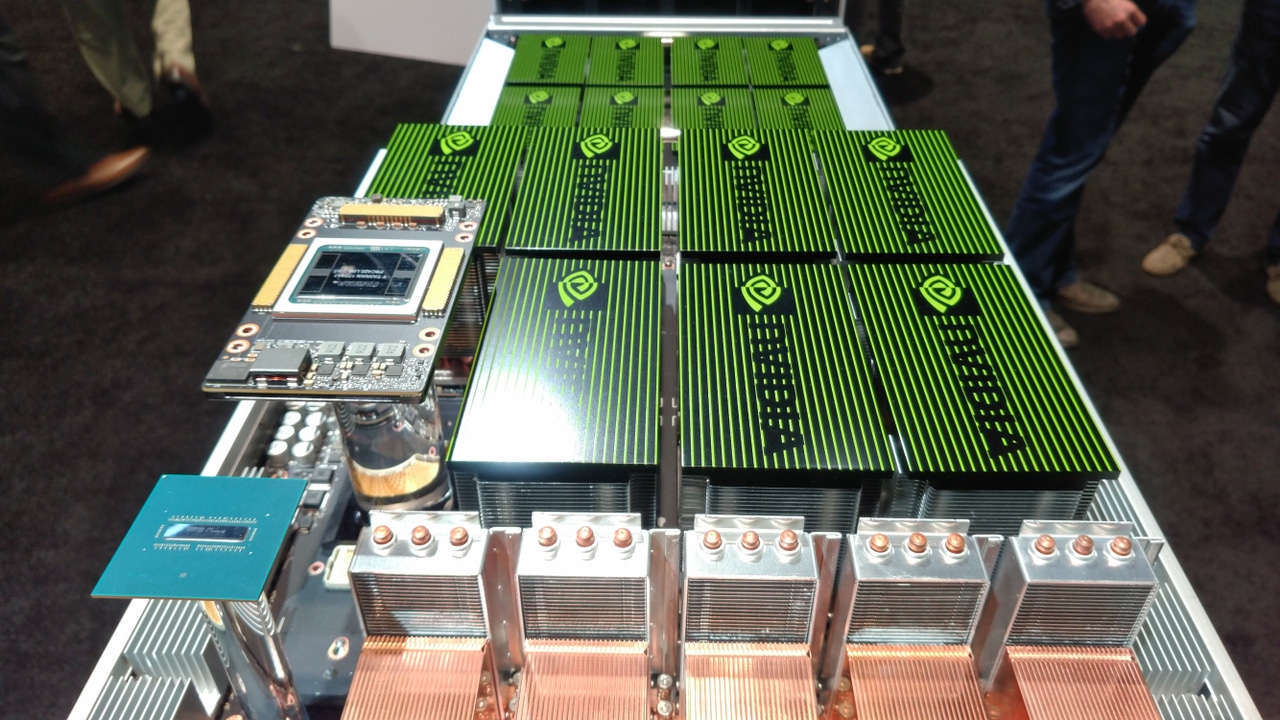

VMware vSphere 7 Update 2 is now certified for Nvidia A100 Tensor Core GPUs on Nvidia-certified systems, including those from Dell, Hewlett-Packard Enterprise, Lenovo, and Supermicro. This certification means that Nvidia will provide direct customer support to vSphere customers that purchase a license for Nvidia AI Enterprise, the companies said.

Nvidia AI Enterprise is available now to early-access customers, meaning you can apply for a test drive.

As part of Tuesday’s announcements, VMware announced that vSphere 7 Update 2 and vSAN 7 Update 2 are also available now.

New features include enhancements that simplify operations, more efficient storage and better security. More specifically, the updated vSphere supports encrypted containers on servers powered by AMD EPYC processors.
