A Short Introduction to Quantum Computing

While the prospect of a quantum notebook or mobile phone looks a very long way off, it’s likely that quantum computers will be widespread in academic and industrial settings – at least for certain applications – within the next three to five years.

December 15, 2021

In 1965, Gordon Moore, who later co-founded Intel, observed that the number of transistors on a microchip had doubled roughly every year since the integrated circuit’s invention, while the cost per transistor kept falling. He later revised the pace to a doubling about every two years, and the trend became known as Moore’s Law.

More than 50 years of chip innovation have made transistors smaller and smaller, to the point where they are approaching physical limits and shrinking them further is becoming increasingly difficult. As a result, improvements in computing are slowing down, and new ways to process information will need to be found if we want to keep reaping the benefits of rapid growth in computing power.

Enter quantum computing – a radical new technology that could have a profound effect on all our lives. It has, for example, the potential to transform medicine and revolutionize the fields of artificial intelligence and cybersecurity.

But what exactly is quantum computing, and how does it differ from the computers we use today? In short, it is fundamentally different. Today’s computers operate using bits, which are best thought of as tiny switches that can be either in the off position (zero) or in the on position (one). Ultimately, all of today’s digital data – whether it’s a website you visit, an app you use or an image you download – is made up of millions of bits, each of them a one or a zero.
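As a rough illustration of how ordinary data reduces to bits, the short Python sketch below (the helper names `to_bits` and `from_bits` are hypothetical, chosen for this example) writes the UTF-8 bytes of a piece of text out as ones and zeroes and then decodes them back. At every moment, each of those bits is definitely a 0 or definitely a 1.

```python
def to_bits(text: str) -> str:
    """Encode text as UTF-8 and write each byte as eight binary digits."""
    return " ".join(format(byte, "08b") for byte in text.encode("utf-8"))


def from_bits(bits: str) -> str:
    """Reverse to_bits: parse each 8-digit group back into a byte, then decode."""
    return bytes(int(group, 2) for group in bits.split()).decode("utf-8")


encoded = to_bits("Hi")
print(encoded)             # → 01001000 01101001
print(from_bits(encoded))  # → Hi
```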

How does quantum computing work?

Instead of bits, a quantum computer uses what’s known as a qubit. The power of qubits lies in how their combined state grows exponentially: a two-qubit machine can hold a superposition of four states at once, a three-qubit machine eight, and a four-qubit machine 16. In general, n qubits span 2^n states, although measuring them returns just one of those states.
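One way to see where the 2^n comes from is to simulate a small qubit register on an ordinary computer. The sketch below is a minimal pure-Python state-vector simulation (the function `uniform_superposition` is our own illustration, not a library API): starting with every qubit at zero, it applies a Hadamard gate to each qubit, which puts the register into an equal superposition of every possible bit string. Note that the simulator has to store 2^n amplitudes, which is why simulating many qubits classically quickly becomes impractical.

```python
import math


def uniform_superposition(n: int) -> list:
    """Start n qubits in |00...0> and apply a Hadamard gate to each qubit.

    Returns the 2**n amplitudes; index i corresponds to the bit string of i.
    """
    state = [0.0] * (2 ** n)
    state[0] = 1.0
    h = 1 / math.sqrt(2)
    for qubit in range(n):
        new_state = [0.0] * len(state)
        for index, amp in enumerate(state):
            if amp == 0:
                continue
            partner = index ^ (1 << qubit)  # same string with this qubit flipped
            if (index >> qubit) & 1 == 0:
                # H|0> = (|0> + |1>) / sqrt(2)
                new_state[index] += h * amp
                new_state[partner] += h * amp
            else:
                # H|1> = (|0> - |1>) / sqrt(2)
                new_state[partner] += h * amp
                new_state[index] -= h * amp
        state = new_state
    return state


for n in range(1, 5):
    state = uniform_superposition(n)
    p = round(abs(state[0]) ** 2, 4)  # every outcome is equally likely
    print(f"{n} qubits -> {len(state)} amplitudes, each outcome has probability {p}")
```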

According to Wired magazine, the difference between a traditional supercomputer and a quantum computer can best be explained by comparing the approaches they might take to get out of a maze. A traditional computer will try every route in turn, ruling out each one until it finds the right one, whereas a quantum computer will go down every route at the same time. “It can hold uncertainty in its head,” claims Wired.

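Rather than having a definite value, an unmeasured qubit exists in a ‘superposition’ of zero and one, similar to a coin spinning through the air before it lands in your hand. Only when it is measured does it settle on one value or the other, with probabilities determined by its state.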
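The spinning-coin picture can be made a little more precise. A single qubit’s state can be written as α|0⟩ + β|1⟩, where α and β are complex numbers with |α|² + |β|² = 1; measuring it gives zero with probability |α|² and one with probability |β|². The sketch below uses hypothetical helpers `measure` and `sample_measurements`, with Python’s `random` module standing in for the randomness of measurement, to simulate measuring many identically prepared qubits.

```python
import cmath
import math
import random


def measure(alpha: complex, beta: complex, rng: random.Random) -> int:
    """Simulate one measurement of the state alpha|0> + beta|1> (Born rule)."""
    p0 = abs(alpha) ** 2
    if not math.isclose(p0 + abs(beta) ** 2, 1.0, abs_tol=1e-9):
        raise ValueError("state must be normalized: |alpha|^2 + |beta|^2 = 1")
    return 0 if rng.random() < p0 else 1


def sample_measurements(alpha: complex, beta: complex, shots: int, seed: int = 0) -> dict:
    """Prepare and measure the same state `shots` times and count the outcomes."""
    rng = random.Random(seed)
    counts = {0: 0, 1: 0}
    for _ in range(shots):
        counts[measure(alpha, beta, rng)] += 1
    return counts


# Example state: cos(pi/6)|0> + e^(i*pi/4) sin(pi/6)|1>, so P(0) = 0.75 and P(1) = 0.25.
# The complex phase on beta does not change these probabilities.
theta, phi = math.pi / 3, math.pi / 4
alpha = math.cos(theta / 2)
beta = cmath.exp(1j * phi) * math.sin(theta / 2)
print(sample_measurements(alpha, beta, shots=10_000))  # roughly 7,500 zeros, 2,500 ones
```

Each individual result is random; only the proportions over many runs reveal the underlying state.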

While a single qubit can’t do much on its own, quantum mechanics has another phenomenon called ‘entanglement’, which links qubits so that their measurement outcomes are correlated and the state of the system can no longer be described one qubit at a time. For example, two qubits in superposition are a bit like two coins tossed at the same time: while they’re in the air, every combination of heads and tails is possible at once. If the qubits are entangled, it is as if the coins were linked so that, say, they always land showing the same face. The more qubits that are entangled together, the more combinations of information can be represented simultaneously.
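To make the difference concrete, the pure-Python sketch below (function names are illustrative) compares two independent ‘coins’, where each qubit is put into superposition separately, with an entangled pair prepared by the standard textbook circuit of a Hadamard gate followed by a CNOT gate, which produces a so-called Bell state. Both give random results, but only the entangled pair always agrees.

```python
import math
import random
from collections import Counter

# Amplitudes are listed in the order |00>, |01>, |10>, |11>;
# the first digit is qubit A, the second is qubit B.
LABELS = ["00", "01", "10", "11"]
H = 1 / math.sqrt(2)


def independent_coins() -> list:
    """A Hadamard on each qubit separately: all four outcomes equally likely."""
    return [0.5, 0.5, 0.5, 0.5]


def bell_pair() -> list:
    """Hadamard on qubit A, then CNOT with A as control and B as target."""
    a00, a01, a10, a11 = 1.0, 0.0, 0.0, 0.0  # start in |00>
    # Hadamard on A: |0b> -> (|0b> + |1b>)/sqrt(2), |1b> -> (|0b> - |1b>)/sqrt(2)
    a00, a01, a10, a11 = H * (a00 + a10), H * (a01 + a11), H * (a00 - a10), H * (a01 - a11)
    # CNOT flips B when A is 1, i.e. it swaps the |10> and |11> amplitudes
    return [a00, a01, a11, a10]


def sample(state: list, shots: int, seed: int = 0) -> Counter:
    """Measure both qubits `shots` times; probabilities are squared amplitudes."""
    rng = random.Random(seed)
    weights = [abs(a) ** 2 for a in state]
    return Counter(rng.choices(LABELS, weights=weights, k=shots))


print(sample(independent_coins(), 1000))  # all four outcomes appear
print(sample(bell_pair(), 1000))          # only "00" and "11" appear
```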

Key quantum applications

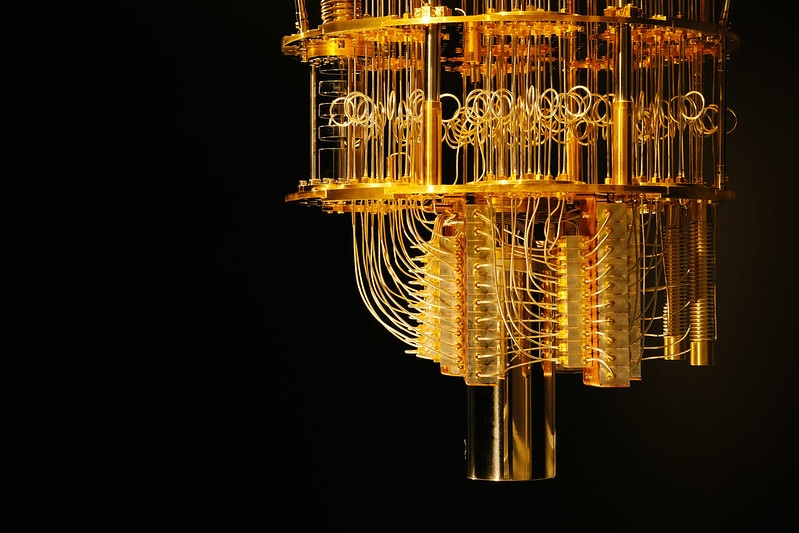

Building a quantum computer is not without its problems. Not only does it have to hold an object in a superposition state long enough to carry out various operations on it, but the technology is also extremely sensitive to noise and environmental effects. Many quantum chips must be kept colder than outer space to create superpositions, and information only remains quantum for a short time before it is lost to the environment, a process known as decoherence.
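A common simplified way to reason about how long quantum information survives is to model coherence as decaying exponentially, roughly exp(-t/T2), where T2 is the qubit’s coherence time. The sketch below uses that model with made-up, purely illustrative numbers (a hypothetical T2 of 100 microseconds and a hypothetical 50-nanosecond gate time, not figures for any real device) to estimate how many operations fit in before much of the quantum information has faded.

```python
import math

# Hypothetical, illustrative parameters (not measured values for any real chip).
T2_US = 100.0          # coherence time in microseconds
GATE_TIME_US = 0.05    # duration of one gate operation in microseconds (50 ns)


def remaining_coherence(elapsed_us: float, t2_us: float = T2_US) -> float:
    """Simple exponential-decay model: fraction of coherence left after elapsed_us."""
    return math.exp(-elapsed_us / t2_us)


def max_gates(threshold: float, t2_us: float = T2_US, gate_us: float = GATE_TIME_US) -> int:
    """Largest number of back-to-back gates before coherence drops below threshold."""
    # Solve exp(-n * gate_us / t2_us) >= threshold for the largest whole n.
    return math.floor(-t2_us * math.log(threshold) / gate_us)


for gates in (100, 1_000, 10_000):
    elapsed = gates * GATE_TIME_US
    print(f"{gates:>6} gates: {remaining_coherence(elapsed):.3f} of coherence left")

print(max_gates(0.5))  # → 1386
```

Real devices lose information through several mechanisms at once, so this single-exponential model is only a first approximation.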

Nevertheless, researchers have predicted that quantum computers could help tackle certain types of problems, especially those involving a daunting number of variables and potential outcomes, such as simulations or optimization problems. For example, they could be used to improve the software of self-driving cars, predict financial markets or model chemical reactions. Some scientists even believe quantum simulations could help find a breakthrough in beating diseases like Alzheimer’s.

Cryptography will be one key application. Many of today’s encryption systems, such as RSA, rely on the fact that breaking a large number down into its prime factors, a process called factoring, is extremely slow for classical computers. A sufficiently large quantum computer running Shor’s algorithm, however, could factor such numbers efficiently. As a result, much of our encrypted data could be put at risk if such a machine fell into the ‘wrong hands’. One way data could be protected is with quantum key distribution, in which encryption keys are shared using quantum states that cannot be copied without the eavesdropping being detected.
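To see why factoring matters, the sketch below builds a toy RSA key from two small primes (textbook-sized numbers; real keys use primes hundreds of digits long) and then shows how an attacker who can factor the public modulus recovers the private key. The factoring step follows the classical reduction at the heart of Shor’s algorithm, which turns factoring into finding the order r of a number a modulo n. On a quantum computer, that order-finding step is the part that would be fast; here the hypothetical helper `multiplicative_order` simply does it by brute force, which only works because n is tiny. The modular inverse `pow(e, -1, phi)` requires Python 3.8 or later.

```python
from math import gcd


def multiplicative_order(a: int, n: int) -> int:
    """Smallest r > 0 with a**r % n == 1 (brute-force classical stand-in for the
    quantum order-finding step of Shor's algorithm; assumes gcd(a, n) == 1)."""
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r


def factor_via_order_finding(n: int) -> tuple:
    """Classical post-processing from Shor's algorithm: use the order of a mod n
    to split n into two nontrivial factors."""
    for a in range(2, n):
        g = gcd(a, n)
        if g > 1:                  # lucky guess: a already shares a factor with n
            return g, n // g
        r = multiplicative_order(a, n)
        if r % 2 == 1:
            continue               # odd order: try another a
        y = pow(a, r // 2, n)
        if y == n - 1:
            continue               # a^(r/2) = -1 mod n only yields trivial factors
        p = gcd(y - 1, n)          # guaranteed nontrivial at this point
        return p, n // p
    raise ValueError("no factor found (is n prime?)")


# Toy RSA key built from tiny, illustrative primes.
p, q = 61, 53
n, e = p * q, 17
d = pow(e, -1, (p - 1) * (q - 1))   # private exponent
ciphertext = pow(65, e, n)          # encrypt the message 65 with the public key (n, e)

# The attacker knows only (n, e) and the ciphertext, and factors n.
fp, fq = factor_via_order_finding(n)
recovered_d = pow(e, -1, (fp - 1) * (fq - 1))
print(sorted((fp, fq)))                 # → [53, 61]
print(pow(ciphertext, recovered_d, n))  # → 65
```

Every step except `multiplicative_order` stays cheap even for very large numbers; it is the order-finding step that becomes infeasible classically at real key sizes, and it is exactly that step Shor’s algorithm would speed up.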

There’s no question that quantum computing could be a revolutionary technology. And while the prospect of a quantum notebook or mobile phone looks a very long way off, it’s likely that quantum computers will be widespread in academic and industrial settings – at least for certain applications – within the next three to five years.
