HBM Chip Shortage: A New Bottleneck in the Data Center Supply Chain

High-bandwidth memory is in even shorter supply than GPUs, potentially hindering the data center industry’s expansion plans.

Just as it seemed that the tech hardware supply chain was recovering from COVID-induced shortages, a new bottleneck has emerged that could affect both the supply of data center GPUs and the expansion plans of data center developers.

In May, South Korean memory manufacturer SK Hynix announced that its supply of high-bandwidth memory (HBM) chips was sold out for 2024 and most of 2025. Its rival Micron had made a similar statement in March. Samsung, the third major HBM supplier, has made no public comment on product availability.

Amid the surge in AI and digital services, the HBM shortage poses a new challenge for data center expansion. While attention has focused on GPU bottlenecks, rising demand for high-bandwidth memory could further constrain the industry’s growth plans.

What Are HBM Chips and Why Are They Needed in Data Centers?

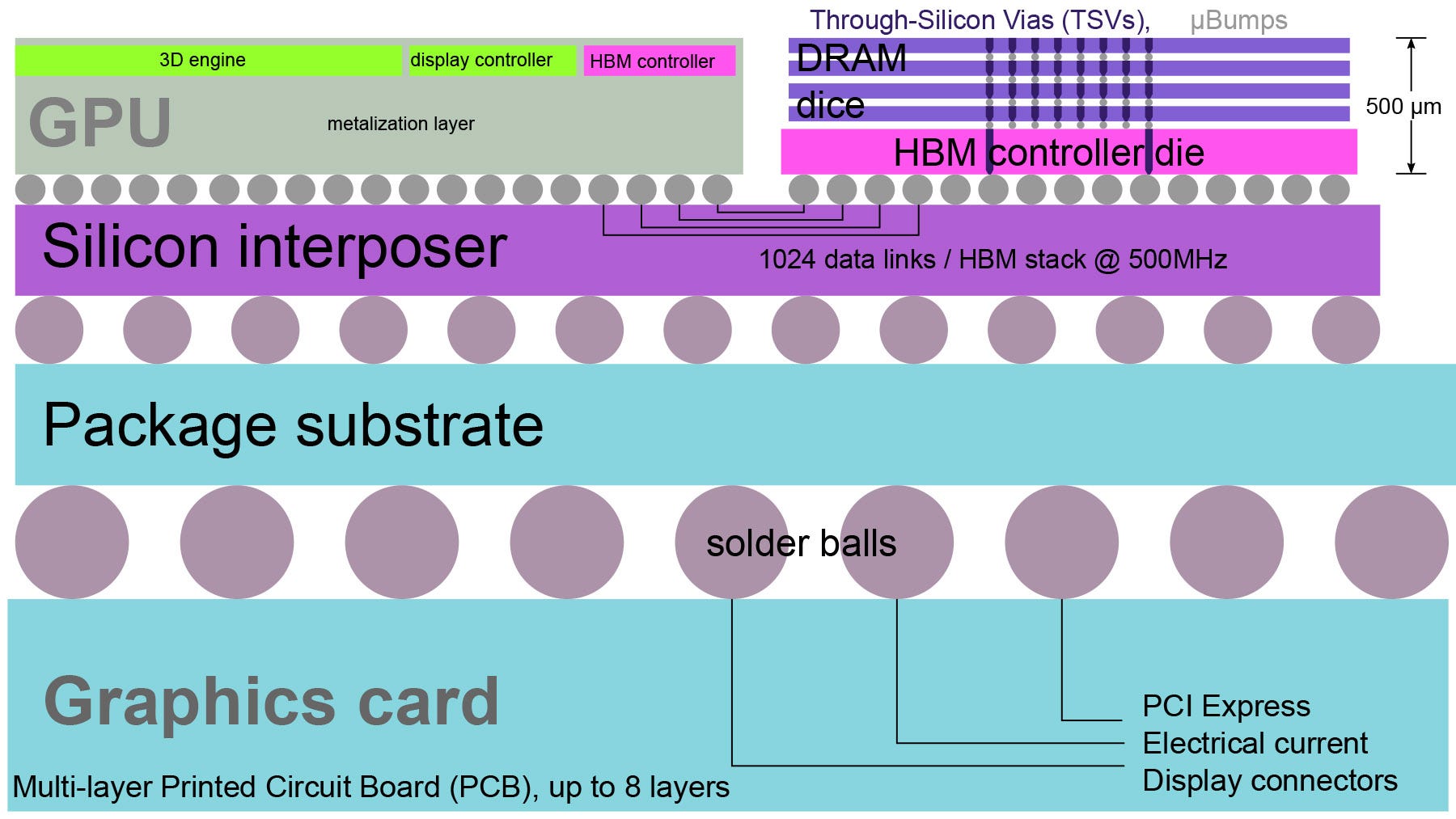

High-bandwidth memory sits inside the GPU package itself, with the memory chips physically placed next to the GPU silicon. Standard DRAM, by contrast, is mounted on DIMM modules that sit next to the CPU.

This design gives HBM much greater bandwidth and shorter signal paths than standard DRAM, which is key to the performance of AI processing.

During the COVID-19 supply chain shortages, carmakers that could not obtain chips simply built vehicles without them and mothballed the cars until the chips arrived.

GPU vendors like Nvidia and AMD don’t have that option. No high-bandwidth memory means GPUs can’t be assembled because the HBM has to be added to the GPU package at the manufacturing stage.

It’s clearly a sensitive subject. When approached by Data Center Knowledge, chip vendors SK Hynix, Micron, Samsung, Nvidia, Intel, and AMD all declined to comment on the issue.

Schematic of a graphics card that uses high-bandwidth memory (Image: Shmuel Csaba Otto Traian, Wikimedia Commons)

TrendForce predicts HBM’s share of total DRAM bit capacity will more than double in 2024, from 2% in 2023 to 5% this year. Looking further ahead, that share is expected to surpass 10% by 2025.

In terms of market value, high-bandwidth memory is projected to account for more than 20% of the total DRAM market value starting in 2024, potentially exceeding 30% by 2025.
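Read together, the two TrendForce projections say something about HBM pricing. The sketch below is an illustration, not a TrendForce calculation: it assumes the 2% and 5% figures measure HBM’s share of DRAM bit volume and the 20% figure measures its share of revenue in the same market, and it treats “more than 20%” as exactly 20%. Under those assumptions, HBM’s share grows 2.5x from 2023 to 2024, and each HBM bit earns roughly 4.75 times the revenue of a bit of conventional DRAM. The helper names are hypothetical.

```python
def growth_factor(old_share: float, new_share: float) -> float:
    """How many times larger the new market share is than the old one."""
    return new_share / old_share


def relative_value_per_bit(value_share: float, bit_share: float) -> float:
    """Revenue per HBM bit divided by revenue per bit of all other DRAM.

    Assumption: value_share (share of revenue) and bit_share (share of
    bit volume) describe the same DRAM market in the same year.
    """
    hbm_per_bit = value_share / bit_share
    other_per_bit = (1 - value_share) / (1 - bit_share)
    return hbm_per_bit / other_per_bit


# 2023 -> 2024 bit-share growth, using TrendForce's 2% and 5% estimates.
print(f"Share growth 2023 -> 2024: {growth_factor(0.02, 0.05):.1f}x")

# 2024 revenue premium per bit, treating "more than 20%" as 20%.
premium = relative_value_per_bit(value_share=0.20, bit_share=0.05)
print(f"HBM revenue per bit vs. other DRAM (2024): {premium:.2f}x")
```

Because 20% is a lower bound, the real per-bit premium implied by these projections would be at least this large.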

Data Center Growth Continues, but HBM Shortage Could Pose Challenges

High-bandwidth memory is more expensive, more difficult, and slower to make than standard DRAM. Memory makers therefore cannot pivot on a dime to ramp up HBM production; as with CPU fabs, new fabrication plants take time to build.

A shortage of data center products could hamper the industry’s expansion and growth plans, but the supply chain is holding up for now. Data center construction marches on even though some companies have to wait for GPU hardware, notes Alan Howard, principal analyst for colocation and data center construction with Omdia.

Demand certainly isn’t slowing. For the 100 companies it tracks, Omdia projects that 37.7 million sq. ft. and 6 GW of planned capacity will come online globally in 2024.

“A GPU shortage is not likely to have a dramatic impact on data center construction plans in the foreseeable future,” Howard told Data Center Knowledge. “The only thing that might put a dent in data center construction, with regard to compute or AI hardware, would be a dramatic and long-term supply chain nightmare. Not likely, but like a pandemic, not impossible.”

And if a significant GPU shortage does happen? “It’ll be deal city,” said Jon Peddie, president of Jon Peddie Research, a tech hardware research firm. “Whichever one of those companies is willing to pay a premium to get at the head of the list, then they’ll get the first shipments, and then those kinds of deals are offered all the time.”

Survival of the Biggest?

The real problem is that this model doesn’t allow for any new entrants into the market in the event of an HBM bottleneck, said Anshel Sag, principal analyst with Moor Insights and Strategy.

“Nvidia is going to get the lion’s share of the HBM, but AMD and others have likely already put their orders in for a while,” Sag explained. “So, if you’re trying to launch something that uses HBM, and you haven’t already negotiated your supply, you’re probably not getting any.”

The shortage also affects smaller players such as SambaNova, which makes dedicated AI processing servers built on its own custom, non-GPU silicon. Sag points out that AMD’s Versal line of FPGA-based chips also uses high-bandwidth memory and could likewise be hit by shortages.

Peddie says there’s already a backlog of GPUs on the order books. He expects Nvidia to ship 800,000 GPUs in Q2, but it could probably sell more. “They probably can’t meet the increase in demand, but they will meet 80% or more of the demand,” he said. “It’s just a little bit of discomfort. You know, it’s like, ‘Gee, I don’t get dessert tonight, but I had a great dinner’.”
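Peddie’s figures imply a rough ceiling on near-term demand. As a back-of-the-envelope sketch, assuming the 800,000 units cover exactly 80% of demand (his lower bound), Q2 demand would be about 1 million GPUs, leaving roughly 200,000 unmet; a higher fill rate shrinks both numbers. The function name and the extra fill rates are hypothetical illustrations, not Peddie’s estimates.

```python
def implied_demand(shipments: float, fill_rate: float) -> float:
    """Demand implied if `shipments` satisfy `fill_rate` (0 to 1] of it."""
    if not 0 < fill_rate <= 1:
        raise ValueError("fill_rate must be in (0, 1]")
    return shipments / fill_rate


# Peddie's Q2 shipment estimate for Nvidia; 80% is his stated lower bound
# on the share of demand met, and 90%/100% are illustrative alternatives.
shipments = 800_000
for fill_rate in (0.80, 0.90, 1.00):
    demand = implied_demand(shipments, fill_rate)
    unmet = demand - shipments
    print(f"fill rate {fill_rate:.0%}: demand ~{demand:,.0f}, unmet ~{unmet:,.0f}")
```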

Sag says the only way he could see memory makers expanding capacity aggressively would be if a vendor like Nvidia asked a third party to build up capacity. “Other companies… have done stuff like that with foundries. Companies like Apple and Qualcomm have provided tools and capital to foundries to accelerate their deployment of certain technologies. So, there is a precedent for chip vendors to provide incentives to foundries to expand capacity or improve their yields,” he said.

Beyond the HBM Shortage: Broader Expansion Challenges

Peddie predicts that data centers currently under construction will get built out, but that going forward, GPU and memory supply issues will be the least of operators’ and builders’ problems. Their bigger struggles include obtaining real estate, securing sufficient power and cooling, and all the other factors that go into building a data center.

“The installed base will get to a certain point, it’ll start to approach demand, and then customers will shrug it off because they will say, ‘We don’t need any more boards right now; we’ve got enough,’” said Peddie. “And not only do we have enough, we have no place to put new ones.”
