Incident Response: Lessons Learned from a Data Center Fire

Industry expert James Monek explains how well-prepared teams can minimize operational disruption even in the face of major disasters.

June 4, 2024

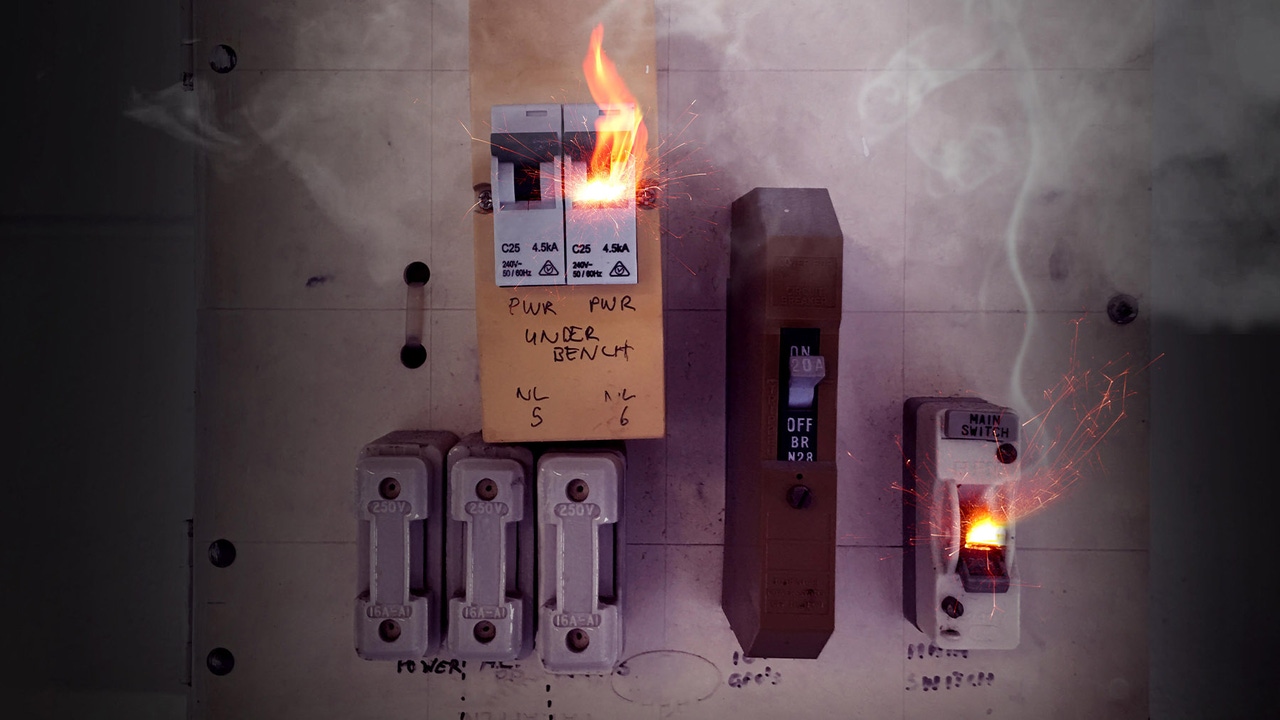

It’s 5am. You are nicely tucked up in bed. The phone rings. It’s a colleague explaining that there is a fire in your data center. You throw on some clothes and rush to work.

What you see there is disturbing. The fire triggered an emergency power-down and the halon system went off, dumping halon gas to protect the equipment. It’s a mess.

In this case, there was a happy ending. Sound incident management, coupled with good business continuity and disaster recovery processes, made it possible to get all services back online fast. Staff then conducted a review to determine what had gone well and what needed improvement.

James Monek, director of technology infrastructure and operations at Lehigh University, Pennsylvania, explained the whole story during a session at Data Center World. He laid out the various surprises he and his team encountered, the many lessons learned, and the moral of the story: how well-prepared teams can work together under immense pressure to prevent disasters from having a devastating impact on operations.

Incident Response: First Steps

Some data center fires may be so serious that it is impossible to enter the premises. Fortunately, the fire was relatively minor on this occasion. However, it set off a chain of events that made it appear worse than it was.

Monek knew not to panic. He and his team had often prepared for this moment via disaster recovery and business continuity drills.

“We just needed to follow our established incident management process,” he said.

That process laid out who declared the emergency, the procedures to follow, who was ultimately responsible for resolving the incident, and the recovery priorities: which operations and service tiers should be restored first and which could wait.

“Clear workflows for incident communication were also part of the equation, as well as spending time after resolution to investigate root causes, lessons learned, and make any revisions to existing incident response methodologies to cope better the next time a disaster occurs.”

Monek added: “Staff performed their roles well as part of a coordinated, divide-and-conquer approach. We arranged technical teams to focus on the recovery of resources and another team tasked with providing leadership with updates and to communicate to the wider college community.”

Data Center Fire: Incident Timeline

The alarm went off shortly after 5am, cutting power to the entire data center and triggering the fire suppression system to flood the facility with halon gas. Monek and others arrived onsite before 6am. They followed their preset priorities to restore power and online access to critical resources and priority applications. By shortly after 10am, most critical resources were back online. The team continued to work through the list of priorities, and by 5pm, all services were available once again.

The next day, halon tanks were removed from the data center and sent out to be refilled. Five days later, refilled tanks were installed. This was the final element needed to restore the fire system to full functionality.

“Seven days after the incident, the fire suppression system was reconfigured, passed an inspection and was brought online,” said Monek.

Incident Review

When the gas dispersed, the dust settled and normal service had resumed, it was time for what Monek terms a “blameless retrospective.”

“The best approach is to focus on continuous improvement when discussing observations and findings, both positive and negative,” he explained.

Everything was documented, and meetings were scheduled with specific team members to follow up on actions needed to resolve problems. In all, 35 to-do items were logged into the ticketing system.

Monek emphasized the value of highlighting what went right. In this case, the fire suppression system worked as designed, the staff made it onsite quickly despite snowy conditions, and video conferencing software was used to keep everyone informed.

Additionally, the staff didn’t panic, and everyone followed the incident response processes correctly. As a result, all services were restored the same day.

“We were happy that our data center power-up process worked perfectly,” said Monek.

That said, the review also identified areas in need of improvement. The biggest challenges during the incident were related to the storage area network (SAN).

“While most of the redundancy built into the services worked as designed, many services were unavailable,” said Monek. “SAN issues were the root cause of many services such as single sign-on, websites, cloud services, and the phone system being inaccessible.”

Because the Lehigh and Library and Technology Services (LTS) websites were down, key channels for communicating with the college community were unavailable. Further, the video system wouldn’t function because it was tied directly to data center power. Phone lists, too, were inaccessible because they were only available online. Finally, Monek noted that there was some confusion about which services were the most critical. Despite all these hurdles, the data center team resolved everything within one day.

Lessons Learned

Monek laid out several lessons learned from the data center fire. He is now a firm believer in following an incident management process no matter the size of the incident, in the value of disaster recovery documentation, and in keeping calling lists available somewhere other than online.

It became clear that separate technical and leadership teams were needed in such situations. Someone needs to be the go-to person for organization leadership, take their calls, and provide them with regular updates. That team also has a responsibility to get the word out effectively to all stakeholders impacted by the outage.

“We found it helpful to develop a more detailed order of operations list for future incidents and to thoroughly test for resiliency,” said Monek. “The bottom line is to trust your teams.”

Data Center Outages are Costly

The rigor of Lehigh’s disaster recovery processes is well-founded. Data center outages are more expensive than ever.

“When outages occur, they are becoming more expensive, a trend likely to continue as dependency on digital services increases,” said Bill Kleyman, Data Center World Program Chair. “With over two-thirds of all outages costing more than $100,000, the business case for investing more in resiliency – and training – is becoming stronger.”

While fires are relatively rare, power outages remain commonplace. Uptime Institute surveys show that 55% of data center operators have had an outage at their site in the past three years. In addition, 4% of operators suffered a severe outage in the past three years, and 6% said they had experienced a serious outage.

“There is a lower frequency of serious or severe outages of late, but those that do occur are often very expensive,” said Uptime Institute CTO Chris Brown.