
Getting Rid of the Deep Learning Silo in the Data Center

Cloud computing was a great solution to capacity management problems. Then came deep learning.

November 10, 2020


Jay Shenoy is VP of Technology at Hyve Solutions. With an electrical engineering education from Purdue University (PhD) and the Indian Institute of Technology Bombay (BS, MS), and nearly 25 years of experience in the semiconductor, systems, and hyperscale service provider industries, he has a broad perspective on hardware design and deployment.

The elasticity of cloud infrastructure is a key enabler for enterprises and internet services, creating a shared pool of compute resources that various tenants can draw from as their workloads ebb and flow. Cloud tenants are spared the details of capacity and supply planning. Cloud providers, whether public or in-house private cloud, benefit from having multiple tenants as interchangeable users of the same underlying hardware. This worked well because modern server systems are very efficient at a multitude of general computing tasks. Deep learning, however, creates new complexities for this model.

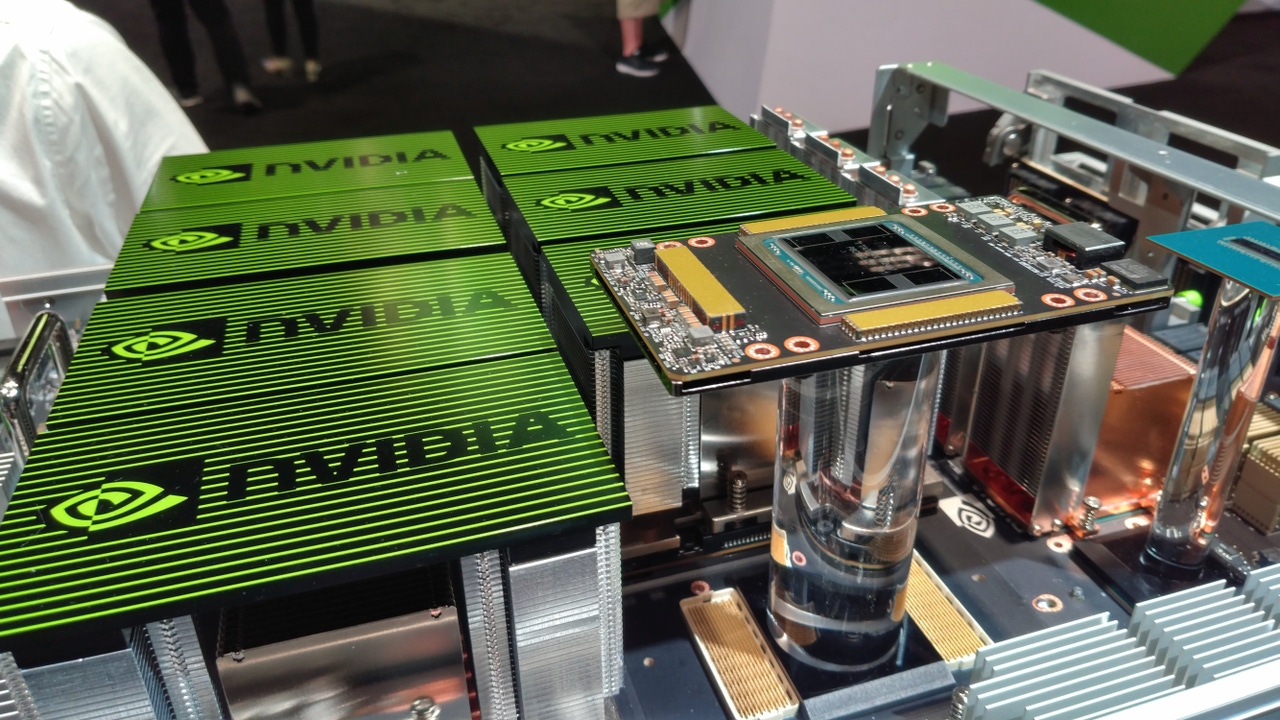

Deep learning differs from general compute in several ways, which has led to heavy use of GPU-based systems that are distinct from general compute systems. Additionally, deep learning workloads have split into single-node training, distributed training, and inference, and even among GPU-based systems, each of the three is best served by a different type of server.

This specialization of hardware, while undoubtedly leading to significant performance improvements, runs counter to the elasticity of clouds. With specialized hardware, the cloud infrastructure provider needs to maintain separate pools for general compute, training, distributed training, and inference. Tenants are interchangeable only within a pool, and capacity and supply planning must be done separately for each pool.

Figure 1 shows a simplified example of two pools of hardware and how the capacity planning uncertainties are additive to the overall capacity planning problem:

[Figure 1: Two separate hardware pools whose capacity planning uncertainties add up]
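To make the additive effect concrete, here is a minimal Python sketch under stated assumptions: demand in each pool is independent and normally distributed, each pool is sized so that demand exceeds supply only 1% of the time, and the per-pool standard deviations in `POOL_SIGMAS` are hypothetical numbers, not data from the article. Planning each pool on its own means the headroom terms simply add, whereas a single shared pool only needs headroom for the combined variance.

```python
import math
from statistics import NormalDist

# Hypothetical demand uncertainty (standard deviation, in servers) for the
# four pools named in the text. These are illustrative numbers only.
POOL_SIGMAS = {
    "general compute": 40.0,
    "training": 15.0,
    "distributed training": 20.0,
    "inference": 25.0,
}


def headroom(sigma, service_level=0.99):
    """Spare capacity so that demand exceeds supply only (1 - service_level)
    of the time, assuming normally distributed demand."""
    return NormalDist().inv_cdf(service_level) * sigma


def separate_headroom(sigmas, service_level=0.99):
    # Each pool is planned on its own, so the per-pool buffers add up.
    return sum(headroom(s, service_level) for s in sigmas)


def shared_headroom(sigmas, service_level=0.99):
    # If every tenant could draw from one pool and demands are independent,
    # the variances add, not the standard deviations.
    return headroom(math.sqrt(sum(s * s for s in sigmas)), service_level)


sigmas = list(POOL_SIGMAS.values())
print(f"separate pools: {separate_headroom(sigmas):.1f} servers of headroom")
print(f"one shared pool: {shared_headroom(sigmas):.1f} servers of headroom")
```

The shared-pool number is an idealized lower bound, since it assumes any workload can run on any server; specialized deep learning hardware is exactly what prevents that.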

There will be a place for specialized hardware for deep learning in the foreseeable future. However, what we are learning is that deep learning is a spectrum of applications, and not all of them benefit equally from acceleration by a GPU. Moreover, general-purpose CPUs, originally optimized for scalar processing, have improved vector processing significantly and can be expected to adopt matrix processing capabilities in the future. The reality is that not all deep learning models have large data sets requiring large training clusters, particularly when pre-existing models are used as a starting point and then tweaked with re-training.

As such, if CPUs gain additional features for vector and matrix computation, it makes sense to optimize CPU-based servers for deep learning. Building large servers is a good first step. An eight-socket server has four times the compute capability of a standard two-socket server, which is very beneficial for deep learning. Adding network capability to such a server improves its performance in clusters for distributed training, and with n×100GbE links we have a scalable interconnect for this purpose. With the help of a cloud scheduling platform such as Kubernetes, such a large server remains equally adept at general computing tasks. The server thus becomes a fungible computing element that can be used for all general computing tasks, all inference applications, and a significant number of less demanding training applications in a converged infrastructure.
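A rough back-of-the-envelope sketch of the two hardware claims, assuming compute scales linearly with socket count for identical CPUs and that gradients are synchronized with a ring all-reduce (the article does not name a synchronization scheme). The bandwidth-only ring all-reduce model, in which each of `p` nodes transfers about 2(p - 1)/p of the gradient data, ignores latency and protocol overhead; the model size and node count below are hypothetical.

```python
def relative_compute(sockets, baseline_sockets=2):
    """Compute capability relative to a baseline server, assuming identical
    CPUs and linear scaling with socket count."""
    return sockets / baseline_sockets


def ring_allreduce_seconds(model_bytes, nodes, links, link_gbps=100.0):
    """Bandwidth-only estimate of one ring all-reduce of model_bytes across
    `nodes` servers, each with `links` x link_gbps Ethernet ports.
    Latency and protocol overhead are ignored (an assumption)."""
    bytes_per_second = links * link_gbps * 1e9 / 8
    # In a ring all-reduce each node sends 2 * (p - 1) / p of the data.
    return 2 * (nodes - 1) / nodes * model_bytes / bytes_per_second


print(relative_compute(8))  # 4.0

# Hypothetical job: 400 MB of gradients (about 100M fp32 parameters), 4 servers.
for links in (1, 2, 4):
    t = ring_allreduce_seconds(model_bytes=400e6, nodes=4, links=links)
    print(f"{links} x 100GbE: {t * 1000:.1f} ms per gradient sync")
```

Under these assumptions, doubling the number of 100GbE links halves the estimated synchronization time, which is why extra network ports matter for distributed training on large CPU servers.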

Let’s revisit the example in Figure 1 with a fungible computing system. Such a server is adequate for a large number of low-end deep learning use cases, and specialized hardware can then be reserved for the most demanding deep learning workloads. Deep learning training is a great fit for a converged fungible infrastructure because it is a batch process and can therefore be preempted when demand for general-purpose compute increases.
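The preemption argument can be illustrated with a toy single-node scheduler. This is a hypothetical sketch, not how Kubernetes works internally (Kubernetes provides its own pod priority and preemption mechanism): `Job`, `FungibleNode`, and the 224-core capacity (for example, eight sockets of 28 cores) are invented for illustration. Non-preemptible general-purpose jobs may evict preemptible training jobs, which are requeued rather than lost.

```python
from dataclasses import dataclass, field


@dataclass
class Job:
    name: str
    cores: int
    preemptible: bool  # batch deep learning training is marked preemptible


@dataclass
class FungibleNode:
    capacity: int  # total cores on the node (hypothetical)
    running: list[Job] = field(default_factory=list)
    requeued: list[Job] = field(default_factory=list)

    def free_cores(self):
        return self.capacity - sum(j.cores for j in self.running)

    def submit(self, job):
        """Place a job; a non-preemptible job that does not fit may evict
        preemptible jobs. Returns True if the job is now running."""
        if job.cores <= self.free_cores():
            self.running.append(job)
            return True
        if job.preemptible:
            self.requeued.append(job)  # training waits for capacity
            return False
        victims = [j for j in self.running if j.preemptible]
        reclaimable = self.free_cores() + sum(v.cores for v in victims)
        if job.cores > reclaimable:
            return False  # even evicting all training jobs would not help
        # Evict the largest training jobs first to disturb as few as possible.
        for victim in sorted(victims, key=lambda j: j.cores, reverse=True):
            if job.cores <= self.free_cores():
                break
            self.running.remove(victim)
            self.requeued.append(victim)
        self.running.append(job)
        return True


node = FungibleNode(capacity=224)
node.submit(Job("finetune-a", 128, preemptible=True))
node.submit(Job("web-frontend", 64, preemptible=False))
node.submit(Job("batch-etl", 96, preemptible=False))  # evicts finetune-a
print([j.name for j in node.running])   # ['web-frontend', 'batch-etl']
print([j.name for j in node.requeued])  # ['finetune-a']
```

Evicting the largest training job first is one simple policy choice; a real scheduler would also weigh job priority, checkpoint age, and fairness.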

[Figure 2: A pool of fungible computing added alongside the general compute and deep learning pools]

In this scenario, we can reduce the planning uncertainties for both general compute and specialized deep learning hardware by creating a pool of fungible computing, as seen in Figure 2. In this example, adding a new fungible pool of servers reduces overall capacity uncertainty. Thus, fungible computing provides a valuable mechanism for simplifying capacity planning of cloud infrastructures.
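The effect shown in Figure 2 can also be checked with a small Monte Carlo sketch. All inputs are hypothetical: general and deep learning demand are independent normal random variables with made-up means and standard deviations; the Figure 1 arrangement sizes each dedicated pool at its own 99th percentile; and the Figure 2 arrangement sizes the dedicated pools at mean demand and lets one fungible pool absorb the overflow from either side, sized at the 99th percentile of that overflow.

```python
import random


def quantile(samples, q):
    """Empirical quantile: the value at position q of the sorted samples."""
    ordered = sorted(samples)
    return ordered[int(q * (len(ordered) - 1))]


def simulate(mu_g=100.0, sigma_g=10.0, mu_d=50.0, sigma_d=10.0,
             q=0.99, trials=100_000, seed=0):
    """Return (total capacity with dedicated pools only,
    total capacity with dedicated pools plus a fungible pool)."""
    rng = random.Random(seed)
    general = [rng.gauss(mu_g, sigma_g) for _ in range(trials)]
    deep = [rng.gauss(mu_d, sigma_d) for _ in range(trials)]

    # Figure 1 style: each dedicated pool is sized for its own q-quantile.
    separate_total = quantile(general, q) + quantile(deep, q)

    # Figure 2 style: dedicated pools sized at mean demand, plus one fungible
    # pool that absorbs whatever overflows from either dedicated pool.
    overflow = [max(g - mu_g, 0.0) + max(d - mu_d, 0.0)
                for g, d in zip(general, deep)]
    fungible_total = mu_g + mu_d + quantile(overflow, q)
    return separate_total, fungible_total


separate_total, fungible_total = simulate()
print(f"dedicated pools only: {separate_total:.1f} units")
print(f"with a fungible pool: {fungible_total:.1f} units")
```

With these numbers the fungible arrangement needs less total capacity because overflow peaks in the two pools rarely coincide. Positively correlated demand in practice would shrink that benefit.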

Opinions expressed in the article above do not necessarily reflect the opinions of Data Center Knowledge and Informa.

Industry Perspectives is a content channel at Data Center Knowledge highlighting thought leadership in the data center arena.
